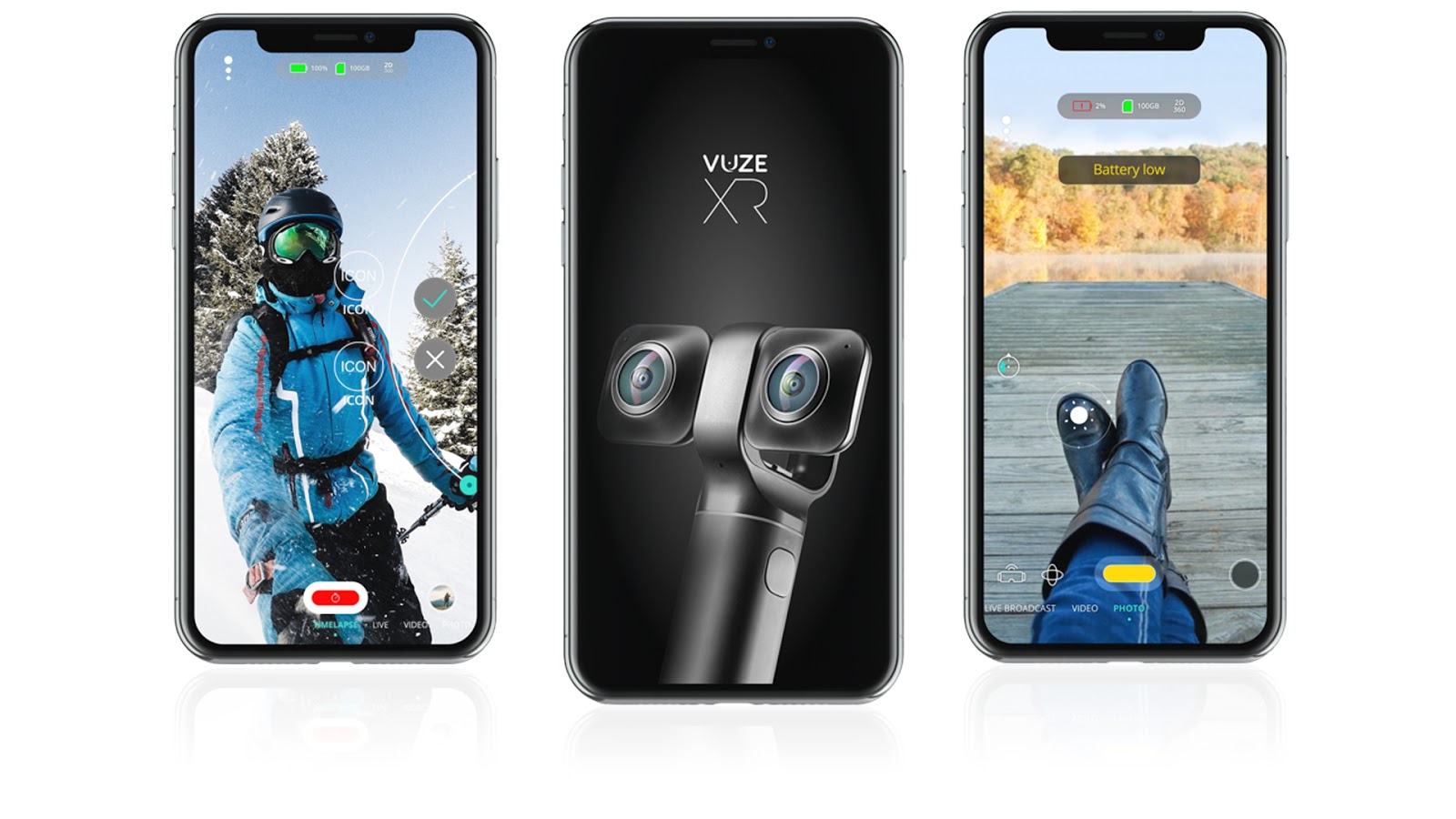

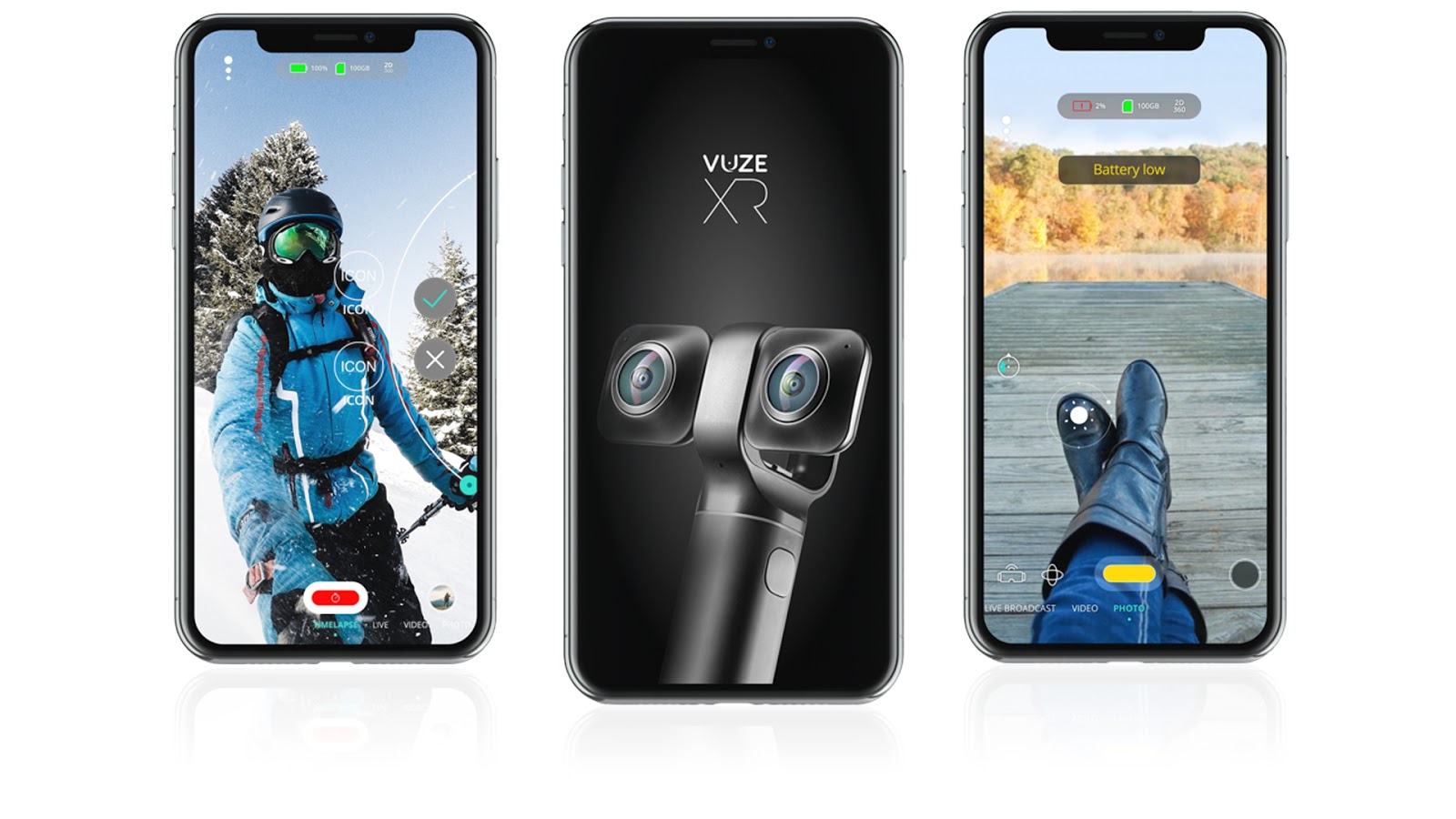

VuzeCamera is the first consumer 360° 3D camera, and a new dual camera that gives anyone the power to create and share immersive 360° or VR180 (3D) photos and videos.

The device regularly appears in rankings of the best cameras and has been praised in numerous reviews. A couple of years ago, a Vuze camera went to the International Space Station to shoot the first-ever 3D VR movie filmed in space.

Introduction to the Project

Initially, the NIX team was tasked with designing a video editor app with features that few had implemented before. Two years ago, at the start of this project, few cameras could shoot 360 video and few players supported it, so there were almost no examples of technical solutions. In fact, there was only one: Google's SDK for the Google Cardboard product, which could display 360 video.

In a pilot project built on this SDK, we showed that we could play 360 videos both in 3D and as a full frame in equirectangular projection.

But then the team had to develop photo and video processing features, and this is where our first difficulties began.

Render Selection

Because of its lack of flexibility, we had to drop GPUImage, the standard solution for such tasks. The library fixed the order in which effects were applied: everything had to happen before the frame was downscaled and displayed. With 4K video today and 5K coming, running every effect at full resolution did not seem like a good idea. We were also concerned that GPUImage's fixed pipeline could cause problems when streaming from the camera in different modes: we needed not only to show the video from one camera (actually, from two), but also to stitch it, apply stabilization when necessary, and perform other tasks.
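To put the resolution argument in numbers, here is a back-of-the-envelope sketch. A per-pixel effect costs one shader invocation per output pixel, so running it before downscaling multiplies the work by the ratio of pixel counts, and that cost repeats for every effect in the chain. The frame sizes (3840×2160 for a 4K source, 1920×1080 for a display-sized viewport) and the 30 fps rate are illustrative assumptions, not the Vuze camera's actual specifications.

```python
def shader_invocations_per_second(width: int, height: int, fps: int) -> int:
    """A per-pixel effect runs once for every output pixel of every frame."""
    return width * height * fps


# Illustrative sizes only (assumptions, not the camera's real specs).
full_res = shader_invocations_per_second(3840, 2160, 30)  # effect applied before downscaling
viewport = shader_invocations_per_second(1920, 1080, 30)  # effect applied after downscaling

print(f"full resolution: {full_res:,} invocations/s")
print(f"display size:    {viewport:,} invocations/s")
print(f"ratio:           {full_res / viewport:.1f}x")
```

This prints 248,832,000 and 62,208,000 invocations per second, a 4.0× difference for a single effect pass.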

It became clear that to process both photos and video, we needed to work with OpenGL directly.

We had some experience with 3D and OpenGL, but only with simple tasks like displaying a picture or model or applying filters and shaders. For several reasons, that experience was not enough for the task the team was facing.

A defining feature of any editor is the effect chain: effects or transformations are applied one after another, and the user can change their order and turn individual steps on or off.

At first, we only knew how to render a frame with effects directly to the screen. Framebuffer objects (FBOs), OpenGL's mechanism for offscreen rendering, helped us overcome this limitation. The real challenge was understanding and applying this mechanism, especially given how hard it is to debug: you seem to know exactly how everything should work, and yet it doesn't.
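An effect chain on top of offscreen rendering is commonly built with the ping-pong pattern: two offscreen targets alternate as source and destination, so each enabled effect reads the previous result and writes a new one, and only the final target is shown or exported. Below is a minimal CPU-side sketch, where lists of 8-bit values stand in for textures and plain functions stand in for shader passes; `Effect` and `render_chain` are hypothetical names for illustration. On the GPU, each target would be a texture attached to its own framebuffer object (created with `glGenFramebuffers` and `glFramebufferTexture2D`), and each pass would bind one FBO while sampling the other's texture.

```python
from dataclasses import dataclass
from typing import Callable, List

Image = List[int]  # stand-in for a texture: 8-bit values, one per pixel


@dataclass
class Effect:
    name: str
    apply: Callable[[Image], Image]  # stand-in for one shader pass
    enabled: bool = True


def render_chain(source: Image, effects: List[Effect]) -> Image:
    """Apply enabled effects in list order, ping-ponging between two offscreen targets."""
    targets = [list(source), [0] * len(source)]  # stand-ins for two FBO-backed textures
    read = 0
    for effect in effects:
        if not effect.enabled:
            continue  # a disabled step is simply skipped; no extra copy is needed
        write = 1 - read
        targets[write][:] = effect.apply(targets[read])  # "draw" into the other target
        read = write  # this result becomes the input of the next pass
    return targets[read]


brighten = Effect("brighten", lambda img: [min(255, p + 50) for p in img])
invert = Effect("invert", lambda img: [255 - p for p in img])

frame = [0, 100, 255]
print(render_chain(frame, [brighten, invert]))  # [205, 105, 0]
print(render_chain(frame, [invert, brighten]))  # [255, 205, 50]
```

Reordering the list or flipping `enabled` changes the output without touching the effects themselves, which is what an editor's effect stack needs; the source frame is never modified.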

Little Planet Mode

One important feature was Little Planet mode, a popular photo/video effect in which the horizon line is curled into a circle so that the result looks like a tiny planet. It is usually implemented with a single pixel shader, but that approach did not seem justified to us: with 5K footage and many new filters coming, we would be spending the iPhone's resources on a geometric transformation for every single pixel.
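For comparison, here is what the conventional per-pixel approach computes. A Little Planet image is a stereographic projection of the sphere; a pixel shader typically inverts that projection for each output pixel, converts the resulting point to longitude and latitude, and samples the equirectangular frame there. The sketch below shows that math in Python; the function name and the unit-radius convention (the horizon lands on the circle of radius 1, the ground at the center) are assumptions for illustration. In a shader, this body would run once per output pixel, every frame.

```python
import math


def little_planet_sample(u: float, v: float, width: int, height: int):
    """Map an output point (u, v) on the Little Planet plane to a source pixel
    in a width x height equirectangular frame.

    Assumed convention: inverse stereographic projection from the north pole,
    so (0, 0) is the nadir (the ground) and the horizon is the circle u^2 + v^2 = 1.
    """
    r2 = u * u + v * v
    # Inverse stereographic projection onto the unit sphere.
    x = 2 * u / (1 + r2)
    y = 2 * v / (1 + r2)
    z = (r2 - 1) / (r2 + 1)
    lon = math.atan2(y, x)                   # -pi..pi
    lat = math.asin(max(-1.0, min(1.0, z)))  # -pi/2 (nadir)..pi/2 (zenith)
    # Equirectangular lookup: longitude spans the width, latitude the height (top row = zenith).
    sx = (lon + math.pi) / (2 * math.pi) * width
    sy = (math.pi / 2 - lat) / math.pi * height
    return sx, sy


print(little_planet_sample(0.0, 0.0, 4000, 2000))  # (2000.0, 2000.0): bottom row, the nadir
print(little_planet_sample(1.0, 0.0, 4000, 2000))  # (2000.0, 1000.0): middle row, the horizon
```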

Instead, we achieved exactly the same effect by transforming the view matrix. Our solution turned out to be the most efficient one and quite unique: at the time, there was nothing like it on the internet.
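One way to see why a view matrix can reproduce the effect: stereographic projection is, by definition, a projection from a point on the sphere. If the virtual camera is moved from the center of the unit sphere to its north pole and pointed at the south pole, an ordinary pinhole (perspective) projection with focal length 1 lands every sphere point exactly where the stereographic formula puts it. The Python sketch below checks this numerically; the `look_at` helper follows the usual gluLookAt convention, and the whole setup is an illustrative assumption, not necessarily the exact matrices NIX used. The equivalence holds exactly at mesh vertices; the rasterizer interpolates linearly between them, so the sphere needs fine tessellation.

```python
import math


def sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def dot(a, b):
    return sum(a[i] * b[i] for i in range(3))


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]


def normalize(a):
    n = math.sqrt(dot(a, a))
    return [c / n for c in a]


def look_at(eye, target, up):
    """4x4 row-major view matrix, OpenGL convention: the camera looks down its -Z axis."""
    f = normalize(sub(target, eye))
    r = normalize(cross(f, up))
    u = cross(r, f)
    return [
        [r[0], r[1], r[2], -dot(r, eye)],
        [u[0], u[1], u[2], -dot(u, eye)],
        [-f[0], -f[1], -f[2], dot(f, eye)],
        [0.0, 0.0, 0.0, 1.0],
    ]


def project(view, p, focal=1.0):
    """Transform a world point into camera space, then apply a pinhole projection."""
    x, y, z = (sum(view[row][i] * p[i] for i in range(3)) + view[row][3] for row in range(3))
    return focal * x / -z, focal * y / -z


def stereographic(p):
    """Stereographic projection from the north pole (0, 0, 1) onto the plane z = 0."""
    x, y, z = p
    return x / (1 - z), y / (1 - z)


# Camera sitting on the north pole of the unit sphere, looking at the south pole.
view = look_at(eye=[0.0, 0.0, 1.0], target=[0.0, 0.0, -1.0], up=[0.0, 1.0, 0.0])

for lat_deg, lon_deg in [(-90, 0), (-45, 30), (0, 120), (30, -75)]:
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    p = [math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat)]
    print(project(view, p), stereographic(p))  # the two pairs agree up to rounding
```

Points near the camera (close to the north pole, i.e. the sky) project far from the center and are eventually cut off by the near plane and the field of view, which matches the look of the effect.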

Exporting Videos

Once we could edit video at runtime using FBOs and had the Little Planet effect working, it seemed that life was good. Then it was time to save the result, and it turned out that none of this meant anything yet. As we say, what happens on the screen stays on the screen.

We were lucky to have AVFoundation, one of the long-standing foundations of Apple's success (along with Core Animation and Core Audio). Once we figured out how to export video through an OpenGL compositor, it turned out that the current architecture was not quite suitable: we had a separate rendering flow just for export. In the end, we refactored the code and merged everything into a single rendering stream.

A big plus was that we did not need to encode the video ourselves: AVFoundation did it simply and quickly using hardware acceleration (somewhere, an Android developer cried).

However, the main obstacle in export was metadata. If you open a 360 video with the proper metadata on YouTube, it plays in a special player where you can spin it in any direction and even view it with Google Cardboard.

Our video was shown as is: the whole frame, with its equirectangular distortions. It turned out that neither AVFoundation nor UIKit/Core Image could help here. More precisely, they can write metadata, but it is up to the developer to decide what to write and where. Moreover, AVFoundation could only write iTunes-style metadata. We had to figure out what the 3D metadata actually looked like. The topic turned out to be substantial enough that we gave a talk on it at one of the NIX conferences.
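For reference, one format YouTube recognizes is Google's Spherical Video V1 metadata, described in the RFC of Google's spatial-media project: an RDF/XML document stored in an MP4 `uuid` box with the extended type `ffcc8263-f855-4a93-8814-587a02521fdd`, placed inside the video track's `trak` box. The Python sketch below only builds that box; the function name is hypothetical, and splicing the box into a real file (growing the enclosing `trak` and `moov` sizes, and shifting `stco`/`co64` chunk offsets when `moov` precedes `mdat`) is not shown. Newer files use the V2 `st3d`/`sv3d` boxes instead, and this is not necessarily the format NIX ended up writing.

```python
import struct
import uuid
from typing import Optional
from xml.sax.saxutils import escape

# Extended type that the Spherical Video V1 RFC assigns to its uuid box.
SPHERICAL_UUID = uuid.UUID("ffcc8263-f855-4a93-8814-587a02521fdd")
STEREO_MODES = ("mono", "top-bottom", "left-right")


def spherical_v1_box(software: str, stereo_mode: Optional[str] = None) -> bytes:
    """Build a complete MP4 'uuid' box carrying Spherical Video V1 XML."""
    stereo = ""
    if stereo_mode is not None:
        if stereo_mode not in STEREO_MODES:
            raise ValueError(f"unsupported stereo mode: {stereo_mode}")
        stereo = f"<GSpherical:StereoMode>{stereo_mode}</GSpherical:StereoMode>"
    xml = (
        '<?xml version="1.0"?>'
        "<rdf:SphericalVideo"
        ' xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"'
        ' xmlns:GSpherical="http://ns.google.com/videos/1.0/spherical/">'
        "<GSpherical:Spherical>true</GSpherical:Spherical>"
        "<GSpherical:Stitched>true</GSpherical:Stitched>"
        f"<GSpherical:StitchingSoftware>{escape(software)}</GSpherical:StitchingSoftware>"
        "<GSpherical:ProjectionType>equirectangular</GSpherical:ProjectionType>"
        f"{stereo}"
        "</rdf:SphericalVideo>"
    ).encode("utf-8")
    # Box layout: 32-bit big-endian size of the whole box, 4-byte type,
    # 16-byte extended type, then the XML payload.
    size = 4 + 4 + 16 + len(xml)
    return struct.pack(">I", size) + b"uuid" + SPHERICAL_UUID.bytes + xml


box = spherical_v1_box("Example Stitcher", stereo_mode="top-bottom")
print(len(box), box[4:8], box[8:24].hex())
```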

Downloading Issues

It is well known that iOS generally offers more complete and polished solutions than Android, both for users and for developers. So it was hard for the team to accept that downloading a video file from the camera was much faster on an Android device.

At first, it seemed that this was just a default, safer mode, and that once we had free time we would tune the speed and priorities. The reality was brutal: no URLSession setting on its own made the download any faster.

The solution was to parallelize access to parts of the file, which brought the download speed up to the level of Android devices.
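A minimal sketch of parallel partial downloads, assuming the camera's HTTP server honors `Range` requests (answering with 206 Partial Content) and that the file size is known up front. `fetch_range` is a hypothetical callback: in a real client it would send `range_header(start, end)` and return the body. On iOS, each call would be a URLSession data task with that header set, and `URLSessionConfiguration.httpMaximumConnectionsPerHost` caps how many of them run at once.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Tuple


def byte_ranges(total_size: int, part_count: int) -> List[Tuple[int, int]]:
    """Split [0, total_size) into contiguous, inclusive (start, end) ranges."""
    base, extra = divmod(total_size, part_count)
    ranges, start = [], 0
    for i in range(part_count):
        length = base + (1 if i < extra else 0)
        if length == 0:
            continue  # more parts than bytes
        ranges.append((start, start + length - 1))
        start += length
    return ranges


def range_header(start: int, end: int) -> dict:
    """HTTP Range header for an inclusive byte range."""
    return {"Range": f"bytes={start}-{end}"}


def parallel_download(total_size: int, fetch_range: Callable[[int, int], bytes],
                      part_count: int = 4) -> bytes:
    """Fetch all ranges concurrently and reassemble them in order."""
    ranges = byte_ranges(total_size, part_count)
    if not ranges:
        return b""
    with ThreadPoolExecutor(max_workers=len(ranges)) as pool:
        parts = list(pool.map(lambda r: fetch_range(*r), ranges))  # map keeps input order
    return b"".join(parts)


# Usage with an in-memory stand-in for the camera (no network involved).
data = bytes(range(256)) * 4
print(byte_ranges(len(data), 3))  # [(0, 341), (342, 682), (683, 1023)]
print(parallel_download(len(data), lambda s, e: data[s:e + 1], part_count=3) == data)  # True
```

Splitting the file this way lets several connections share the transfer, which is the same idea as parallelizing access to parts of the resource.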

Object Tracking

Modern software must use modern technology, and for 360° 3D video one such technology is object tracking. Since the frame contains every direction the object could move in, the obvious solution was to track the object across the entire frame, while the user, who sees only part of it in 3D, «rotated» to follow the object. For this, we used the Vision framework.
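Tracking on the full equirectangular frame means working directly in longitude/latitude pixel coordinates, which has two geometric side effects. The Python sketch below (hypothetical helper names; the 3840-pixel width is only an example) shows both. Horizontal motion has to be measured the short way around the 360° seam, because the left and right edges are the same meridian. An object of fixed angular size is also stretched horizontally by 1/cos(latitude), which grows without bound toward the top and bottom rows.

```python
import math


def wrapped_dx(x_prev: float, x_curr: float, width: float) -> float:
    """Horizontal motion in pixels, taking the short way around the 360° seam."""
    dx = (x_curr - x_prev) % width  # 0 <= dx < width
    if dx > width / 2:
        dx -= width  # moving left across the seam is shorter
    return dx


def horizontal_stretch(latitude_deg: float) -> float:
    """How much wider an object looks in the equirectangular frame than at the equator."""
    return 1.0 / math.cos(math.radians(latitude_deg))


print(wrapped_dx(3830, 10, 3840))  # 20: left the right edge and reappeared on the left
print(wrapped_dx(10, 3830, 3840))  # -20: the same move in the other direction
print(horizontal_stretch(60))      # about 2: twice as wide at 60° latitude
```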

This seemed fine, but the approach had pitfalls. The object could leave one edge of the frame and reappear at the opposite edge (it is the same sphere), so the tracker lost it, and this had to be handled. The tracker also performed poorly near the top and bottom of the frame, where projection distortions grow. In addition, the tracker received a full 4K frame, which seemed excessive in itself. That is why in the next version we switched to a «viewport» tracker: we passed the tracker the picture the user actually sees. The tracked object then always stayed near the center of the frame with minimal distortion, and the tracker's input was much smaller (equal to the screen resolution). This significantly improved tracking.
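With a viewport tracker, the bounding box arrives in viewport coordinates, so following the object reduces to turning the virtual camera toward the box center. A minimal sketch, assuming a rectilinear (pinhole) viewport with known horizontal and vertical fields of view, yaw growing to the right, pitch growing upward, and box centers normalized to [-1, 1] with +y up. If the box comes from Vision's `VNTrackObjectRequest`, whose normalized bounding boxes run from 0 to 1 with the origin at the lower left, then x = 2·midX - 1 and y = 2·midY - 1. Treating yaw and pitch independently is a simplification: it is exact for a level camera when the object lies on one of the viewport's center lines, and only approximate elsewhere.

```python
import math
from typing import Tuple


def recenter(yaw_deg: float, pitch_deg: float, box_center: Tuple[float, float],
             hfov_deg: float, vfov_deg: float) -> Tuple[float, float]:
    """Turn the virtual camera so the tracked box center moves to the viewport center.

    box_center is (x, y) in normalized viewport coordinates in [-1, 1],
    with (0, 0) at the center, +x to the right and +y up.
    """
    x, y = box_center
    # Angular offset of the box center from the view axis in a pinhole viewport.
    d_yaw = math.degrees(math.atan(x * math.tan(math.radians(hfov_deg) / 2)))
    d_pitch = math.degrees(math.atan(y * math.tan(math.radians(vfov_deg) / 2)))
    new_yaw = (yaw_deg + d_yaw + 180.0) % 360.0 - 180.0     # wrap into [-180, 180)
    new_pitch = max(-90.0, min(90.0, pitch_deg + d_pitch))  # don't flip over a pole
    return new_yaw, new_pitch


# Box center at the right edge of a 90° x 60° viewport while looking at yaw 170°:
print(recenter(170.0, 0.0, (1.0, 0.0), 90.0, 60.0))  # yaw wraps past 180° to about -145°
```

Because the correction is reapplied on every frame, residual error from the independent yaw/pitch approximation tends to shrink on the following frames.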

3D Photo

One of the benefits of the 180 mode is the ability to build a depth map from the differences between the two cameras' frames. Taking portrait mode, a common out-of-the-box smartphone feature, as a model, the team decided to implement it themselves. To do this, we had to dig deep into OpenCV's computer vision algorithms.
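The core idea behind a stereo depth map fits in a few lines. For a rectified pair (corresponding points lie on the same row), a point at column x in the left image appears at x - d in the right one, and depth follows from Z = f·B/d, where f is the focal length in pixels and B is the baseline between the lenses. The Python sketch below does naive sum-of-absolute-differences block matching on a single row; the function names, window size, and all numbers are assumptions for illustration. It is not the team's algorithm: OpenCV's `StereoBM` and `StereoSGBM` implement far more robust versions, and fisheye 180° frames would first need to be undistorted and rectified.

```python
from typing import List, Optional


def disparity_row(left: List[int], right: List[int], max_disp: int, half_win: int) -> List[int]:
    """Per-pixel disparity for one rectified row, by SAD block matching."""
    n = len(left)

    def at(row: List[int], i: int) -> int:
        return row[min(max(i, 0), n - 1)]  # clamp indices at the borders

    result = []
    for x in range(n):
        best_d, best_cost = 0, float("inf")
        for d in range(max_disp + 1):
            cost = sum(abs(at(left, x + k) - at(right, x + k - d))
                       for k in range(-half_win, half_win + 1))
            if cost < best_cost:  # strict comparison: ties keep the smaller disparity
                best_d, best_cost = d, cost
        result.append(best_d)
    return result


def depth_from_disparity(d: float, focal_px: float, baseline_m: float) -> Optional[float]:
    """Z = f * B / d; zero disparity means the point is effectively at infinity."""
    return None if d <= 0 else focal_px * baseline_m / d


# Synthetic row: the right view is the left view shifted by 2 pixels.
left = [(i * 37) % 101 for i in range(40)]
right = [left[min(i + 2, 39)] for i in range(40)]
print(disparity_row(left, right, max_disp=4, half_win=2)[6:36])  # all 2 in the interior
print(depth_from_disparity(20, focal_px=1000, baseline_m=0.06))  # about 3.0 (meters)
```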

As with OpenGL, there were practically no ready-made solutions on the internet, and things that seemed to work as expected did not. At a certain point, we decided to lock in the results we had achieved and moved on to using the depth map.

One of the most popular effects built on a depth map is Facebook's 3D Photos. But how could we get our depth map to Facebook? That turned out to be a challenge: Facebook accepted only portraits taken in the iPhone's Portrait mode. It was clear that it was all about metadata, but could we create it? The iOS SDK lets you modify depth data that is already embedded in a photo, but how could we create that metadata from scratch? We had no idea. We had to dig deep into the metadata of portrait photos and figure out what was so magical about it that iOS doesn't let developers create it from scratch. The results of this research into 3D were again exciting enough to be presented at another NIX conference.

Conclusions

As a result, the NIX team created an app with a standout feature: the ability to make 2D video from 3D and 360° footage. The app lets users view videos in Cardboard or Little Planet mode and live-stream to YouTube or Facebook.

This powerful video editor includes many fun features, such as artistic filters and effects, color adjustments, and adding audio.

«This camera is the only camera that shoots 180 and 360 degrees in resolution 5.7. We are satisfied with the result we’ve achieved, but we need to keep working, and actively use every opportunity to make it better...»—Ilya, VP R&D at HumanEyes.