Alice is an experienced full-stack developer who can build the skeleton of a SaaS project in PHP on her favorite framework in a week. For the frontend, she prefers Vue.js.

A client contacts her via Telegram, asking her to develop a website that will be the meeting place for the employer and the employee to conduct an in-person interview. In-person means face-to-face: direct contact in real time with video and voice. “Why not use Skype?” some may ask. It just so happens that serious projects (and each startup undoubtedly considers itself a serious project) try to offer an internal communications service for a variety of reasons, including:

1) They don’t want to lend their users to third-party communicators (Skype, Hangouts, etc.) and want to retain them in the service.

2) They want to monitor their communications, such as call history and interview results.

3) They want to record calls (naturally, notifying both parties about the recording).

4) They don’t want to depend on policies and updates of third-party services. Everyone knows this story: Skype got updated, and everything went up in smoke…

It seems like an easy task. WebRTC comes up when googling on the topic, and it seems like you can organize a peer-to-peer connection between two browsers, but there are still some questions:

1) Where to get STUN/TURN servers?

2) Can we do without them?

3) How to record a peer-to-peer WebRTC call?

4) What will happen if we need to add a third party to the call, for example, an HR manager or another specialist from the employer?

It turns out that WebRTC and peer-to-peer alone are not enough, and it is not clear what to do with all this to launch the required video functions of the service.
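To make questions 1 and 2 more concrete, here is a minimal sketch of where STUN/TURN servers plug into a plain peer-to-peer setup. It builds the standard `RTCConfiguration` object that a browser's `RTCPeerConnection` accepts. The helper name `buildRtcConfig`, the host names, and the credentials are placeholders invented for illustration, not real servers.

```javascript
// Build an RTCConfiguration for a peer-to-peer WebRTC connection.
// All host names and credentials passed in are hypothetical placeholders.
function buildRtcConfig({ stunUrls = [], turn = null } = {}) {
  const iceServers = [];
  if (stunUrls.length > 0) {
    // STUN only helps a peer discover its public address.
    iceServers.push({ urls: stunUrls });
  }
  if (turn) {
    // TURN relays media when a direct path cannot be established.
    iceServers.push({
      urls: turn.urls,
      username: turn.username,
      credential: turn.credential,
    });
  }
  return { iceServers };
}

const config = buildRtcConfig({
  stunUrls: ["stun:stun.example.com:3478"],
  turn: { urls: ["turn:turn.example.com:3478"], username: "alice", credential: "secret" },
});
// In a browser: const pc = new RTCPeerConnection(config);
console.log(JSON.stringify(config, null, 2));
```

Calling `buildRtcConfig()` with no arguments yields an empty `iceServers` list. A connection set up this way generally succeeds only when the two peers can reach each other directly, which is why the question "can we do without them?" usually gets a "no" outside of a local network.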

Article content

- Server and API

- Incoming streams

- Outgoing streams

- Incoming and outgoing

- Incoming stream manipulation

- Incoming stream recording

- Taking a snapshot

- Adding a stream to the mixer

- Stream transcoding

- Adding a watermark

- Adding a FPS filter

- Image rotation by 90, 180, 270 degrees

- Management of incoming streams

- Stream relaying

- Connecting servers to a CDN content processing network

- To summarize

- Links

Server and API

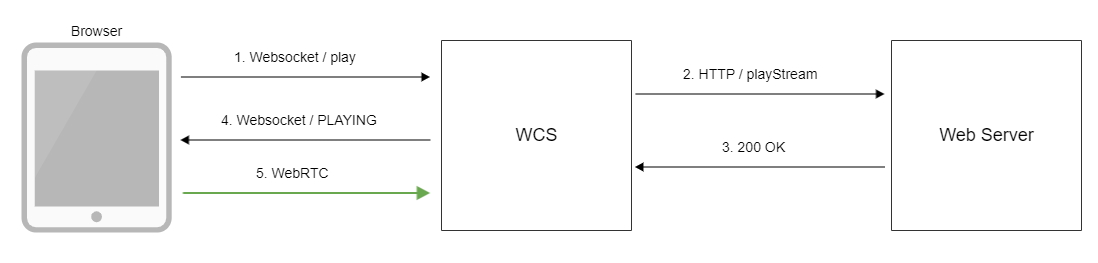

To close these gaps, server solutions and peer-server-peer architecture are used. Web Call Server 5.2 (hereinafter — WCS) is one of the server solutions; it is a development platform that allows you to add such video functions to the project and not worry about STUN/TURN and peer-to-peer connection stability.

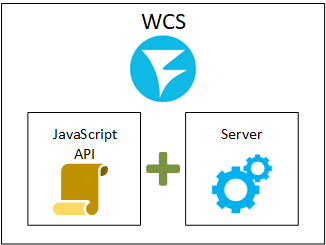

At the highest level, WCS is a JavaScript API plus a server part. The API is used for development in regular JavaScript on the browser side, and the server processes video traffic, acting as a stateful proxy for media traffic.

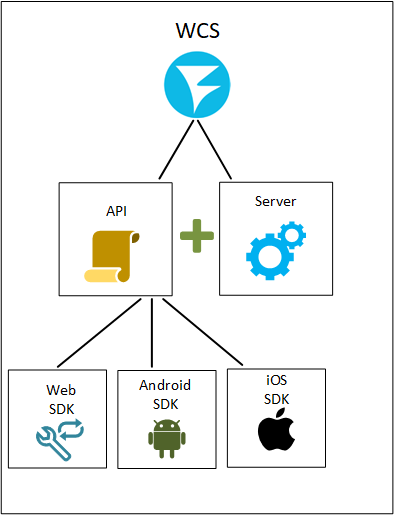

In addition to the JavaScript API, there is also the Android SDK and iOS SDK, which are needed to develop native mobile applications for iOS and Android, respectively.

For example, publishing a stream to the server (streaming from a webcam towards the server) looks like this:

Web SDK

session.createStream({name:"stream123"}).publish();

Android SDK

publishStream = session.createStream(streamOptions);

publishStream.publish();

iOS SDK

FPWCSApi2Stream *stream = [session createStream:options error:&error];

if(![stream publish:&error]) {

//publishing failed, handle the error

}

As a result, we can implement not only a web application, but also full-fledged apps for Google Play and the App Store with video streaming support. If we add the mobile SDKs to the top-level picture, we will get this:

Incoming streams

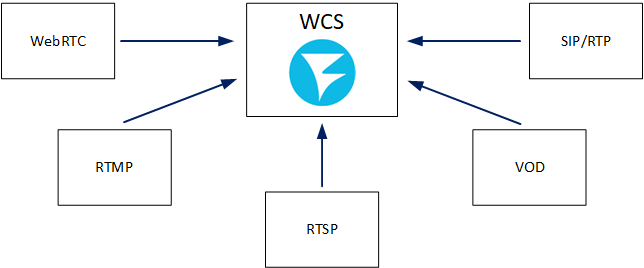

The streaming server, which is WCS, starts with incoming streams. To distribute something, we need to have it. To distribute video streams to viewers, it is necessary that these streams enter the server, go through its RAM, and exit through the network card. Therefore, the first question that we need to ask when familiarizing ourselves with the media server is what protocols and formats the latter uses to accept streams. In the case of WCS, these are the following technologies: WebRTC, RTMP, RTSP, VOD, SIP/RTP.

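Each of the protocols can be used by diverse clients. For example, a WebRTC stream can arrive not only from a browser, but also from another server. The table below lists the possible sources of incoming traffic.

| WebRTC | RTMP | RTSP | VOD | SIP/RTP |
|---|---|---|---|---|
| Browsers (Web SDK), Android SDK, iOS SDK, another WCS server | Encoders (OBS, Wirecast, Adobe Media Encoder, ffmpeg), another media server or WCS server | IP cameras, third-party media servers | mp4 files from the file system | Third-party SIP gateways |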

If we go through the sources of incoming traffic, we can add the following:

Incoming WebRTC

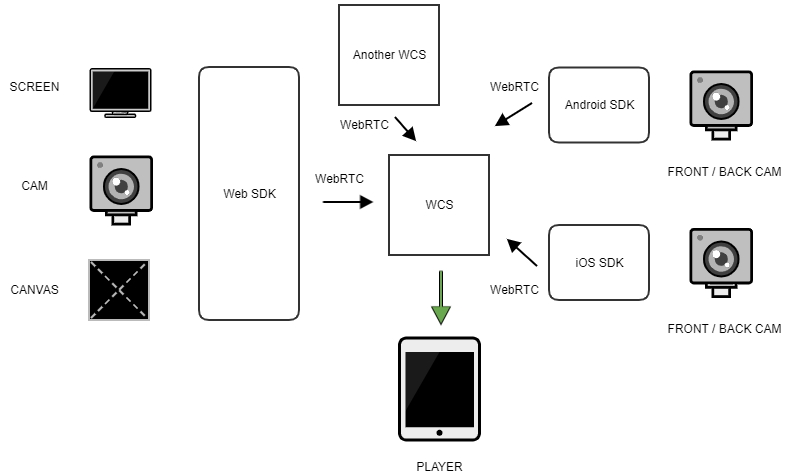

The Web SDK allows not only capturing the camera and microphone, but also using the browser API to capture the screen (screen sharing). In addition, we can capture an arbitrary Canvas element and everything drawn on it for subsequent broadcasting; this is canvas streaming.

Due to the mobile specifics, the Android SDK and iOS SDK have the ability to switch between the front and rear cameras of the device on the go. This allows us to switch the source during streaming without having to pause the stream.

The incoming WebRTC stream can also be obtained from another WCS server using the push, pull and CDN methods, which will be discussed later.

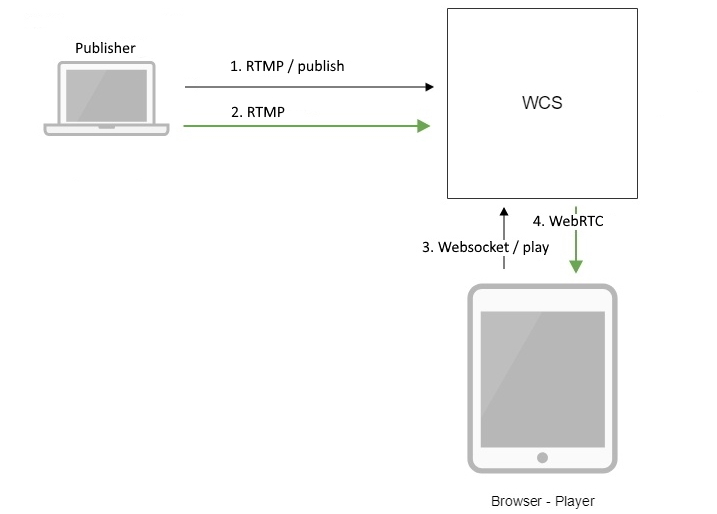

Incoming RTMP

The RTMP protocol is widely used in the streamers’ favorite OBS, as well as in other encoders: Wirecast, Adobe Media Encoder, ffmpeg, etc. Using one of these encoders, we can capture the stream and send it to the server.

We can also pick up an RTMP stream from another media server or WCS server using the push and pull methods. In the case of push, the initiator is the remote server. In the case of pull, we turn to the local server to pull the stream from the remote one.

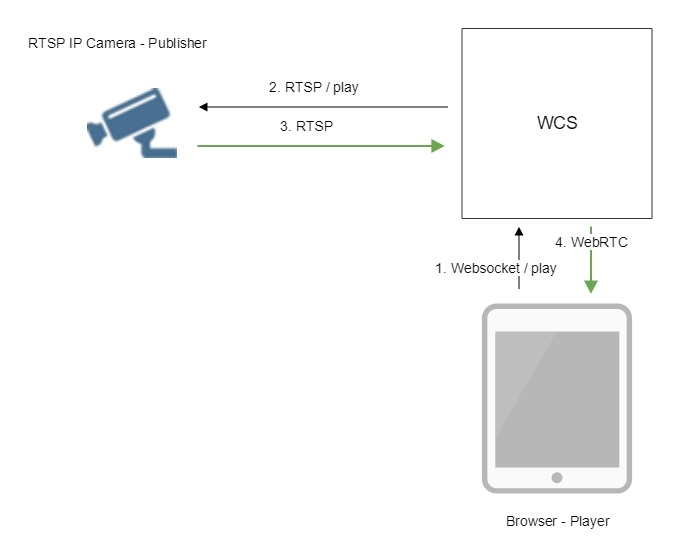

Incoming RTSP

Sources of RTSP traffic are usually IP cameras or third-party media servers that support RTSP protocol. Despite the fact that when initiating an RTSP connection, the initiator is WCS, the audio and video traffic in the direction from the IP camera moves towards the WCS server. Therefore, we consider the stream from the camera to be incoming.

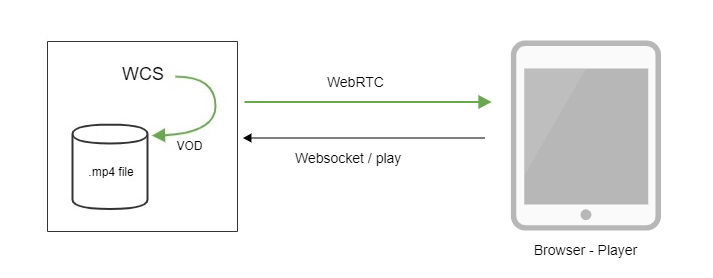

Incoming VOD

At first glance, it might seem that the VOD (Video On Demand) function is exclusively associated with outgoing streams and with the file playback by browsers. But in our case, this is not entirely true. WCS broadcasts an mp4 file from the file system to localhost; as a result, an incoming stream is created, as if it came from a third-party source. Further, if we restrict one viewer to one mp4 file, we get the classic VOD, where the viewer gets the stream and plays it from the very beginning. If we do not restrict one viewer to one mp4 file, we get VOD LIVE – a variation of VOD, in which viewers can play the same file as a stream, connecting to the playback point at which all the others are currently located (pre-recorded television broadcast mode).
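The difference between classic VOD and VOD LIVE comes down to where a new viewer starts inside the file. The sketch below illustrates that rule only. The function name, the mode names, and the clamping at the end of the file are assumptions of this example, not WCS behaviour taken from the documentation.

```javascript
// Decide where a new viewer starts inside an mp4 file, in seconds.
// mode "vod":      every viewer gets a private stream and starts at 0.
// mode "vod-live": all viewers share one stream and join at its current position.
// The mode names and the no-loop behaviour are assumptions of this sketch.
function startOffsetSeconds(mode, secondsSinceStreamStart, fileDurationSeconds) {
  if (mode === "vod") {
    return 0;
  }
  if (mode === "vod-live") {
    // Clamp so a late viewer never lands past the end of the file.
    return Math.min(Math.max(secondsSinceStreamStart, 0), fileDurationSeconds);
  }
  throw new Error("unknown mode: " + mode);
}

console.log(startOffsetSeconds("vod", 95, 600));      // 0
console.log(startOffsetSeconds("vod-live", 95, 600)); // 95
```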

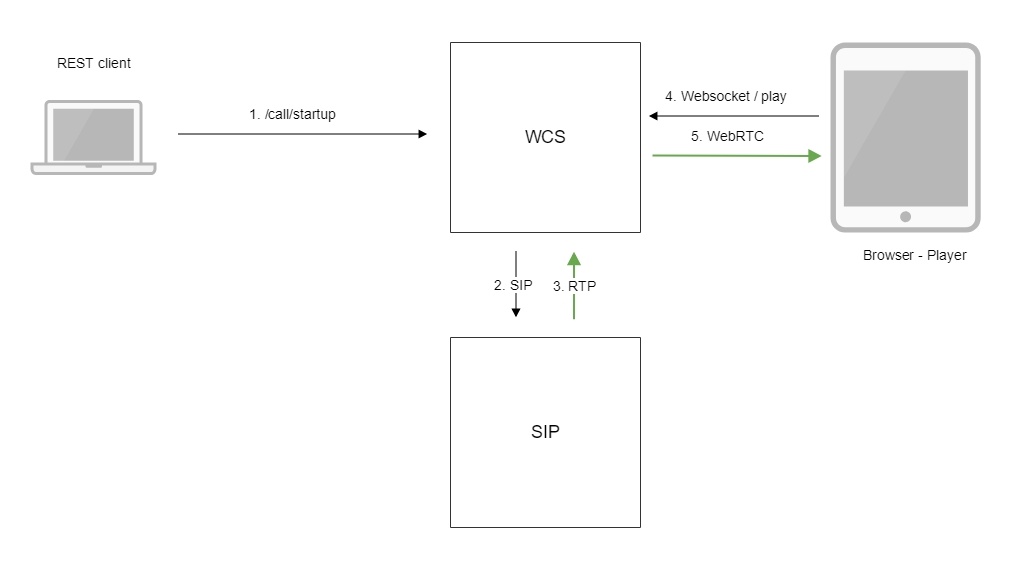

Incoming SIP/RTP

To receive incoming RTP traffic inside a SIP session, we need to set up a call with a third-party SIP gateway. If the connection is successfully established, audio and/or video traffic will flow from the SIP gateway and be wrapped into an incoming stream on the WCS side.

Outgoing streams

After receiving the stream to the server, we can replicate the stream received to one or many viewers on request. The viewer requests a stream from the player or other device. Such streams are called outgoing or “viewer streams,” because sessions of such streams are always initiated on the side of the viewer/player. The set of playback technologies includes the following protocols/formats: WebRTC, RTMP, RTSP, MSE, and HLS.

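| WebRTC | RTMP | RTSP | MSE | HLS |
|---|---|---|---|---|
| Web SDK, Android SDK, iOS SDK, another WCS server | Flash Player, desktop and mobile RTMP applications | RTSP players | Browsers (WebSocket delivery, HTML5 video element) | HLS players in browsers, on mobile devices, on desktops, and on Apple TV |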

Outgoing WebRTC

In this case, the Web SDK, Android SDK and iOS SDK act as the API for the player. An example of playing WebRTC stream looks like this:

Web SDK

session.createStream({name:"stream123"}).play();

Android SDK

playStream = session.createStream(streamOptions);

playStream.play();

iOS SDK

FPWCSApi2Stream *stream = [session createStream:options error:nil];

if(![stream play:&error])

{

//playback failed, handle the error

}

This is very similar to the publishing API, with the only difference being that instead of stream.publish(), stream.play() is called to play.

Another WCS server can also act as the player: it can be instructed to pick up the stream via WebRTC from the first server using the pull method, or to pick up the stream inside a CDN.

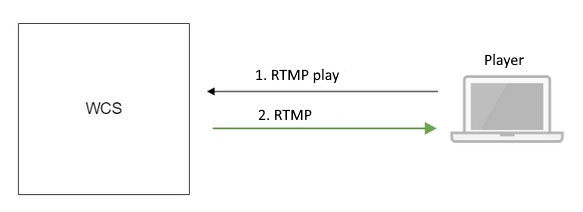

Outgoing RTMP

These are mainly RTMP players: the well-known Flash Player as well as desktop and mobile applications that receive and play an RTMP stream. Although Flash has left the browser, the RTMP protocol is still widely used for video broadcasts, and the lack of native browser support does not prevent the use of this quite successful protocol in other client applications. RTMP is known to be widely used in VR players for mobile applications on Android and iOS.

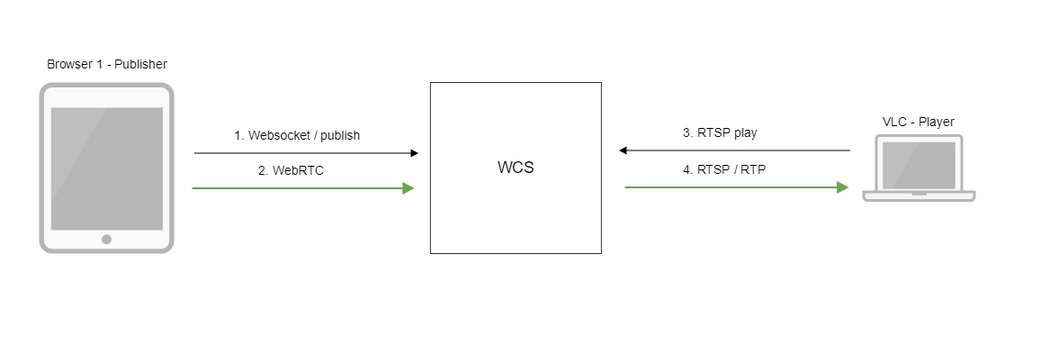

Outgoing RTSP

The WCS server can act as an RTSP server and distribute the received stream via RTSP as a regular IP camera. In this case, the player must establish an RTSP connection with the server and pick up the stream for playback, as if it was an IP camera.

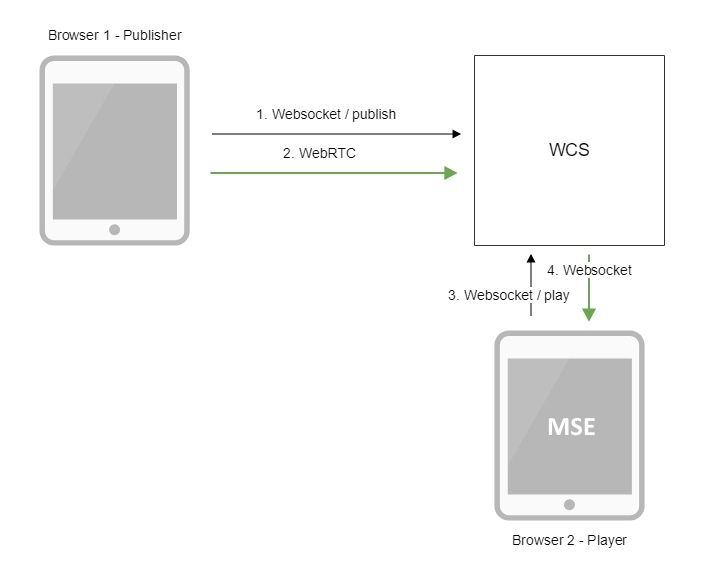

Outgoing MSE

In this case, the player requests a stream from the server using the Websocket protocol. The server distributes audio and video data via web sockets. The data reaches the browser and is converted into chunks that the browser can play thanks to the native MSE extension supported out of the box. The player ultimately works on the basis of the HTML5 video element.

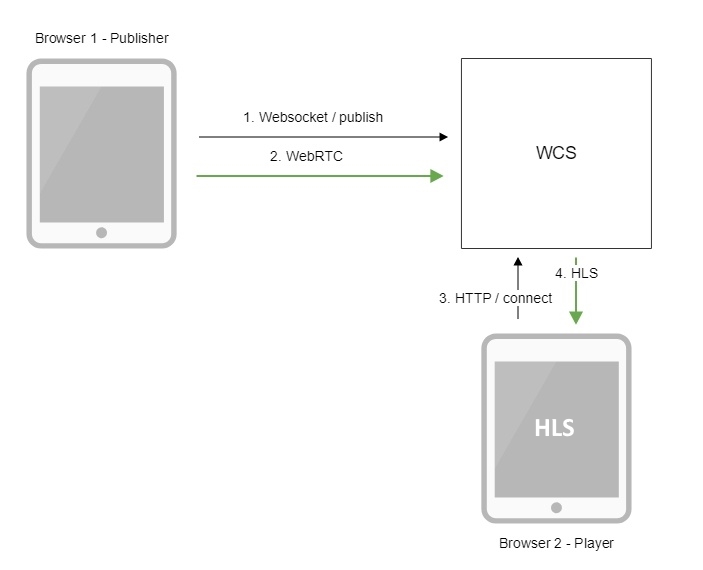

Outgoing HLS

Here, WCS acts as an HLS server, that is, a web server that supports HLS (HTTP Live Streaming). Once the incoming stream appears on the server, an .m3u8 HLS playlist is generated, which is given to the player in response to an HTTP request. The playlist describes which video segments the player should download and display. The player downloads the video segments and plays them on the browser page, on the mobile device, on the desktop, in the Apple TV set-top box, and wherever HLS support is claimed.
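To show what "the playlist describes which video segments the player should download" means in practice, here is a minimal sketch of reading an HLS media playlist. The playlist text and the segment file names are made up for illustration and are not output captured from WCS. The parser handles only the `#EXTINF` tag and segment URI lines.

```javascript
// Extract segment URIs and durations from an HLS media playlist.
// The playlist text below is a made-up example, not output captured from WCS.
function parseMediaPlaylist(text) {
  const segments = [];
  let pendingDuration = null;
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line.startsWith("#EXTINF:")) {
      // "#EXTINF:<duration>,[<title>]" describes the next URI line.
      pendingDuration = parseFloat(line.slice("#EXTINF:".length));
    } else if (line !== "" && !line.startsWith("#")) {
      // Any non-empty line that is not a tag or comment is a segment URI.
      segments.push({ uri: line, duration: pendingDuration });
      pendingDuration = null;
    }
  }
  return segments;
}

const playlist = [
  "#EXTM3U",
  "#EXT-X-VERSION:3",
  "#EXT-X-TARGETDURATION:4",
  "#EXT-X-MEDIA-SEQUENCE:10",
  "#EXTINF:4.0,",
  "stream123_10.ts",
  "#EXTINF:4.0,",
  "stream123_11.ts",
].join("\n");

console.log(parseMediaPlaylist(playlist));
```

For a live stream, a real player re-requests the playlist periodically and downloads only the segments it has not seen yet; that loop is left out here.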

Incoming and outgoing

In total, we have five incoming and five outgoing stream types. They are listed in the table:

| Incoming | Outgoing |
|---|---|
| WebRTC | WebRTC |
| RTMP | RTMP |
| RTSP | RTSP |
| VOD | MSE |
| SIP/RTP | HLS |

That is, we can upload the streams to the server, connect to them, and play them with suitable players. To play a WebRTC stream, use the Web SDK. To play a WebRTC stream as HLS, use an HLS player, etc. One stream can be played by many spectators. One-to-many broadcasts do work.

Now, let us describe what actions can be performed with streams.

Incoming stream manipulation

Outgoing streams that already have viewers are not easily manipulated. Indeed, if the viewer has established a session with the server and is already receiving a stream, there is no way to make any changes to it without breaking the session. For this reason, all manipulations and changes take place on incoming streams, at the point where their replication has not yet occurred. The stream that has undergone changes is then distributed to all connected viewers.

Stream operations include:

— recording

— taking a snapshot

— adding a stream to the mixer

— stream transcoding

— adding a watermark

— adding a FPS filter

— image rotation by 90, 180, 270 degrees

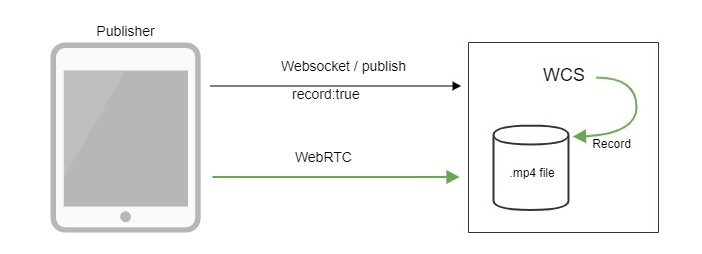

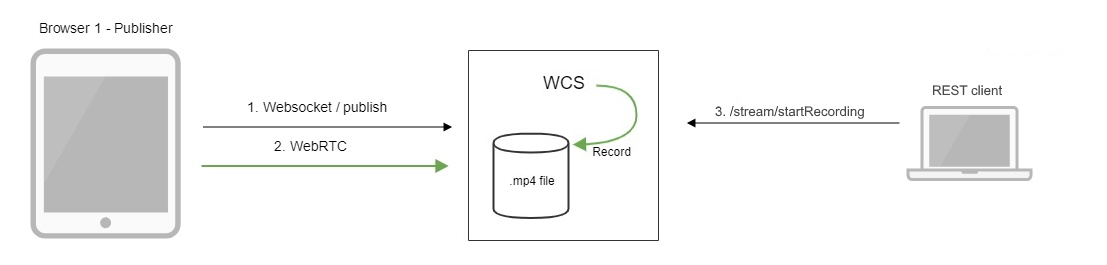

Incoming stream recording

Recording is perhaps the most understandable and frequently needed function. Many use cases require streams to be recorded: webinars, English lessons, consultations, etc.

Recording can be initiated with either the Web SDK or the REST API with a special request:

/stream/startRecording {}

The result is saved in the file system as an mp4 file.
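As a rough illustration of what such a REST request could look like from a backend, the sketch below describes the call as a plain object that `fetch()` could send. The `/rest-api` prefix and the method path come from this article. The helper name, the base URL, the use of POST with a JSON body, and the `mediaSessionId` field are assumptions made for the example and should be checked against the WCS documentation.

```javascript
// Describe a WCS REST call as a plain object that fetch() could send.
// Assumptions: POST with a JSON body, a placeholder base URL, and an
// illustrative body field. The "/rest-api" prefix is taken from the article.
function buildRestCall(baseUrl, method, body = {}) {
  return {
    // Strip trailing slashes so the path is not doubled up.
    url: baseUrl.replace(/\/+$/, "") + "/rest-api" + method,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(body),
    },
  };
}

const call = buildRestCall("https://wcs.example.com:8444/", "/stream/startRecording", {
  mediaSessionId: "hypothetical-session-id", // assumed field name
});
console.log(call.url);
// To actually send it: fetch(call.url, call.options)
```

Keeping the request description separate from the network call makes the helper easy to reuse for the other REST methods mentioned in this article.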

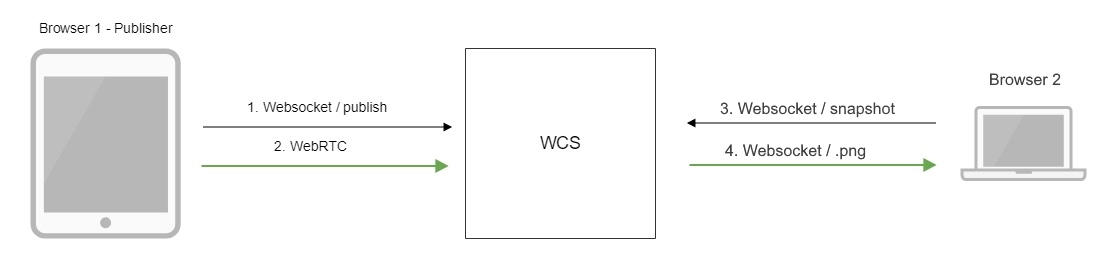

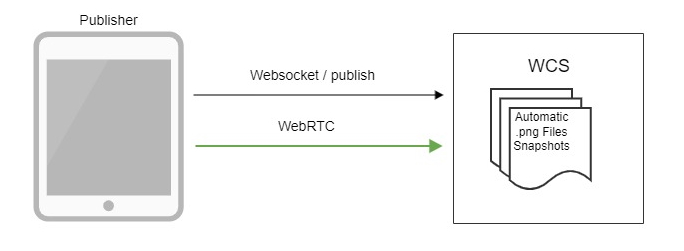

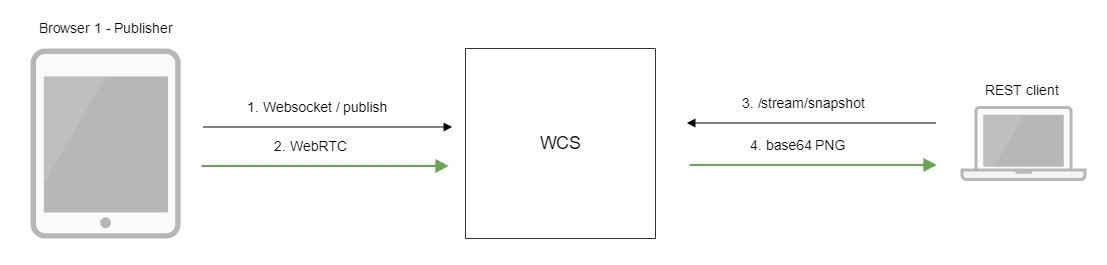

Taking a snapshot

An equally common task is to take snapshots of the current stream to display as thumbnails on the site. For example, we have 50 streams in a video surveillance system, each of which has one IP camera as a source. Displaying all 50 streams on one page is not only a burden on browser resources, but also pointless. At 30 FPS each, the page would have to render 1500 frames per second in total, far more than a viewer can take in. As a solution, we can configure automatic slicing or on-demand snapshots; the site can then display images at an arbitrary rate, for example, 1 frame every 10 seconds. Snapshots can be taken from the SDK, through the REST API, or sliced automatically.
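The arithmetic behind this example is simple enough to write down. The sketch below compares the total frame rate of a page showing live streams with the same page showing periodic snapshots; the function names are illustrative.

```javascript
// Total frames per second a page must render when showing live streams.
function liveFramesPerSecond(streamCount, framesPerSecondEach) {
  return streamCount * framesPerSecondEach;
}

// Total frames per second when each stream shows one snapshot per interval.
function snapshotFramesPerSecond(streamCount, intervalSeconds) {
  return streamCount / intervalSeconds;
}

console.log(liveFramesPerSecond(50, 30));     // 1500
console.log(snapshotFramesPerSecond(50, 10)); // 5
```

With one snapshot every 10 seconds, the same 50 cameras produce 5 image updates per second instead of 1500 video frames.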

The WCS server supports the REST method for receiving snapshots:

/stream/snapshot

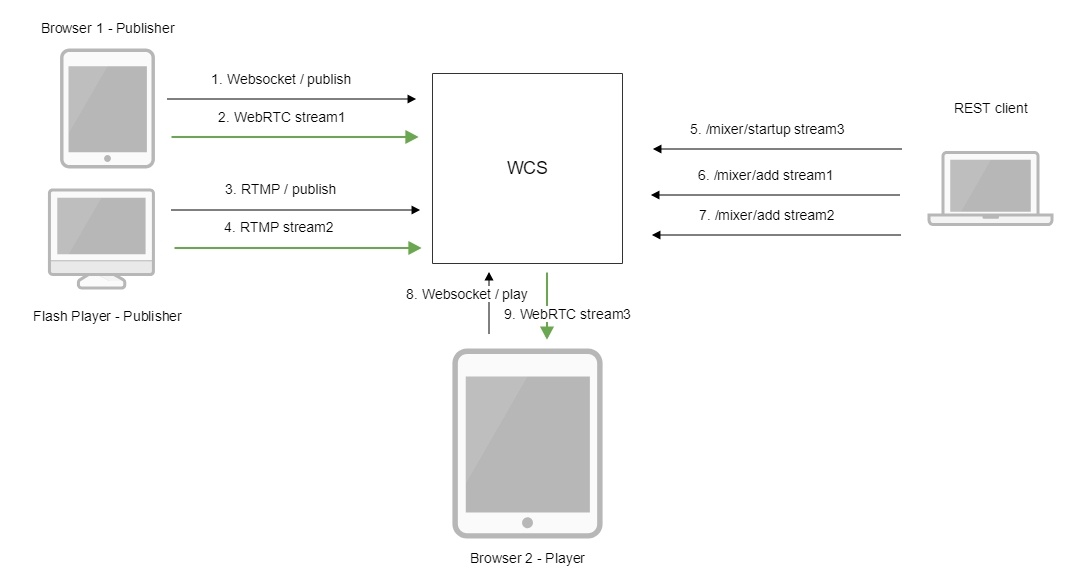

Adding a stream to the mixer

An image from two or more sources can be combined into one for display to end viewers. This procedure is called mixing. Basic examples: 1) Video surveillance from multiple cameras on the screen in one picture. 2) Videoconference, where each user receives one stream, in which the rest are mixed, to save resources. The mixer is controlled via the REST API and has an MCU mode of operation for creating video conferences.

REST command to add a stream to the mixer:

/mixer/startup

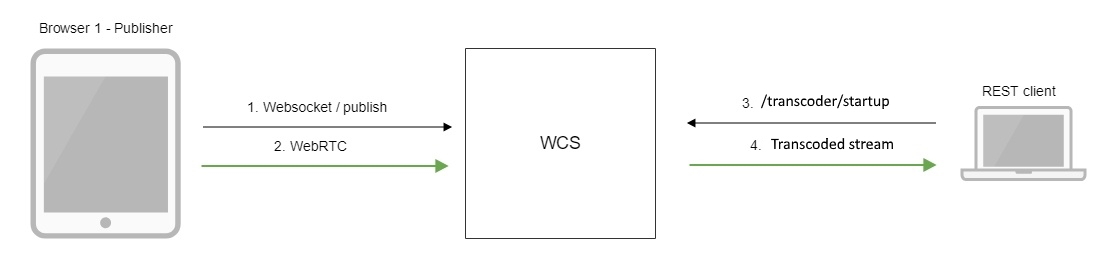

Stream transcoding

Streams sometimes need to be compressed in order to adapt for certain groups of client devices by resolution and bit rate. For this, transcoding is used. Transcoding can be enabled on the Web SDK side, through the REST API, or automatically through a special transcoding node in the CDN. For example, a video of 1280x720 can be transcoded to 640x360 for distribution to customers from a geographic region with a traditionally low bandwidth. Where are your satellites, Elon Musk?

Used REST method:

/transcoder/startup
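The 1280x720 to 640x360 example keeps the aspect ratio while halving both dimensions. A minimal sketch of that calculation follows; the function name is illustrative, and rounding the width to an even number is an assumption based on the fact that many video encoders require even dimensions.

```javascript
// Scale a resolution down to a target height, keeping the aspect ratio.
// The width is rounded to the nearest even number (an assumption of this
// sketch, since many video encoders require even dimensions).
function scaleToHeight(width, height, targetHeight) {
  const scaledWidth = Math.round((width * targetHeight) / height / 2) * 2;
  return { width: scaledWidth, height: targetHeight };
}

console.log(scaleToHeight(1280, 720, 360)); // { width: 640, height: 360 }
```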

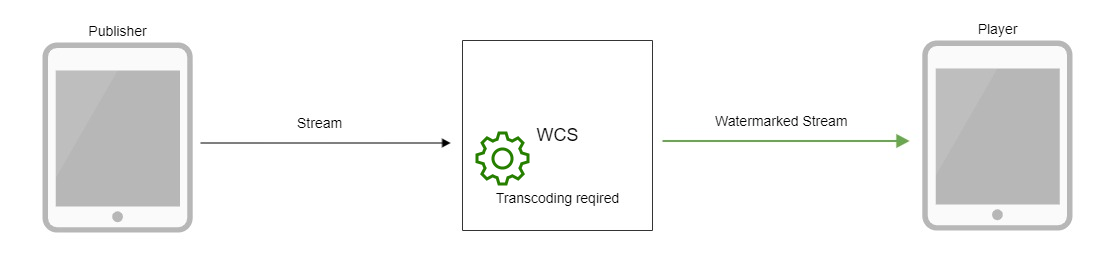

Adding a watermark

It is known that any content can be stolen and turned into a WebRip, no matter what protection the player has. If your content is valuable, you can embed a watermark or logo into it, which greatly complicates its further use and public display. To add a watermark, just upload a PNG image, and it will be inserted into the video stream by transcoding. Therefore, you will have to set aside a couple of CPU cores on the server side if you decide to add a watermark to the stream. To avoid server-side transcoding, it is better to add the watermark directly on the encoder/streamer, which often provides such an option.

Adding a FPS filter

In some cases, the stream is required to have an even FPS (frames per second). This may come in handy if we re-stream it to a third-party resource like YouTube or Facebook or play it with a sensitive HLS player. Filtering also requires transcoding, so make sure to properly assess the power of your server and set aside 2 cores per stream if such an operation is planned.
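To illustrate what "even FPS" means, the sketch below resamples frames that arrive at uneven intervals onto a fixed time grid, duplicating or dropping frames as needed. It is a conceptual example only and makes no claim about how the WCS filter works internally; the function name and the sample timestamps are invented.

```javascript
// Resample frames with uneven timestamps (milliseconds) to an even frame rate.
// For each output tick, reuse the latest input frame at or before that tick.
// This is a conceptual sketch, not how WCS implements its FPS filter.
function resampleToEvenFps(timestampsMs, fps) {
  if (timestampsMs.length === 0) return [];
  const intervalMs = 1000 / fps;
  const first = timestampsMs[0];
  const last = timestampsMs[timestampsMs.length - 1];
  const output = [];
  let sourceIndex = 0;
  for (let tick = first; tick <= last; tick += intervalMs) {
    // Advance to the newest source frame that is not later than this tick.
    while (sourceIndex + 1 < timestampsMs.length && timestampsMs[sourceIndex + 1] <= tick) {
      sourceIndex++;
    }
    output.push({ outputMs: tick, sourceMs: timestampsMs[sourceIndex] });
  }
  return output;
}

const uneven = [0, 30, 90, 100, 210, 300];
console.log(resampleToEvenFps(uneven, 10));
```

In this run the frames at 30 ms, 90 ms and 210 ms are dropped and the frame at 100 ms is shown twice, so the output has exactly one frame every 100 ms.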

Image rotation by 90, 180, 270 degrees

Mobile devices can change the resolution of the published stream depending on the angle of rotation. For example, you started to stream holding the iPhone horizontally, and then rotated it. According to the WebRTC specification, the streamer's mobile browser (in this case iOS Safari) should signal the rotation to the server. In turn, the server must send this event to all subscribers. Otherwise, the streamer would turn the phone on its side and still see the camera image upright, while the viewers would see a rotated image. To work with rotations on the SDK side, the corresponding cvoExtension extension is included.
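On the viewer side, handling a rotation event boils down to normalizing the angle and swapping width and height for quarter turns. The sketch below shows that calculation only; the function name is illustrative, and the way the rotation value is delivered to the player is not covered here.

```javascript
// Normalize a rotation angle to 0, 90, 180 or 270 degrees and compute
// the size at which the viewer should display the picture.
function displaySize(width, height, rotationDegrees) {
  const rotation = ((rotationDegrees % 360) + 360) % 360;
  if (![0, 90, 180, 270].includes(rotation)) {
    throw new Error("rotation must be a multiple of 90 degrees");
  }
  // Quarter turns swap the picture's width and height.
  const swap = rotation === 90 || rotation === 270;
  return {
    rotation,
    width: swap ? height : width,
    height: swap ? width : height,
  };
}

console.log(displaySize(1280, 720, 90));  // { rotation: 90, width: 720, height: 1280 }
console.log(displaySize(1280, 720, -90)); // { rotation: 270, width: 720, height: 1280 }
```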

Management of incoming streams

The table shows how each stream operation can be controlled. "Automatic" means that the configuration is usually set on the server side in the settings.

| Stream action | Web, iOS, Android SDK | REST API | Automatic | CDN |
|---|---|---|---|---|
| Recording | + | + | | |
| Taking a snapshot | + | + | + | |
| Adding to the mixer | + | + | | |
| Stream transcoding | + | + | | + |
| Adding a watermark | | | + | |
| Adding an FPS filter | | | + | |
| Image rotation by 90, 180, 270 degrees | + | | | |

Stream relaying

Relaying is another way to manipulate streams entering the server; it consists of pushing the stream to a third-party server. Relaying is also known as republishing, push, or inject.

Relaying can be implemented using one of the following protocols: WebRTC, RTMP, SIP/RTP. The table shows the direction in which the stream can be relayed.

| WebRTC | RTMP | SIP/RTP |
|---|---|---|
| WCS | RTMP server, WCS | SIP server |

WebRTC relaying

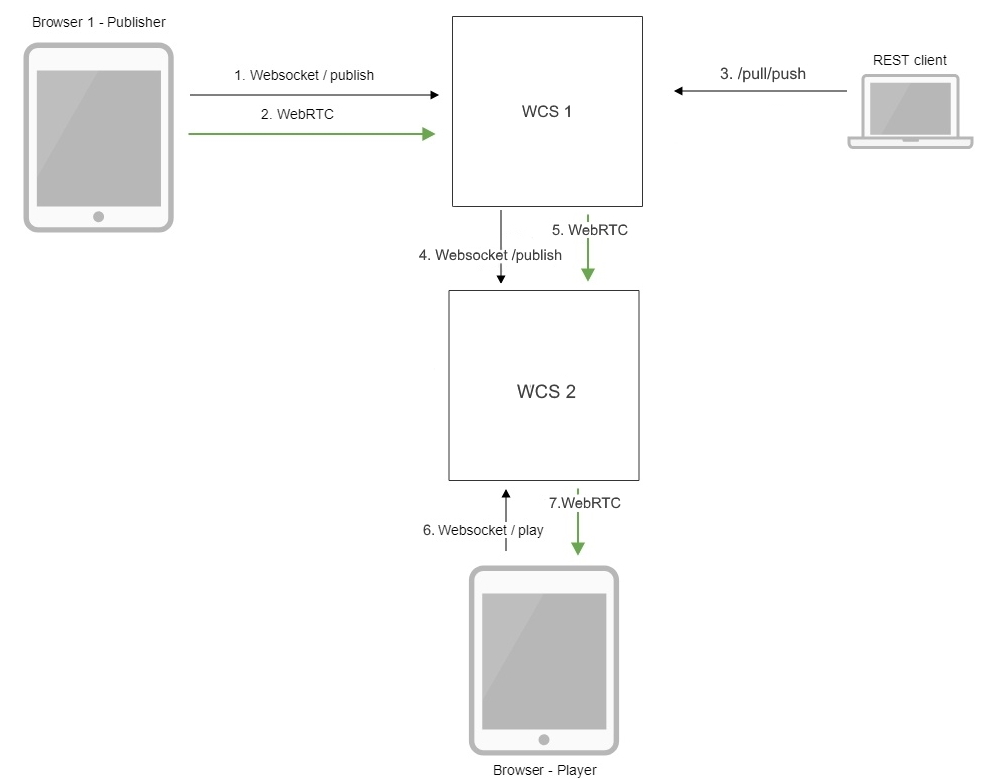

A stream can be relayed to another WCS server if for some reason it is required to make the stream available on another server. Relaying is done through the REST API via /push method. Upon receipt of such a REST request, WCS connects to the specified server and publishes a server-server stream to it. After that, the stream becomes available for playback on another machine.

/pull/push — used REST method

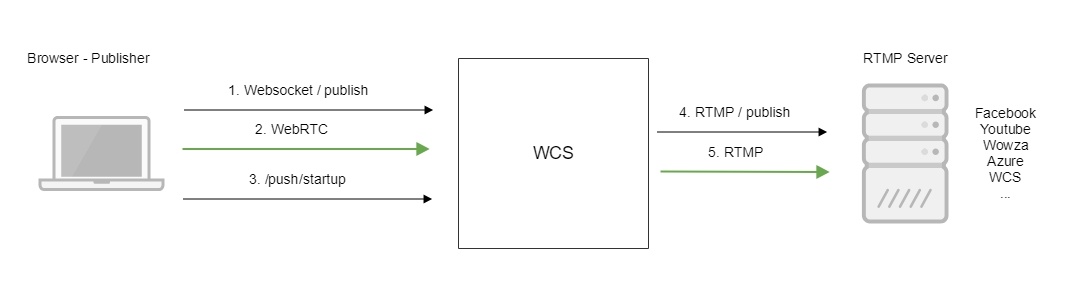

RTMP relaying

As with WebRTC relaying, RTMP relaying to another server is also possible. The only difference is the relay protocol. RTMP relaying is also performed via /push and allows transferring the stream both to third-party RTMP servers and to services supporting RTMP ingest: YouTube, Facebook streaming, etc. Thus, a WebRTC stream can be relayed to RTMP. We can just as well relay any other stream entering the server, for example RTSP or VOD, to RTMP.

The video stream is relayed to another RTMP server using REST calls.

/push/startup — used REST call

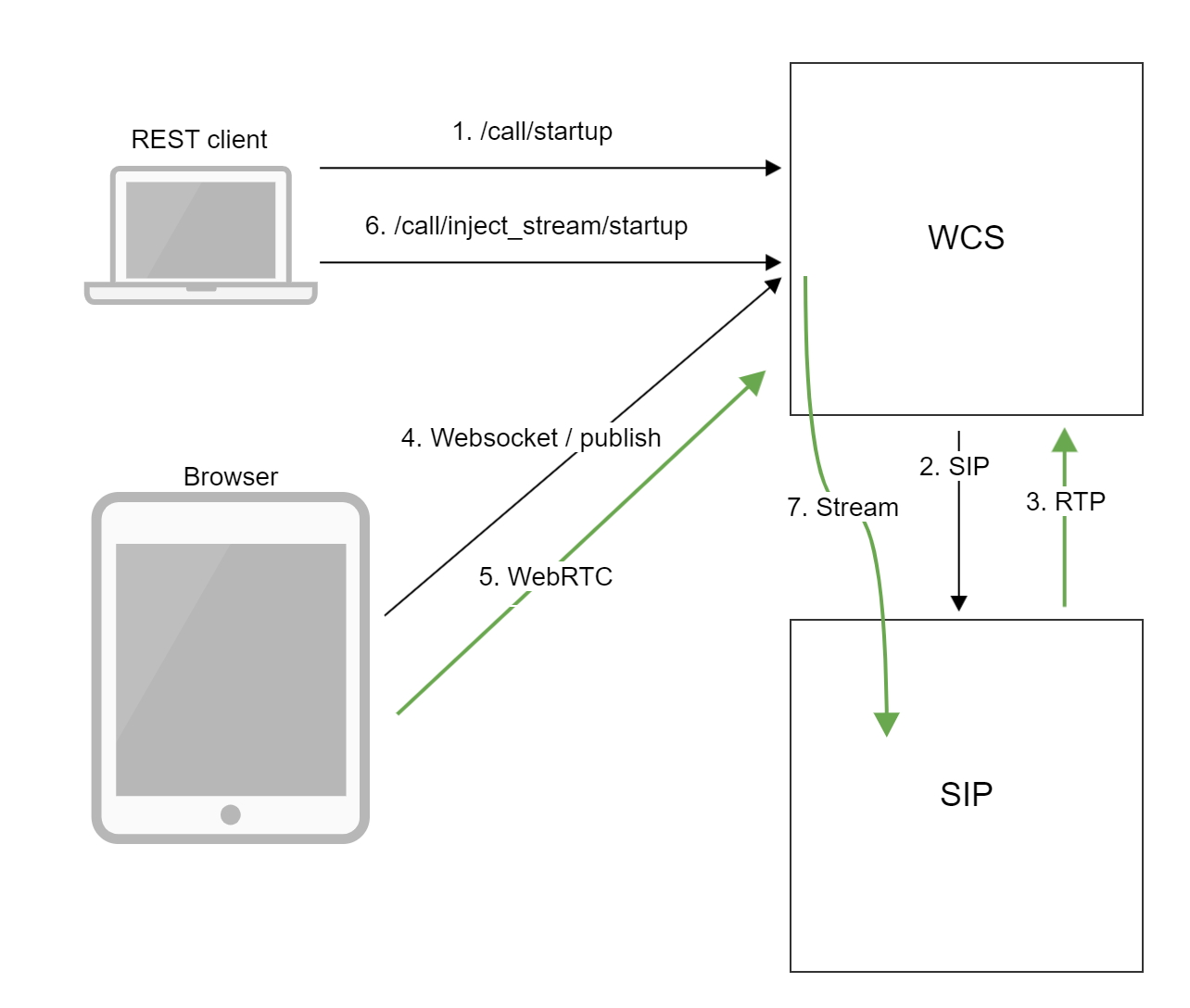

SIP/RTP relaying

This function is rarely used, mostly in enterprise settings. For example, we may need to establish a SIP call with an external SIP conference server and redirect an audio or video stream into this call so that the conference audience sees some video content: “Please watch this video” or “Colleagues, now let's watch an IP camera stream from the construction site”. Keep in mind that in this case the conference itself exists and is managed on an external video conferencing server with SIP support (we have recently tested a solution from Polycom DMA), whereas we just connect and relay the existing stream to this server. The REST API function is called /inject and serves just this case.

REST API command:

/call/inject_stream/startup

Connecting servers to a CDN content processing network

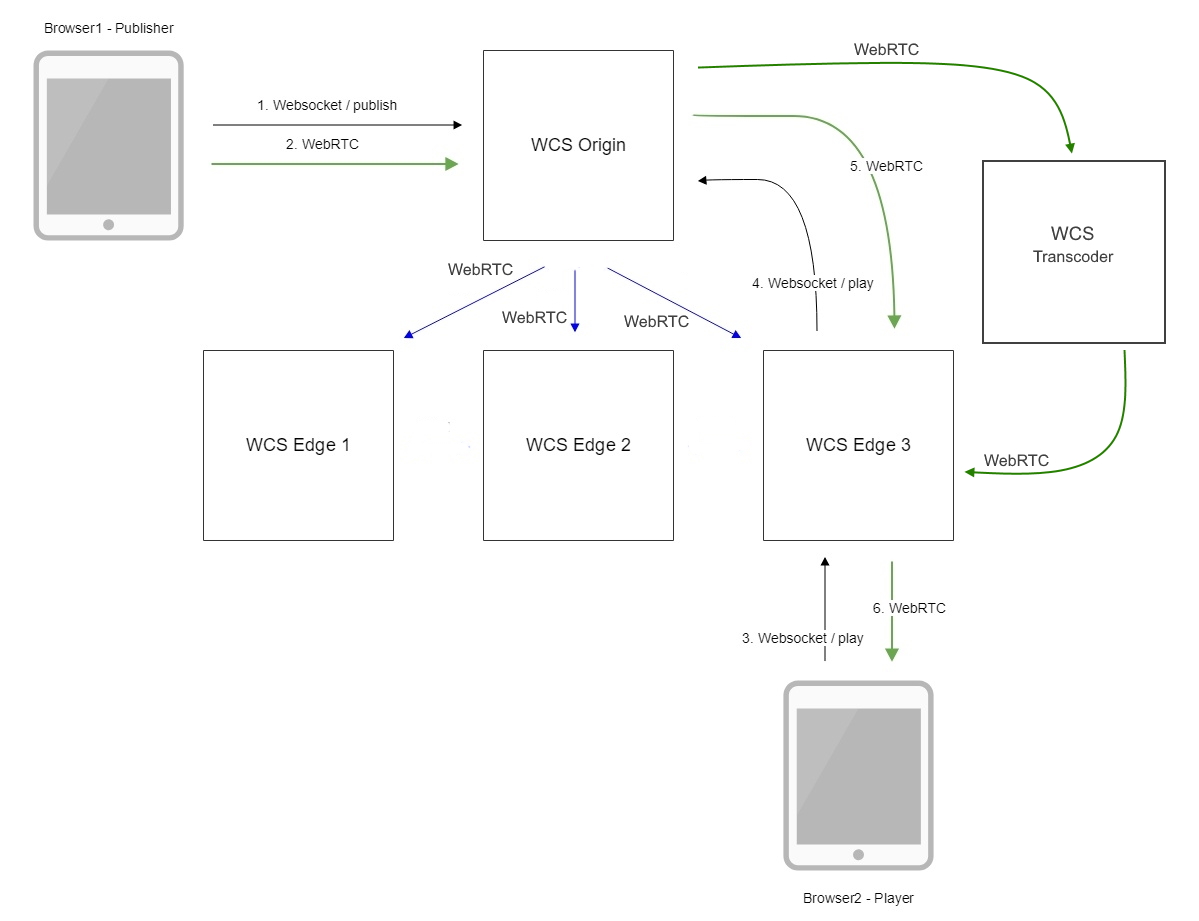

Usually, one server has a limited amount of resources. Therefore, large online broadcasts with audiences in the thousands or tens of thousands require scaling. Several WCS servers can be combined into one content delivery network (CDN). Internally, the CDN works over WebRTC to maintain low latency during streaming.

The server can be configured in one of the following roles: Origin, Edge, or Transcoder. Origin type servers receive traffic and distribute it to the Edge servers, which are responsible for delivering the stream to viewers. If it is necessary to prepare a stream in several resolutions, Transcoder nodes are included in the scheme, which take on the resource-consuming mission of transcoding streams.
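The division of labour between the three roles can be sketched as a small routing function that picks the chain of nodes a stream passes through on its way to a viewer. The node names, the data shape, and the "least loaded Edge" rule are assumptions of this example; the actual CDN selects and connects nodes according to its own configuration.

```javascript
// Pick the chain of CDN nodes a stream travels through to reach a viewer.
// Node names and the "least loaded Edge" rule are assumptions of this sketch.
function planRoute(nodes, needsTranscoding) {
  const byRole = (role) => nodes.filter((n) => n.role === role);
  const origin = byRole("origin")[0];
  const edges = byRole("edge");
  if (!origin || edges.length === 0) {
    throw new Error("a CDN needs at least one Origin and one Edge");
  }
  // Choose the Edge that currently serves the fewest viewers.
  const edge = edges.reduce((best, n) => (n.viewers < best.viewers ? n : best));
  const route = [origin.name];
  if (needsTranscoding) {
    const transcoder = byRole("transcoder")[0];
    if (!transcoder) throw new Error("no Transcoder node available");
    route.push(transcoder.name);
  }
  route.push(edge.name);
  return route;
}

const cdn = [
  { name: "origin-1", role: "origin", viewers: 0 },
  { name: "transcoder-1", role: "transcoder", viewers: 0 },
  { name: "edge-1", role: "edge", viewers: 120 },
  { name: "edge-2", role: "edge", viewers: 45 },
];
console.log(planRoute(cdn, false)); // [ 'origin-1', 'edge-2' ]
console.log(planRoute(cdn, true));  // [ 'origin-1', 'transcoder-1', 'edge-2' ]
```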

To summarize

WCS 5.2 is a server for developing applications with real-time audio and video support for browsers and mobile devices. Four APIs are provided for development: Web SDK, iOS SDK, Android SDK, REST API. We can publish (feed) video streams to the server using five protocols: WebRTC, RTMP, RTSP, VOD, SIP/RTP. From the server, we can play streams with players using five protocols: WebRTC, RTMP, RTSP, MSE, HLS. Streams can be controlled and undergo such operations as recording, slicing snapshots, mixing, transcoding, adding a watermark, filtering FPS, and signaling video rotation on mobile devices. Streams can be relayed to other servers via WebRTC and RTMP protocols, as well as redirected to SIP conferences. Servers can be combined into a content delivery network and scaled to process an arbitrary number of video streams.

What Alice must know to work with the server

The developer needs to be able to use Linux. The following commands in the command line should not cause confusion:

tar -xvzf wcs5.2.tar.gz

cd wcs5.2

./install.sh

tail -f flashphoner.log

ps aux | grep WebCallServer

top

One also needs to know vanilla JavaScript when it comes to web development.

//publishing the stream

session.createStream({name:'mystream'}).publish();

//playing the stream

session.createStream({name:'mystream'}).play();

The ability to work with the back-end may also come in handy.

WCS can not only receive control commands through the REST API, but also send hooks, i.e. notifications about events that occur on it. For example, when a browser or mobile application tries to establish a connection, WCS will trigger the /connect hook, and when it tries to play a stream, WCS will trigger the playStream hook. Therefore, the developer will have to walk a little in the shoes of a back-end developer, who is able to create both a simple REST client and a small REST server for processing hooks.

REST API example

/rest-api/stream/find_all — example of REST API for listing streams on the server

REST hook example

https://myback-end.com/hook/connect - REST hook /connect processing on the backend side.
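The backend side of a hook can be as small as one function that inspects the request and decides whether to allow the operation. The sketch below assumes that hooks arrive as JSON, that HTTP 200 means "allow" and HTTP 403 means "reject", and that the connection carries a `token` field. All three are assumptions of this example and should be verified against the WCS documentation. The `/hook/connect` path mirrors the URL above.

```javascript
// Decide how a backend answers a WCS REST hook.
// Assumptions of this sketch: hooks arrive as JSON, HTTP 200 means "allow"
// and HTTP 403 means "reject"; the "token" field is hypothetical.
function handleHook(path, body, allowedTokens) {
  if (path === "/hook/connect") {
    const allowed = allowedTokens.has(body.token);
    return { status: allowed ? 200 : 403, body: allowed ? body : {} };
  }
  // Hooks this sketch does not know about are accepted unchanged.
  return { status: 200, body };
}

const knownTokens = new Set(["token-for-alice"]);
console.log(handleHook("/hook/connect", { token: "token-for-alice" }, knownTokens).status); // 200
console.log(handleHook("/hook/connect", { token: "unknown" }, knownTokens).status);         // 403
```

Because the decision logic is a pure function, it can be wired into any HTTP framework the backend already uses and tested without starting a server.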

Linux, JavaScript, REST Client / Server — three elements that are enough to develop a production service on the WCS platform working with video streams.

Developing mobile applications will require knowledge of Java and Objective-C for Android and iOS, respectively.

Installation and launch

There are three ways to quickly launch WCS today:

1) Install WCS on your own server running CentOS 7, Ubuntu 16.x LTS, Ubuntu 18.x LTS, etc., following the installation article from the documentation.

or

2) Launch a ready-made image on Amazon EC2.

or

3) Launch a ready-made server image on DigitalOcean.

And start an exciting project development with streaming video features.

This review article turned out to be quite long. Thank you for having the patience to read it.

Happy streaming!

Links

WCS 5.2 - WebRTC server

Installation and launch

Installation and launch WCS

Launch ready-made image on Amazon EC2

Launch server ready-made image on DigitalOcean

SDK

Documentation Web SDK

Documentation Android SDK

Documentation iOS SDK

Cases

Incoming streams

Outgoing streams

Management of streams

Stream relaying

CDN for low latency WebRTC streaming