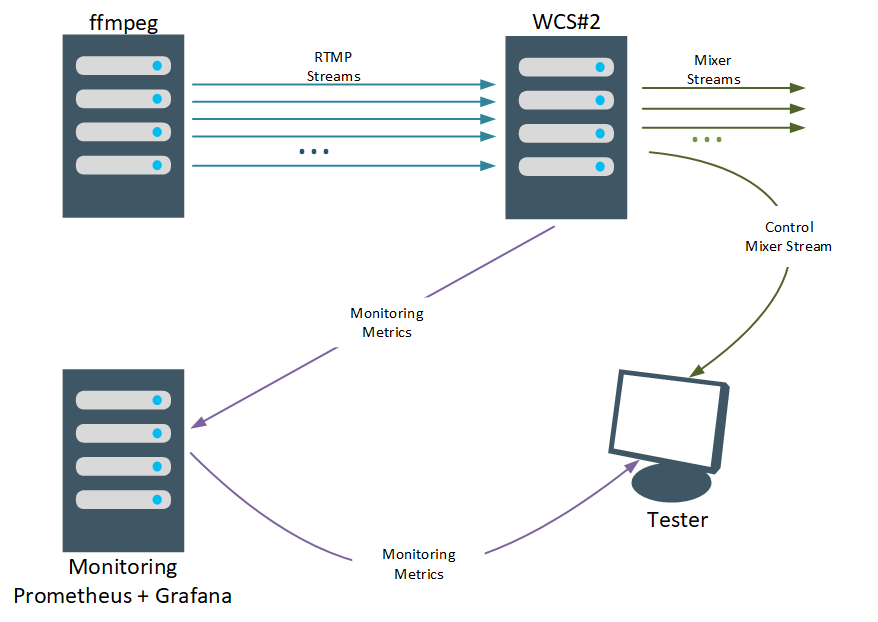

This article is a continuation of our series of write-ups about load tests for our server. We have already discussed how to compile metrics and how to use them to choose the equipment, and we also provided an overview of various load testing methods. Today we shall look at how the server handles stream mixing. There are many applications and systems, such as chatrooms, systems for video conferences and webinars, and small surveillance systems, that operate on the basis of mixers, which is why it is safe to say that stream mixing is an extremely popular tool.

So, how many mixers can there be on a server? In theory, there are no limits aside from common sense and the server hardware: a server can have any number of mixers, and a mixer can have any number of streams.

Let us test this and experimentally find out the optimal number of mixers and streams.

A little bit of math

We begin by using Iperf to test the bandwidth of the channel connecting the servers. In our case, the bandwidth is 8.7 Gbps.

In this experiment we publish 720p streams. One such stream takes up approximately 2 Mbps of the channel. For the quality to remain stable, the channel load shouldn't exceed 80% of its capacity. In theory, the measured 8.7 Gbps leaves room for about 3,480 such streams with no drop in quality (8,700 Mbps × 0.8 / 2 Mbps); at the nominal 10 Gbps of the interface the figure would be 4,000.
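The estimate can be reproduced with a few lines of shell arithmetic. This is only a sketch: the function name `max_streams` and its argument order are made up for illustration, and it assumes a flat 2 Mbps per stream with no protocol overhead.

```shell
#!/bin/bash
# max_streams LINK_MBPS STREAM_MBPS UTILIZATION_PERCENT
# Integer estimate of how many streams fit into the usable share of a link.
# Hypothetical helper, not part of WCS.
max_streams() {
    local link_mbps=$1 stream_mbps=$2 util_percent=$3
    echo $(( link_mbps * util_percent / 100 / stream_mbps ))
}

max_streams 8700 2 80     # measured channel: prints 3480
max_streams 10000 2 80    # nominal 10 Gbps interface: prints 4000
```

The first call uses the bandwidth measured with Iperf, the second the nominal speed of the network interface.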

The test server has the following specifications:

2 x Intel(R) Xeon(R) CPU E5-2696 v2 @ 2.50GHz (12 cores, 24 hyper-threads);

128 GB RAM;

10 Gbps network interface.

Mixers rely on transcoding, so the CPU is the resource to plan for. The recommended rule is two CPU cores per mixer. Counting each of the 48 hardware threads as a core, our server can support 24 mixers in theory.
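As a quick sanity check of that planning rule, the sketch below derives the theoretical mixer count from the thread count. The function name is hypothetical, and it assumes that each hardware thread is counted as a core, as the 48-thread figure implies.

```shell
#!/bin/bash
# mixers_for_threads THREADS [THREADS_PER_MIXER]
# Theoretical number of video mixers under the "two cores per mixer" rule.
# Hypothetical helper for illustration only.
mixers_for_threads() {
    local threads=$1 threads_per_mixer=${2:-2}
    echo $(( threads / threads_per_mixer ))
}

mixers_for_threads 48    # prints 24
```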

For the tests, we will use a 720p video. Its properties are as follows:

Preparing the servers

An installed and configured WCS is a prerequisite for the testing. Presumably, you already have it installed. If you don't, please install it following this guide.

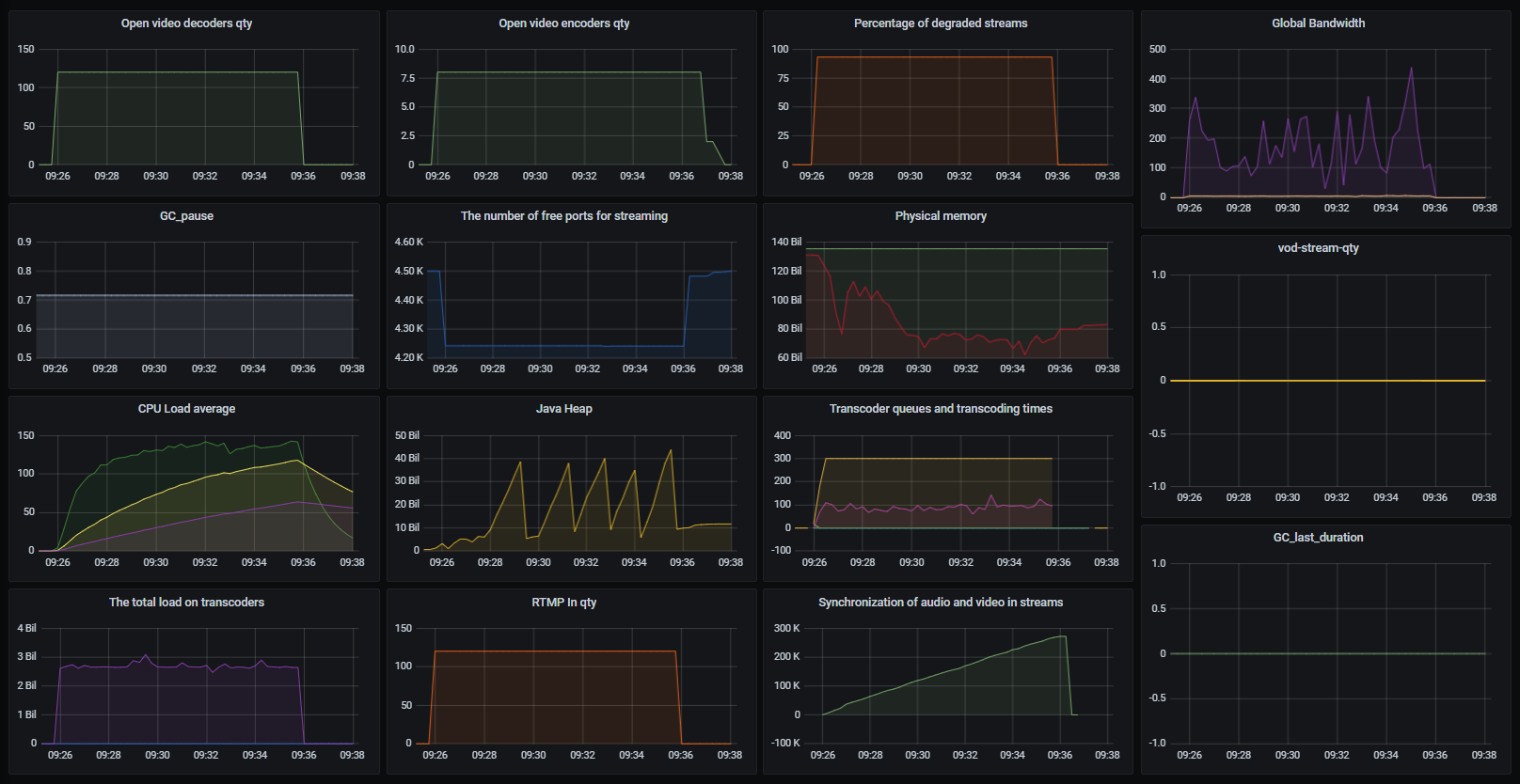

Prepare the server for testing and enable monitoring via Prometheus+Grafana as per this guide, with a few differences:

For the sake of clarity, let's delineate the graphs of open decoders and encoders. The number of decoders = the number of streams in the mixers. The number of encoders = the number of mixers. The metrics for Grafana are as follows:

    native_resources{instance="demo.flashphoner.com:8081", job="flashphoner", param="native_resources.video_decoders"}

and

    native_resources{instance="demo.flashphoner.com:8081", job="flashphoner", param="native_resources.video_encoders"}
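Those two relations give the values the decoder and encoder graphs should settle at for a given test. A minimal sketch, using a hypothetical helper name and assuming every mixer holds the same number of streams:

```shell
#!/bin/bash
# expected_codecs MIXERS STREAMS_PER_MIXER
# Decoders = total streams inside mixers, encoders = number of mixers.
# Hypothetical helper, useful for cross-checking the Grafana panels.
expected_codecs() {
    local mixers=$1 streams_per_mixer=$2
    echo "decoders=$(( mixers * streams_per_mixer )) encoders=$mixers"
}

expected_codecs 5 15    # prints decoders=75 encoders=5
expected_codecs 21 2    # prints decoders=42 encoders=21
```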

In the first test we count the incoming RTMP streams rather than the outgoing WebRTC ones. The metric for Grafana is as follows:

    streams_stats{instance="demo.flashphoner.com:8081", job="flashphoner", param="streams_rtmp_in"}

For the second test we need to count the VOD streams on the WCS server. At the time of writing, this information is only available through a REST request, so we have to extend the script that collects custom metrics.

Let's add the following commands to the "custom_stats.sh" script:

    curl -X POST -H "Content-Type: application/json" http://localhost:8081/rest-api/vod/find_all > vod.txt
    vod_stream=$(head -n 1 vod.txt | grep -o "localMediaSessionId" | wc -l)
    echo "vod-stream-qty=$vod_stream"

Here we use a REST request to receive data on the VOD streams on the server and calculate their number from the number of open media sessions. The number of VOD streams is displayed on the stats page under the "vod-stream-qty" variable, which is then recorded by Prometheus. You can find a sample "custom_stats.sh" file under "Useful files" at the end of the article.
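The counting step can be tried without a running server by feeding it a saved response. The sketch below is an assumption-laden illustration: `count_vod_streams` is a hypothetical helper, the sample JSON is made up and contains only the `localMediaSessionId` field mentioned above, and unlike the script it scans the whole input rather than only its first line.

```shell
#!/bin/bash
# count_vod_streams: reads a find_all response on stdin and prints how many
# times the "localMediaSessionId" key occurs (one per VOD stream).
# grep -o prints each match on its own line, so wc -l counts occurrences.
count_vod_streams() {
    grep -o '"localMediaSessionId"' | wc -l | tr -d ' '
}

# Made-up sample response with two sessions (not real server output).
sample='[{"localMediaSessionId":"id-1"},{"localMediaSessionId":"id-2"}]'
echo "vod-stream-qty=$(printf '%s' "$sample" | count_vod_streams)"
```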

The metrics for Grafana are as follows:

    custom_stats{instance="demo.flashphoner.com:8081", job="flashphoner", param="vod-stream-qty"}

In the "Useful files" section you can find the Grafana panels with all the metrics required for testing.

Now let's optimize the mixer settings. Generally, a mixer encodes one video stream and multiple audio streams: two streams for each user plus one general audio stream. This is why it is recommended to allocate more threads to audio encoding than to video encoding.

Multithreaded mixing and the number of processor threads are determined by the following settings in the flashphoner.properties file (you can download flashphoner.properties from the "Useful files" section):

    mixer_type=MULTI_THREADED_NATIVE
    mixer_audio_threads=10
    mixer_video_threads=4

Enable or disable ZGC. Depending on the task, you need to choose between the new Z Garbage Collector (ZGC) and the classic CMS. CMS is less taxing and takes up little server processing power, but it can cause lengthy pauses. We recommend CMS for medium-sized servers and for cases that call for a large number of non-real-time mixers, for instance when mixers are used for recording streams.

For real-time use and MCU it is better to use ZGC. The number of mixers may be smaller than with CMS, but the garbage collector spends less time processing, which makes the outgoing stream smoother (as needed for conferences, video chats, and webinars).

The Garbage Collector type is determined in the wcs-core.properties. For more info, see here.

Test one: over the network

If there is a '#' symbol in the name of the published RTMP-stream, the server considers everything after it the name of the mixer. That mixer will be created automatically upon publishing. For the sake of the test, let's create RTMP-streams on another server using ffmpeg. The RTMP-streams will be rebroadcast on the test server and automatically put into mixers.
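The naming rule is easy to mirror in a script that needs to know which mixer a stream will land in. This is a sketch of the rule as described here, not the server's own code: the function name is hypothetical, and it assumes the first '#' in the name is the separator.

```shell
#!/bin/bash
# mixer_from_stream_name NAME
# Prints the part of the stream name after the first '#', or nothing when
# the name contains no '#' (such a stream is not placed into a mixer).
mixer_from_stream_name() {
    local name=$1
    case $name in
        *'#'*) echo "${name#*'#'}" ;;   # strip everything up to the first '#'
        *)     echo "" ;;
    esac
}

mixer_from_stream_name 'test1#mixer3'    # prints mixer3
```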

The following script is used to start the publishing:

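    #!/bin/bash

    mixer=24    # number of mixers to create
    stream=2    # number of streams in each mixer

    for i in $(seq 1 "$mixer"); do
        for j in $(seq 1 "$stream"); do
            # Republish the file without re-encoding; the part of the name
            # after '#' selects the mixer. Global ffmpeg options go first.
            ffmpeg -nostdin -nostats -re -i /root/test.mp4 -acodec copy -vcodec copy -g 24 -strict -2 -f flv "rtmp://172.16.40.21:1935/live/test$j#mixer$i" </dev/null >/dev/null 2>&1 &
        done
    done

where: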

mixer - the number of mixers created;

stream - the number of streams in each mixer.

Through trial and error let us determine the maximum number of mixers and streams that preserves the optimal quality of the video output.

We begin with 4 mixers with 15 streams each:

Here, the server load wasn't substantial: Load Average 1 (green line on the graph) amounted to 15-20. No stream degradation was detected. Visually, the quality of the output stream was acceptable.

For the next iteration let's increase the number of mixers to 8, while keeping the number of streams unchanged (15):

Here, the server couldn't handle the load. Load Average 1 (green line on the graph) peaked at 150. Stream degradation was detected within the first few minutes and the control stream had freezes.

Let's try reducing the number of mixers.

Six mixers with 15 streams each:

The result is not much better: the server still couldn't handle the load, as evidenced by the high Load Average 1 (over 100) and the stream degradation.

Five mixers with 15 streams each:

Here, the performance was much better — Load Average 1 was under 40 and no stream degradation was observed. The control stream suffered neither artifacts, nor freezes:

As you can see, the number of mixers for 15 streams (i.e. "conference rooms") has turned out to be quite small. Now let's test the second way of using mixing — video chat rooms for two users:

Let's begin with 12 mixers with 2 streams each:

The test was passed — Load Average 1 under 10 (green line on the graph). No stream degradation. Smooth playback of the control stream:

Now let's add 12 more mixers. As you may recall, we talked about the ratio of 2 CPU cores for 1 mixer, which means that for our 48-thread server the maximum number of mixers is 24. The test of 24 mixers with 2 streams each:

This test was a failure. The server couldn't handle the load (Load Average 1 peaked at 75 and there was stream degradation).

Thus, the maximum number of mixers for 2 streams is somewhere between 12 and 24. To minimize the number of tests, we'll use a strategy similar to binary search.

Test of 18 mixers with 2 streams each:

The test was a success. The Load Average 1 peaked at over 20 and no stream degradation was detected. The control stream had no issues either:

Now let's test 21 mixers with 2 streams each:

The test was a success. The Load Average 1 peaked around 50. No stream degradation was detected.

Now let's test 23 mixers with 2 streams each:

The test was a failure. Stream degradation was detected. The last number left to check is 22 mixers with 2 streams each:

Unfortunately, closer to the end of the test more than 40% of the streams displayed signs of degradation, meaning the test wasn't successful.

It follows that the maximum number of mixers with 2 streams for our server is 21.
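The search for the limit can be automated along the following lines. This is a sketch, not the procedure we ran by hand: `find_max_passing` and `stub_run` are hypothetical names, the stub stands in for a real test run, and the approach assumes that once a given number of mixers fails, every larger number fails too.

```shell
#!/bin/bash
# find_max_passing LOW HIGH CHECK
# LOW is known to pass and HIGH is known to fail. CHECK is a command that
# takes a mixer count and returns 0 when a run with that count passes.
find_max_passing() {
    local low=$1 high=$2 check=$3 mid
    while (( high - low > 1 )); do
        mid=$(( (low + high) / 2 ))
        if "$check" "$mid"; then
            low=$mid      # mid passed: the limit is mid or higher
        else
            high=$mid     # mid failed: the limit is below mid
        fi
    done
    echo "$low"
}

# Stand-in for a real run, mirroring the outcomes reported above:
# counts up to 21 passed, 22 and more failed.
stub_run() { (( $1 <= 21 )); }

find_max_passing 12 24 stub_run    # prints 21
```

With the bounds 12 and 24, the sketch probes 18, 21 and 22, one run fewer than the manual sequence.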

The test results are presented in the following table:

| Mixers qty | Number of streams per mixer | Test results |
|---|---|---|
| 4 | 15 | Passed |
| 5 | 15 | Passed |
| 6 | 15 | Failed |
| 8 | 15 | Failed |
| 12 | 2 | Passed |
| 18 | 2 | Passed |
| 21 | 2 | Passed |
| 22 | 2 | Failed |
| 23 | 2 | Failed |
| 24 | 2 | Failed |

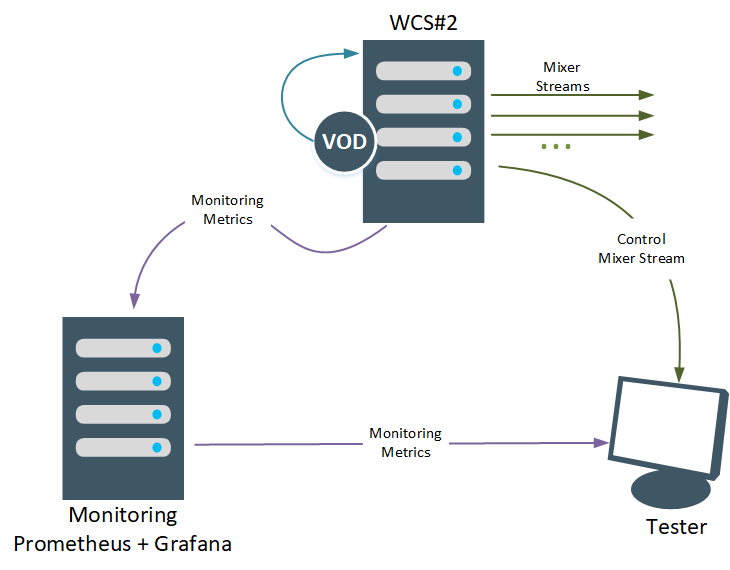

Test two: network-less

For this round we're going to exclude the network entirely. The streams will be published locally using the VOD (Video on Demand) technology. We'll use the same 720p video for capture.

To work with VODs, let's add the following lines to flashphoner.properties:

    vod_live_loop=true
    vod_stream_timeout=14400000

where:

vod_live_loop=true - cyclical stream capture, meaning once the video ends the capture begins again;

vod_stream_timeout=14400000 - parameter determining the duration of VOD publication after the users have disconnected. The time is set in ms, the duration of 20 secs being the default. For this test, we set it to be 4 hours.
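The timeout value is simply four hours expressed in milliseconds. A small sketch for deriving it for other durations (the helper name is hypothetical):

```shell
#!/bin/bash
# hours_to_ms HOURS: converts whole hours to milliseconds.
hours_to_ms() {
    echo $(( $1 * 60 * 60 * 1000 ))
}

echo "vod_stream_timeout=$(hours_to_ms 4)"    # prints vod_stream_timeout=14400000
```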

The following script is used to start the publishing:

    #!/bin/bash

    contentType="Content-Type:application/json"
    mixer=24    # number of mixers to create
    stream=2    # number of VOD streams in each mixer

    for i in $(seq 1 "$mixer"); do
        # Create mixer $i
        data='{"uri":"mixer://mixer'$i'", "localStreamName":"mixer'$i'", "hasVideo":"true", "hasAudio":"true"}'
        curl -s -X POST -H "$contentType" --data "$data" "http://localhost:8081/rest-api/mixer/startup" </dev/null >/dev/null 2>&1 &
        for j in $(seq 1 "$stream"); do
            dataj='{"uri":"vod-live://test.mp4", "localStreamName":"test'$i'-'$j'"}'
            datai='{"uri":"mixer://mixer'$i'", "remoteStreamName":"test'$i'-'$j'", "hasVideo":"true", "hasAudio":"true"}'
            # Start a looped VOD stream, wait a second, then add it to the mixer
            curl -s -X POST -H "$contentType" --data "$dataj" "http://localhost:8081/rest-api/vod/startup" </dev/null >/dev/null 2>&1 &
            sleep 1s
            curl -s -X POST -H "$contentType" --data "$datai" "http://localhost:8081/rest-api/mixer/add" </dev/null >/dev/null 2>&1 &
        done
    done

In this version of the test we excluded the network, but some of the processing power has to be diverted to processing VODs. Let's rerun the tests and look at the results.
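Before sending the requests to a live server, it can help to print the JSON bodies the publishing script would send. The sketch below only builds and prints the bodies for the mixer/add call, using the same fields as the script; the function name is hypothetical and no request is made.

```shell
#!/bin/bash
# mixer_add_payload MIXER_INDEX STREAM_INDEX
# Prints the JSON body that adds stream test<i>-<j> to mixer<i>.
# Dry-run helper for illustration; it does not contact the server.
mixer_add_payload() {
    local i=$1 j=$2
    printf '{"uri":"mixer://mixer%s", "remoteStreamName":"test%s-%s", "hasVideo":"true", "hasAudio":"true"}\n' "$i" "$i" "$j"
}

# Preview the bodies for 2 mixers with 2 streams each.
for i in 1 2; do
    for j in 1 2; do
        mixer_add_payload "$i" "$j"
    done
done
```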

Let's determine the number of mixers for 15 streams.

We begin with the maximum number discovered in the previous series of tests — 5 mixers with 15 VOD streams each:

The test was passed. No stream degradation was detected. The control stream was played successfully:

Let's increase the number of mixers to 6. Six mixers with 15 VOD streams:

As was the case with the RTMP streams, the test of 6 mixers with 15 streams each failed. The graph shows stream degradation and freezes of the control stream:

Now let's test 24 mixers with 2 VOD streams each. As you recall, this is the maximum number of mixers our 48-thread server can handle.

Unfortunately, this test was a failure. Put together, the VOD processing and the mixers put serious load on the server processor, so the test results come as no surprise. The Load Average 1 of around 80 (green line of the graph) indicates high processor loads. Both degraded streams and freezes of the control stream were detected:

Let's test 12 mixers with 2 VOD streams each:

The test was passed. No stream degradation was detected, the control output stream of a random mixer had no artifacts or freezes:

Thus, the maximum number of mixers for 2 streams is somewhere between 12 and 24.

The test of 18 mixers with 2 VOD streams each:

The test was passed. No stream degradation was detected and the control stream displayed no loss in quality.

Next is the test of 21 mixers with 2 streams each. In the previous round of tests 21 was the maximum number of mixers with RTMP streams the server could handle. Let's see how many it can handle when VOD streams are involved:

The test was passed. As before, no stream degradation was detected and the control stream displayed no loss in quality.

The test of 23 mixers with 2 VOD streams each:

The test failed. Stream degradation was detected.

The one value left to check lies between 21 and 23. The test of 22 mixers with 2 VOD streams each:

The test failed. Stream degradation was detected.

Thus, the maximum number of mixers with 2 VOD streams for our server is 21 — 21 chat rooms for two users.

The test results for mixers with VOD streams are presented in the following table:

| Mixers qty | Number of streams per mixer | Test results |
|---|---|---|
| 5 | 15 | Passed |
| 6 | 15 | Failed |
| 12 | 2 | Passed |
| 18 | 2 | Passed |
| 21 | 2 | Passed |
| 22 | 2 | Failed |
| 23 | 2 | Failed |
| 24 | 2 | Failed |

Test three: audio only

The previous rounds of testing involved mixers with complete streams, that is, streams with audio and video components. But what if we don't need the video component, what if we're running a voice chat? Let's see how many chat rooms the server can handle.

For this, we'll be using an audio-only file. Its properties are as follows:

The audio streams for the mixers will be published using the VOD (Video on Demand) technology.

The following script is used to start the testing:

    #!/bin/bash

    contentType="Content-Type:application/json"
    mixer=24    # number of mixers to create
    stream=2    # number of audio-only VOD streams in each mixer

    for i in $(seq 1 "$mixer"); do
        # Create audio-only mixer $i
        data='{"uri":"mixer://mixer'$i'", "localStreamName":"mixer'$i'", "hasVideo":"false", "hasAudio":"true"}'
        curl -s -X POST -H "$contentType" --data "$data" "http://localhost:8081/rest-api/mixer/startup" </dev/null >/dev/null 2>&1 &
        for j in $(seq 1 "$stream"); do
            dataj='{"uri":"vod-live://audio-only.mp4", "localStreamName":"test'$i'-'$j'"}'
            datai='{"uri":"mixer://mixer'$i'", "remoteStreamName":"test'$i'-'$j'", "hasVideo":"false", "hasAudio":"true"}'
            # Start a looped VOD stream, wait a second, then add it to the mixer
            curl -s -X POST -H "$contentType" --data "$dataj" "http://localhost:8081/rest-api/vod/startup" </dev/null >/dev/null 2>&1 &
            sleep 1s
            curl -s -X POST -H "$contentType" --data "$datai" "http://localhost:8081/rest-api/mixer/add" </dev/null >/dev/null 2>&1 &
        done
    done

Please note that for this test we disable video both when creating a mixer and when adding streams to it:

    "hasVideo":"false"

For the graphs to display the data on the streams' audio components, we're adding the following metrics:

    native_resources{instance="demo.flashphoner.com:8081", job="WCS_metrics_statistic", param="native_resources.audio_codecs"}

and

    native_resources{instance="demo.flashphoner.com:8081", job="WCS_metrics_statistic", param="native_resources.audio_resamplers"}

You can find the scripts and panels for Grafana in the "Useful files" section.

We begin with 24 mixers with 2 streams each. It is possible to up the number of streams, but it would make the assessment of the control stream performance slightly inconvenient.

For our server, 24 is the maximum number of mixers with video that it can handle in theory (and the tests showed that in practice it cannot). In audio-only mode the server ran without a hitch. Load Average 1 never exceeded 10. The control stream displayed no audible signs of quality degradation: no stutters, accelerations, etc.

Without further ado: in audio-only mode the server could handle 240 mixers with 2 streams each with no stream degradation and no drops in quality. For our 48-thread server this means 5 audio mixers per CPU core:
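The per-core figure follows from dividing the mixer count by the 48 hardware threads of the test server. The sketch below does the same division for the audio-only and the video results; the helper name is hypothetical, and `awk` is used only because shell arithmetic is integer-only.

```shell
#!/bin/bash
# mixers_per_thread MIXERS THREADS
# Prints mixers per hardware thread with two decimals.
mixers_per_thread() {
    LC_ALL=C awk -v m="$1" -v t="$2" 'BEGIN { printf "%.2f\n", m / t }'
}

mixers_per_thread 240 48    # audio-only result: prints 5.00
mixers_per_thread 21 48     # best video result: prints 0.44
```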

The test results for audio-only streams are presented in the following table:

| Mixers qty | Number of streams per mixer | Test results |
|---|---|---|
| 24 | 2 | Passed |
| 48 | 2 | Passed |
| 240 | 2 | Passed |

In conclusion

The results of the three rounds of tests are presented in the following table:

| **Mixers qty** | Number of streams per mixer | Test results for RTMP streams | Test results for VOD streams | Test results for audio-only streams |
|---|---|---|---|---|
| 4 | 15 | Passed | - | - |
| 5 | 15 | Passed | Passed | - |
| 6 | 15 | Failed | Failed | - |
| 8 | 15 | Failed | - | - |
| 12 | 2 | Passed | Passed | - |
| 18 | 2 | Passed | Passed | - |
| 21 | 2 | Passed | Passed | - |
| 22 | 2 | Failed | Failed | - |
| 23 | 2 | Failed | Failed | - |
| 24 | 2 | Failed | Failed | Passed |
| 48 | 2 | - | - | Passed |
| 240 | 2 | - | - | Passed |

In conclusion, the maximum number of mixers for streams with video is 21 with 2 streams each. Most of the load in this case comes as a result of stream transcoding within the mixers and the mixing process itself. Audio transcoding is a lot less taxing, which greatly increases the maximum number of mixers for audio-only streams, compared to the video streams.

In this article, we've demonstrated three similar methods for testing the server performance and for determining the maximum number of mixers depending on your goals and server capacity.

We have been testing the "classic" mixers, which means that if you're using MCU the results will be different. This is because in MCU mode a separate mixer is created for each incoming stream. For instance, in MCU mode a mixer with 10 incoming streams will create 10 mixers with 9 streams each, plus one mixer with all 10 streams. The resulting total is 11 mixers (10x9 plus 1x10 streams).
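The MCU arithmetic generalizes to any number of participants. A minimal sketch with hypothetical helper names, assuming the pattern from the example holds for every room size: one mixer per participant that carries everyone else, plus one mixer that carries all streams.

```shell
#!/bin/bash
# mcu_mixer_count N: mixers created for a room with N incoming streams.
mcu_mixer_count() {
    echo $(( $1 + 1 ))
}

# mcu_mixed_streams N: total streams fed into those mixers,
# i.e. N mixers with N-1 streams each plus one mixer with N streams.
mcu_mixed_streams() {
    local n=$1
    echo $(( n * (n - 1) + n ))
}

mcu_mixer_count 10      # prints 11
mcu_mixed_streams 10    # prints 100
```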

You might see results that are different from ours when it comes to your servers. The test results might be influenced by the following factors:

quality, resolution, and bitrate of incoming streams;

network state — connection speed, connection stability, availability of broadcast traffic, etc.;

state of server hardware — clock speed, number of physical and/or hyper-threading cores, RAM size, etc.;

distance from the data center to the end users (publishers and viewers).

All of these factors may influence the test results, so keep that in mind when selecting a server. We recommend performing a series of tests in conditions similar to the ones in which the server is expected to operate.

Good streaming to you!

Useful files

Panel for Grafana:

WCS settings:

Script for custom metrics:

Scripts for testing:

Bonus section on how to use the panels for Grafana

- Download and save the .json files from the previous section.
- Open the file and change the IP address from our server's (172.16.40.23) to that of your server. Save the changes.
- Launch the Grafana web interface. Click "Import" in the left menu.
- Click "Upload JSON file" on the page that opens.
- Select the previously edited .json file and click "Import" on the page that opens.

Links

Settings file flashphoner.properties

Settings file wcs-core.properties

Quick deployment and testing of the server