With ML projects still on the rise, we have yet to see ML integrated into almost every device around us. The need for processing power, memory and experimentation has led machine learning and deep learning frameworks to target desktop computers first. However, once trained, a model may be executed in a more constrained environment, such as a smartphone or an IoT device. A particularly interesting environment to run the model in is the browser. Browser-based solutions may be used on a wide range of devices, desktop and mobile, online and offline. The topic of this post is how to prepare a model for in-browser use.

This post presents end-to-end implementations of model creation in Python and Node.js. The end goal is to create a model and to use it in a browser. I'll use TensorFlow and TensorFlow.js as the main frameworks. One could train a model in Python and convert it to JS. The alternative is to train a model directly in JavaScript, hence omitting the conversion step.

I have more experience in Python and use it in my everyday work. I occasionally use JavaScript, but have very little experience in contemporary front-end development. My hope is that Python developers with little JS experience can use this post to kick-start their JS usage.

What is Node.js?

Node.js is a runtime environment that executes JavaScript code outside a browser. JavaScript is a dynamically typed programming language that conforms to the ECMAScript specification. In plain English, this means that the language is defined by a specification that is released in versions, so there are different editions of JavaScript.

Node.js definitions through analogies in the Python world:

Node.js is an interpreter that processes files written in JavaScript.

NPM is the package manager, think pip for JavaScript. NPM was the original package manager. These days several alternatives exist; yarn has gained popularity.

ECMAScript specifications are like Python versions.

A package in Node.js must have a package.json file, which is somewhat similar to setup.py.

A release version of JS code intended for use in a browser is usually minified. Think of creating a .pyc file in Python. Python does it behind the scenes, but in JavaScript one has to do it explicitly.

Why Node.js and JavaScript?

IBM gives a couple of reasons:

The large community of JavaScript developers can be effective in using AI on the large scale.

The smaller footprint and fast start time of Node.js can be an advantage when deployed in containers and IoT devices.

AI models process voice, written text, and images, and when the models are served in the cloud, the data must be sent to a remote server. Data privacy has become a significant concern recently, so being able to run the model locally on the client with JavaScript can help to alleviate this concern.

Running a model locally on the client can help make browser apps more interactive.

For the project I have been working on, the possibility of using the model offline was the selling point. We had to use the model inside a web application in the wild with no connection. End users would sync the data and the model once in a while, whenever they got an internet connection.

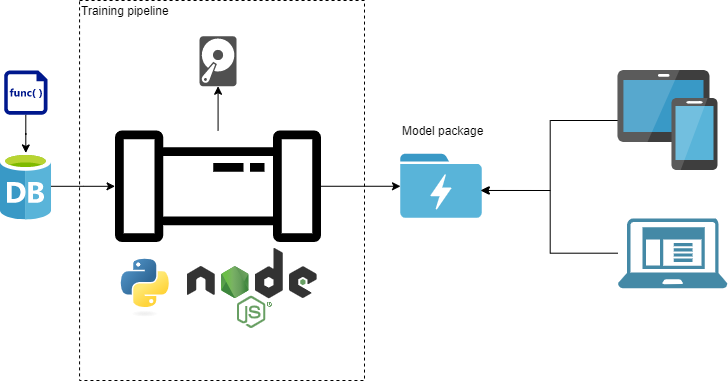

This post refers to two implementations of a training pipeline for a text classification model. One is written in Python, the other in Node.js; both use TensorFlow. I will not focus much on the models, but rather on the code around them.

Code

All code, ready to be run (locally and in Docker), is available from the modileml group on GitLab. This is a collection of repositories that contain fully implemented training pipelines and a web client. Below I will walk through the most interesting parts of it.

Architecture

The project architecture includes data in the DB and the code that fetches the data, trains the model and evaluates it. The trained model is consumed by a web app.

Incoming data consists of posts, which we need to preprocess before handing them to the model. The preprocessing is rather simple and consists of:

text cleaning (removing and replacing some patterns in the text).

tokenization. Our model will analyze words, hence we need to split text into words.

Finally, we will need to convert text into numbers. This involves building a vocabulary. Multiple approaches are available here (bag of words, TF-IDF). We are going to use the simplest one: each word from the training set gets an integer number in ascending order.

The preprocessing step is necessary both during model training and during usage (inference). During inference we do not create a vocabulary but reuse the one obtained during training.
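The three preprocessing steps can be sketched in a few lines of plain JavaScript. This is only an illustration under my own assumptions: the cleaning rule, the reserved ids for padding and unknown words, and all function names are hypothetical, and the real cleanText in the repositories may differ.

```javascript
// Hypothetical cleaning rule: lowercase, keep letters and digits only.
function cleanText(text) {
  return text
    .toLowerCase()
    .replace(/[^a-z0-9\s]/g, ' ') // punctuation -> space
    .replace(/\s+/g, ' ')         // collapse runs of whitespace
    .trim();
}

// Split cleaned text into words; empty input gives an empty list.
function tokenize(text) {
  const cleaned = cleanText(text);
  return cleaned === '' ? [] : cleaned.split(' ');
}

const PAD_ID = 0; // assumption: 0 is reserved for padding
const UNK_ID = 1; // assumption: 1 marks words unseen during training

// Training only: number each new word in order of first appearance.
function buildVocab(texts) {
  const vocab = new Map();
  let nextId = UNK_ID + 1;
  for (const text of texts) {
    for (const word of tokenize(text)) {
      if (!vocab.has(word)) {
        vocab.set(word, nextId);
        nextId += 1;
      }
    }
  }
  return vocab;
}

// Training and inference: map words to ids with an existing vocabulary.
function encode(text, vocab) {
  return tokenize(text).map((word) => (vocab.has(word) ? vocab.get(word) : UNK_ID));
}

const vocab = buildVocab(['Hello, world!', 'Hello again.']);
console.log(encode('hello there world', vocab)); // [ 2, 1, 3 ]
```

A Map is used instead of a plain object so that words such as "constructor" do not collide with built-in object properties.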

At this point you may spot a tricky bit. We need to do preprocessing both during training and inference. However, we may have training code in Python and inference code in JavaScript! Just to give you a taste of it: the inference code may come with a requirement such as supporting iPhones as old as the iPhone 5. Some regular expressions that are perfectly fine in Python won't work in these circumstances, so one will need to adjust the Python code to match the inference requirements.
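To make this concrete, here is one example of my own choosing (it is not taken from the project code). Lookbehind assertions are a regular expression feature that Python supports but that older Safari versions do not understand, so a cleaning rule that relies on them has to be rewritten. The function name and the rule itself (dropping thousands separators) are hypothetical.

```javascript
// Python original, for comparison: re.sub(r'(?<=\d),(?=\d)', '', text)
// The lookbehind (?<=\d) is the problematic part on older browsers.
// Portable rewrite: capture the digit before the comma and write it back.
// The lookahead (?=\d) is kept, since lookaheads are widely supported.
function stripThousandsSeparators(text) {
  return text.replace(/(\d),(?=\d)/g, '$1');
}

console.log(stripThousandsSeparators('1,234,567 items, ok')); // 1234567 items, ok
```

Whichever form is chosen, the same rule has to be applied in the Python training code, otherwise the model sees differently cleaned text at training and inference time.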

Data

We will use a subset of the 20 newsgroups text dataset. The dataset comprises around 18000 newsgroup posts on 20 topics. We will use just 8 topics instead of 20. As the very first step (independent of ML) we will store all the data in a database (SQLite for simplicity). This is done in order to mimic a real-life environment where one often does not have clean train/test files as input, but a database. So train and test splits are created from the data in the database based on some criteria. We will assign a random year value to every post. Train and test data have disjoint year ranges, so it is easy to figure out which data corresponds to train and which to test.

The Python code to create the database is found in the file news_tosqlite.py. The database has a single table, news, with the fields:

Data (string) - raw post text.

Category (string) - newsgroup topic as string. Our classification target.

Year (integer) - a random year. Train data have year in range (1922-1953), test data have year in range (1990-2000).
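Given rows with these three fields, the train/test split reduces to a comparison against a threshold year. The sketch below uses made-up rows and a made-up threshold of 1960 (any value between 1953 and 1990 would do); the function name is hypothetical.

```javascript
// Hypothetical rows as they might come back from the news table.
const rows = [
  { Data: 'post a', Category: 'sci.space', Year: 1930 },
  { Data: 'post b', Category: 'rec.autos', Year: 1995 },
  { Data: 'post c', Category: 'sci.space', Year: 1953 },
];

// Assumption: any year strictly greater than splitYear is test data.
function splitByYear(allRows, splitYear) {
  const trainRows = [];
  const testRows = [];
  for (const row of allRows) {
    (row.Year > splitYear ? testRows : trainRows).push(row);
  }
  return { trainRows, testRows };
}

const { trainRows, testRows } = splitByYear(rows, 1960);
console.log(trainRows.length, testRows.length); // 2 1
```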

Python

Our team leverages Luigi for python code orchestration. We would normally have one task called Train, which we run with a local scheduler.

The following figure shows the pipeline:

It is a directed acyclic graph (DAG), meaning that task dependencies do not need to be linear.

The most interesting code is in the ConvertToJs task, which prepares the model for inference in JavaScript. There are two options to convert a model from within Python code:

The function tensorflowjs.converters.save_keras_model acts on a model instance (i.e. a model loaded into memory) and saves it as a Keras model. One has to use the tf.loadLayersModel function to load it in JavaScript.

The function tensorflowjs.converters.convert_tf_saved_model converts a model stored on disk in the SavedModel format. One has to use tf.loadGraphModel on the client side.

Regardless of the saved format, a model in JavaScript format consists of two logical parts: the model definition in a JSON file (model.json) and one or several binary files with model weights. The function save_keras_model may be better during development, as the resulting JSON file is more human-readable. Another difference is that Keras models can be fine-tuned, whereas a SavedModel is saved in a frozen state.

The ConvertToJs task also generates a constants.js file, which contains the vocabulary and the necessary text preprocessing constants.

The easiest way to run the whole pipeline, including the demonstration website, is to use the provided Dockerfile:

# Build the image
docker build -t tf_demo .

# Run it. After training is done, visit http://localhost:5000/
docker run --rm -itp 5000:80 tf_demo

The provided demo is quite simple. For a more modern approach one could think of generating an NPM package. An NPM package will allow front-end developers to integrate the model more easily.

Inference

Before we come to the Node.js code, let's look at how to use our model in a browser. This is, in fact, quite easy. I assume vanilla JavaScript. We reference the TensorFlow.js library and our JS code in HTML:

<script src="https://cdn.jsdelivr.net/npm/@tensorflow/tfjs@2.3.0/dist/tf.min.js"></script>
<script type="text/javascript" src="assets/constants.js"></script>
<script type="text/javascript" src="predict.js"></script>

constants.js contains the vocabulary; predict.js contains the JavaScript code to load the model and run preprocessing and inference. In a nutshell it is:

// global variable to hold our model
let model;

(async function() {
  // load the model after page loaded
  model = await tf.loadLayersModel('assets/model.json');
})();

async function predict(input = '', count = 5) {
  // equivalent of argsort in descending order
  const dsu = (arr1, arr2) => arr1
    .map((item, index) => [arr2[index], item]) // add the args to sort by
    .sort(([arg1], [arg2]) => arg2 - arg1) // sort by the args
    .map(([, item]) => item); // extract the sorted items

  // Convert input text to words
  const words = cleanText(input).split(' ');
  // Model works on sequences of MAX_SEQUENCE_LENGTH words, make one
  const sequence = makeSequence(words);
  // convert JS array to TF tensor (dtype is a positional argument, not a keyword)
  let tensor = tf.tensor1d(sequence, 'float32').expandDims(0);
  // run prediction, obtain a tensor
  let predictions = await model.predict(tensor);
  const numClasses = predictions.shape[1];
  // array of sequential indices into predictions
  const indices = Array.from(Array(numClasses), (x, index) => index);
  // convert tensor to JS array
  predictions = await predictions.array();
  // we predicted just a single instance, get its results
  predictions = predictions[0];
  // prediction indices sorted by score (probability)
  const sortedIndices = dsu(indices, predictions);
  const topN = sortedIndices.slice(0, count);
  const results = topN.map(function(tagId) {
    const topic = getKey(TAGS_VOCAB, tagId);
    const prob = predictions[tagId];
    return {
      topic: topic,
      score: prob
    };
  });
  return results;
}

The predict function classifies the input text and returns the top count topics.

The inference code is the same regardless of the programming language used for model training.
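The predict function relies on two helpers that are not shown above, makeSequence and getKey. A minimal sketch of what they might look like follows. The constant values, the WORDS_VOCAB name, the reserved ids and the choice to pad at the end are all my assumptions for illustration; the real constants.js is generated during training and may be organized differently.

```javascript
// Assumed shape of what constants.js provides (values are made up).
const MAX_SEQUENCE_LENGTH = 5;
const WORDS_VOCAB = { space: 2, rocket: 3, engine: 4 };
const TAGS_VOCAB = { 'sci.space': 0, 'rec.autos': 1 };
const PAD = 0;     // assumption: id used to fill short sequences
const UNKNOWN = 1; // assumption: id for words missing from the vocabulary

// Turn a list of words into exactly MAX_SEQUENCE_LENGTH integer ids.
function makeSequence(words) {
  const ids = words.slice(0, MAX_SEQUENCE_LENGTH).map((word) =>
    (Object.prototype.hasOwnProperty.call(WORDS_VOCAB, word) ? WORDS_VOCAB[word] : UNKNOWN));
  while (ids.length < MAX_SEQUENCE_LENGTH) {
    ids.push(PAD);
  }
  return ids;
}

// Reverse lookup: find the key whose value equals id.
function getKey(vocab, id) {
  return Object.keys(vocab).find((key) => vocab[key] === id);
}

console.log(makeSequence(['rocket', 'launch', 'space'])); // [ 3, 1, 2, 0, 0 ]
console.log(getKey(TAGS_VOCAB, 1)); // rec.autos
```

Whatever padding and truncation rules are used here must match the ones used when the training sequences were built.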

Node.js

Node.js is based on packages. In this example, we can say that a package is our project. All npm packages contain a file package.json (usually in the project root), which holds various metadata about the package and a list of package dependencies. The first step in consuming the package is to install its dependencies. A package manager is used for this.

Traditionally, NPM was the package manager. At some point a better alternative called Yarn appeared. At the time of writing Yarn is at version 2, but for the sake of backwards compatibility a fresh installation gives you version 1 by default.

I found the ideas in Yarn 2 interesting; however, I was not able to use it in this project. There were two errors I wasn't able to overcome:

It couldn't handle "type": "module" in the package.json file. More on this later.

It was constantly complaining that I had to build the package @tensorflow/tfjs-node from source.

So Yarn v1.x it is.

Once we have our dependencies installed, we need to reference them in the code. Here comes a tricky and annoying part for someone with a Python background. For some time there was no official way to write modular JS code. Variables and functions were global. Naturally, people tried to overcome this problem and so module loaders were born (there are module bundlers as well). The JavaScript language didn’t have a native way of organizing code before the ES2015 standard. Code organization guides how one references dependencies, i.e. defines and uses modules. Nowadays, we can broadly speak about two syntaxes: import and require.

An example of import syntax:

import tf from "@tensorflow/tfjs-node";

An example of require syntax:

const tf = require('@tensorflow/tfjs-node');

Looks innocent. However, trying to execute code with a pure node command (i.e. node train.js), I found these two syntaxes to be mutually exclusive (and it is indeed described in the documentation). Once you try to use import, node will complain that you can't use import outside a module! A commonly proposed solution on the Internet is to add "type": "module" to your package.json. Once done, you won't be able to use require anymore. The error message was: ReferenceError: require is not defined. And here is another downside of Node.js for me. I was trying to google solutions for the errors that appeared (we all do it, right?!). With Node.js I often found myself with a hundred proposed solutions, all of them different and none of them working, which means that there could be many underlying causes resulting in the same error message.

I see two possible solutions for the import/require issue:

Carefully read the documentation for ECMAScript modules and CommonJS modules.

Use babel-node. Then it will be possible to mix import with require. Not only is this an extra dependency for your project, but if you want to use import, you will have to write it in a special form anyway: import * as tf from "@tensorflow/tfjs-node";.

Not bad for just starting a new project and getting dependencies in place! Trying to figure out all the details feels like this:

Let's finally move towards the code. In order to mimic the Python pipeline I used a couple of libraries:

better-sqlite3 - to access the SQLite database.

dataframe-js - to process data in dataframes, somewhat similar to Pandas.

pipeline-js - to organize code into a pipeline. This is a linear pipeline and not a DAG. It aims to replace code like this

trainModel(makeFeatures(preprocess(fetchData(database))))

with

var pipeline = new Pipeline([
  fetchData,
  preprocess,
  makeFeatures,
  trainModel,
]);
pipeline.process(database);

I didn't find a DAG package with a built-in task executor. Perhaps this is due to my low familiarity with the ecosystem.
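The idea of a linear pipeline is simple enough to sketch without any library. The snippet below is not how pipeline-js is implemented; it is a plain stand-in built on Array.prototype.reduce, with toy stages and a toy database object that I made up for illustration.

```javascript
// Hypothetical stages; each one receives the previous stage's output.
const fetchData = (db) => db.posts;
const preprocess = (posts) => posts.map((post) => post.toLowerCase());
const makeFeatures = (posts) => posts.map((post) => post.split(' ').length);

// Run the stages left to right, feeding each result into the next stage.
function runPipeline(stages, input) {
  return stages.reduce((value, stage) => stage(value), input);
}

const database = { posts: ['Hello world', 'One two three'] };
console.log(runPipeline([fetchData, preprocess, makeFeatures], database)); // [ 2, 3 ]
```

The result is the same as the nested call makeFeatures(preprocess(fetchData(database))), but the order of the stages reads top to bottom and is easy to change.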

Once we know the above, the rest is quite similar to python. Here is a function to preprocess data that we get from the database:

function preprocessData(rawData) {
  const columns = ["Data", "Category", "Year"];
  let df = new DataFrame.DataFrame(rawData, columns);

  // filter out missing values
  df = df.dropMissingValues(["Data", "Category"]);
  df = df.filter(row => row.get("Category") !== " ");

  // split into train and test sets
  df = df.withColumn("testData", () => false);
  df = df.map((row) => row.set("testData", row.get("Year") > config.testsSplitYear));

  // convert sentences/categories to words
  df = df.withColumn("Words", (row) => cleanText(row.get("Data")).split(" "));
  df = df.chain(row => row.set("Category", row.get("Category").split(" ")));

  const featuresFile = `${getBuildFolder('data')}/features.json`;
  df.select('Category', 'testData', 'Words').toJSON(true, featuresFile);
  console.log(`Features saved to: ${featuresFile}`);
  return df;
}

Note that it uses the global variable config. We may save intermediate data to disk. Here I save the preprocessed data into a JSON file.

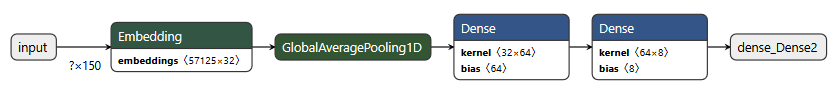

Model definition is straightforward:

export function createModel(wordsVocabSize, outputVocabSize, inputLength, embeddingDim = 64) {
  const model = tf.sequential({
    layers: [
      tf.layers.embedding({
        inputDim: wordsVocabSize,
        outputDim: embeddingDim,
        inputLength: inputLength,
      }),
      tf.layers.globalAveragePooling1d(),
      tf.layers.dense({
        activation: "relu",
        units: embeddingDim * 2,
      }),
      tf.layers.dense({
        activation: "softmax",
        units: outputVocabSize,
      }),
    ],
  });

  model.compile({
    optimizer: tf.train.adam(),
    loss: "sparseCategoricalCrossentropy",
    metrics: ["acc"],
  });

  return model;
}

We build a sequential model with an embedding layer and one hidden (dense) layer.
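Since the model is going to be downloaded by a browser, it is worth estimating its size. The number of trainable parameters follows directly from the layer definitions: an embedding layer stores one vector per word, the pooling layer has no weights, and each dense layer has a weight matrix plus biases. The function below is a back-of-the-envelope sketch; the vocabulary size of 10000 is a made-up figure, not the one from the actual pipeline.

```javascript
// Count trainable parameters for: embedding -> global average pooling -> dense -> dense.
function countParams(wordsVocabSize, outputVocabSize, embeddingDim = 64) {
  const hiddenUnits = embeddingDim * 2;
  const embedding = wordsVocabSize * embeddingDim;                // one vector per word
  const pooling = 0;                                              // averaging has no weights
  const hidden = embeddingDim * hiddenUnits + hiddenUnits;        // weights + biases
  const output = hiddenUnits * outputVocabSize + outputVocabSize; // weights + biases
  return { embedding, pooling, hidden, output, total: embedding + pooling + hidden + output };
}

// Hypothetical sizes: 10000 words in the vocabulary, 8 topics.
console.log(countParams(10000, 8).total); // 649352
```

With 4 bytes per float32 weight this comes to roughly 2.6 MB, almost all of it in the embedding layer, so the vocabulary size is the main lever for shrinking the download.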

When saving the model we do not need to convert it and can simply do await model.save('nodejs_model'). Please refer to the complete source code for all the details.

The Node.js pipeline produces a model and reports the recall@K metric for K = 1, 3, and 5.
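For readers unfamiliar with the metric, here is a minimal sketch of how recall@K can be computed. It assumes exactly one true label per example, in which case recall@K is the fraction of examples whose true label is among the K highest-scoring predictions. The function names and the toy scores are hypothetical, and the implementation in the repository may differ.

```javascript
// Indices of the k largest scores, best first.
function topK(scores, k) {
  return scores
    .map((score, index) => [score, index])
    .sort(([a], [b]) => b - a)
    .slice(0, k)
    .map(([, index]) => index);
}

// Fraction of examples whose true label appears among the top k predictions.
function recallAtK(allScores, trueLabels, k) {
  let hits = 0;
  allScores.forEach((scores, i) => {
    if (topK(scores, k).includes(trueLabels[i])) {
      hits += 1;
    }
  });
  return hits / allScores.length;
}

// Hypothetical scores for four examples over four classes.
const scores = [
  [0.7, 0.1, 0.1, 0.1], // true label 0: ranked first
  [0.1, 0.6, 0.2, 0.1], // true label 1: ranked first
  [0.5, 0.1, 0.3, 0.1], // true label 2: ranked second
  [0.4, 0.3, 0.2, 0.1], // true label 3: ranked last
];
const labels = [0, 1, 2, 3];

console.log(recallAtK(scores, labels, 1)); // 0.5
console.log(recallAtK(scores, labels, 3)); // 0.75
```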

Further improvements

The demonstrated pipelines may be further improved:

Separate training from inference, i.e. use different docker images. Training image may save the model to some sort of persistent storage and inference image may download it.

Generate an npm package with embedded model instead of a bunch of files, publish this package.

Tests. They have been omitted here.

Docker tweaks, such as not running as the root user.

Conclusion

Benefits of training in Node.js when you are going to run inference in JavaScript:

You have one preprocessing code base.

You omit the model conversion step. When building a model in Python, one always has to double-check which layers are implemented in TensorFlow.js. The conversion tool won't report any problems, but loading the model in JavaScript may fail. Not only may some layers be missing, but others may be implemented differently.

You could perhaps create a single npm package containing both training and inference code, which you may publish to an npm repository (public or private). This may make your life a lot easier. Our current solution for a client includes an npm inference package. Preparing such a package with Python in Docker was a tedious process.

Downsides of working in node.js (from a python developer perspective):

A steep learning curve in order to use it right. Perhaps you start with node and vanilla JavaScript. Soon babel kicks in and you will need to learn about all the ES5/ES6/ES2020 standards. Then you may want to move towards TypeScript (a typed JavaScript language), where you would begin to compile .ts files into .js. After that you may find out that not all of your dependencies are TypeScript-compatible (although, as I understand it, they will run OK; you just miss some benefits of TypeScript).

Debugging difficulties. Node.js is very asynchronous whereas Python is mostly synchronous by default. Loading data from disk in order to apply different functions to it may be hard. This may be just a habit. Currently a big chunk of my work includes trying out things on data. By this I mean that I would very often do:

df = pd.read_parquet('data.parquet')
preprocess_simple(df)
do_more(df)
# ... (think) ...
df2 = pd.read_parquet('another_data.parquet')
do_even_more(df, df2)

In Node.js data loading will probably be asynchronous and the code is like this:

let df = dataframe.loadParquet('data.parquet');
do_more(df); // Oops! You'll get an error here as df is a promise
df.then(df => { do_more(df) });

The real-data df object exists only inside the then function. It's possible to overcome this and save df globally, but doing so is not natural for people coming from a synchronous programming world.

Node.js REPL. In Python I can just copy-paste chunks of my code from a working file into the REPL, including import statements. The Node.js REPL uses the CommonJS module loader. If you use ECMAScript modules, you won't be able to paste import chunks. Alternatively, you may start the babel-node REPL in order to overcome the import/require issue. Babel-node has its own peculiarities: only var variables are supported in the REPL.
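The asynchronous loading problem described above is easiest to see in a small self-contained script. The loader below is a stand-in that I invented for illustration (it resolves with made-up rows after a short delay); any promise-returning function behaves the same way.

```javascript
// Hypothetical loader standing in for any asynchronous data source.
function loadRows(name) {
  return new Promise((resolve) => {
    setTimeout(() => resolve([{ file: name, value: 1 }, { file: name, value: 2 }]), 10);
  });
}

// Calling the loader directly gives a promise, not the rows.
const pending = loadRows('data.parquet');
console.log(pending instanceof Promise); // true

// Inside an async function the code reads top to bottom again,
// much like the synchronous Python version.
async function explore() {
  const df = await loadRows('data.parquet');
  const df2 = await loadRows('another_data.parquet');
  return df.length + df2.length;
}

explore().then((total) => console.log(total)); // 4
```

Wrapping the exploratory steps in one async function keeps the then callbacks out of sight, at the price of having everything live inside that function.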

If I were asked whether to choose Python or Node.js for a new project involving inference in the browser, it would still be a hard question to answer. I feel it is easier to experiment in Python, but writing in Python and rewriting in JS afterwards is time-consuming. So if your project is relatively small and straightforward, go with JS from the very beginning. You will be closer to the consumers of your model and will understand their needs better. As always, choose the tool that suits the job.

I hope that the provided code helps you to start your journey into Node.js and mobile machine learning.

References

For a deeper dive into the topic, have a look at these links:

codelabs.developers.google.com/codelabs/tensorflowjs-nodejs-codelab - a gentle introduction to TensorFlow.js + node.js from Google.

github.com/tensorflow/tfjs-examples/ - repository with official TensorFlow.js examples. Get inspired!

auth0.com/blog/javascript-module-systems-showdown/ - Information about modules in node.js. Use it to understand what modules are and how to use them.