At Miro, we always try to improve the maintainability of our code by using common practices, including in matters of multithreading. This does not solve all the issues that arise from the ever-increasing load, but it simplifies support: it improves both code readability and the speed of developing new features.

Today (May 2020) we have more than 100,000 daily users, about 100 servers in the production environment, 6,000 HTTP API requests per second and more than 50,000 WebSocket API commands, and daily releases. Miro has been developing since 2011; in the current implementation, user requests are handled in parallel by a cluster of different servers.

The core asset of our product is the collaborative user whiteboards, so the main load falls on them. The primary subsystem that controls most of the concurrent access is a stateful system for user sessions on a whiteboard.

When a new whiteboard is opened, a state is created on one of the servers. The state stores both the runtime data required for simultaneous collaboration and display of the content and the system data, such as mapping to the processing threads. Information about which server stores the state is recorded in a distributed data structure and is available to the cluster as long as the server is running and at least one user is on the whiteboard. We use Hazelcast for this part of the subsystem. All new whiteboard connections are forwarded to the server that stores the state.

When connecting to the server, the user enters the acceptor thread, whose sole purpose is to bind the connection to the state of the whiteboard, in the threads of which all following work will take place.

There are two threads associated with a whiteboard: a network thread, which handles connections, and a “business” thread, which is responsible for the business logic. This allows us to handle the processing of various types of tasks (network packets and business commands) differently. Processed network commands from users in the form of applied business tasks are queued to the business thread, where they are processed sequentially. This avoids unnecessary synchronization when writing the application logic.

The division of code into business/application and system code is our internal convention. It allows us to separate the code responsible for user features from the low-level details of communication, scheduling, and storage, which have a service function.

If the acceptor thread detects that the whiteboard state doesn’t exist, it will create a corresponding initialization task. State initialization tasks are handled by a different type of thread.

This implementation has the following advantages:

The subsystem described above is quite nontrivial to implement. The developer has to keep the whole model of relationships between threads in their head and take into account the reverse process of closing the whiteboards. When closing a whiteboard, you have to remove all subscriptions and delete entries from the registries and do this in the same threads where they were originally initialized in the desired sequence.

We noticed that the bugs and difficulties of modifying the code that appeared in this subsystem were often related to misunderstanding of the processing context. Juggling the threads and tasks made it difficult to answer the question of in which thread a particular piece of code was being executed.

To solve this problem, we used a method of “coloring” the threads — it is a policy aimed at regulating the use of threads in the system. Threads are assigned colors, and methods determine the acceptability of execution within threads. The color here is just an abstraction; it could be another entity, like an enumeration. In Java, annotations can serve as a color markup language:

Annotations are applied to a method and can be used to define the validity of a method call. For example, if the method’s annotation allows yellow and red colors, the first thread will be able to call that method; for the second, an attempt to call the method will result in an error.

We can also specify unacceptable colors:

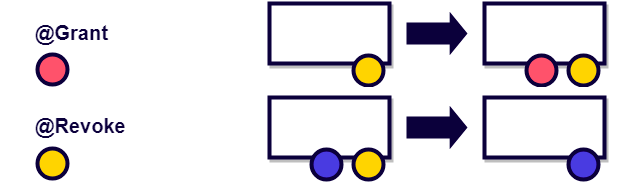

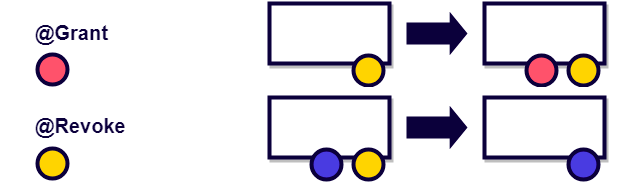

We can add and remove thread privileges dynamically:

The lack of annotation or annotation as in the example below means that the method can be executed in any thread:

Android developers may be familiar with this approach through the use of MainThread, UiThread, WorkerThread, and similar annotations.

Thread coloring uses the principle of self-documenting code, and the method itself is well amenable to static analysis. Using static analysis, it is possible to check whether the code is written correctly or not before executing it. If we exclude Grant and Revoke annotations and assume that a thread upon initialization has an immutable set of privileges, it will be a flow-insensitive analysis — a simple version of static analysis that does not take a call order into account.

When we implemented the thread coloring, we did not have any ready-to-be-used solutions for static analysis in our DevOps infrastructure, so we took a simpler and cheaper path — we created our annotations that are uniquely associated with each thread type. We started using aspects to check the correctness of the annotations at runtime.

For aspects, we use the AspectJ extension and a plugin for Maven that performs weaving at compile time. Initially, we set weaving to be performed at load time, when ClassLoader loads the classes. However, we have encountered the fact that the weaver sometimes behaved incorrectly when loading the same class concurrently, as a result of which the original byte code of the class remained unchanged. This resulted in very unpredictable and difficult to reproduce the behavior in the production environment. It is possible that current versions of AspectJ do not have this problem.

Using aspects allowed us to quickly find most of the problems in the code.

It is important to remember to always keep the annotations up to date: you can delete them, feel lazy about adding them, or aspect weaving can be turned off altogether — in these cases, the thread coloring will quickly lose its relevance and value.

One of the types of coloring is the GuardedBy annotation from Java.util.concurrent. It delineates access to fields and methods by specifying which locks are required for correct access.

Modern IDEs even support the analysis of this annotation. For instance, IntelliJ IDEA shows this message if there is something wrong with the code:

The method of coloring threads itself is not new, but it seems that in languages such as Java, where multiple threads are often used to access mutable objects, its use not only in the documentation but also in compiling and building phases could greatly simplify the development of multithreaded code.

We still use the aspect implementation of coloring. If you know a more elegant solution or an analysis tool that allows you to increase the stability of this approach to system changes, please feel free to share it.

P.S. The article was first published at Medium.

Today (May 2020) we have more than 100,000 daily users, about 100 servers in the production environment, 6,000 HTTP API requests per second, more than 50,000 WebSocket API commands, and daily releases. Miro has been in development since 2011; in the current implementation, user requests are handled in parallel by a cluster of different servers.

The concurrent access control subsystem

The core asset of our product is its collaborative user whiteboards, so they carry the main load. The primary subsystem that controls most of the concurrent access is a stateful system of user sessions on a whiteboard.

When a new whiteboard is opened, a state is created on one of the servers. The state stores both the runtime data needed for simultaneous collaboration and content display, and system data such as the mapping to processing threads. Information about which server stores the state is recorded in a distributed data structure and is available to the cluster as long as the server is running and at least one user is on the whiteboard. We use Hazelcast for this part of the subsystem. All new connections to the whiteboard are forwarded to the server that stores its state.
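The routing rule can be sketched as follows. This is not our production code: a `ConcurrentHashMap` stands in for the Hazelcast distributed map, and `BoardRegistry`, `ownerOf`, and `release` are hypothetical names. The idea is that the first server to claim a board wins atomically, and every later connection is forwarded to that server.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

class BoardRegistryDemo {
    public static void main(String[] args) {
        BoardRegistry registry = new BoardRegistry();
        System.out.println(registry.ownerOf("board-1", "server-a")); // server-a claims the board
        System.out.println(registry.ownerOf("board-1", "server-b")); // still server-a
        registry.release("board-1", "server-a");                      // the last user left
        System.out.println(registry.ownerOf("board-1", "server-b")); // now server-b
    }
}

// Hypothetical registry; in a cluster this map would be distributed (e.g. via Hazelcast).
class BoardRegistry {
    private final Map<String, String> ownerByBoard = new ConcurrentHashMap<>();

    // Returns the server holding the board state, atomically claiming the board
    // for `candidate` if no server holds it yet.
    String ownerOf(String boardId, String candidate) {
        String existing = ownerByBoard.putIfAbsent(boardId, candidate);
        return existing != null ? existing : candidate;
    }

    // Removes the entry only if `server` still owns it, e.g. when the last user leaves.
    void release(String boardId, String server) {
        ownerByBoard.remove(boardId, server);
    }
}
```

Hazelcast's `IMap` implements `java.util.concurrent.ConcurrentMap`, so the same `putIfAbsent` and two-argument `remove` calls are available on a distributed map.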

When a user connects to the server, the connection first goes to the acceptor thread, whose sole purpose is to bind it to the whiteboard state. All subsequent work takes place in the threads associated with that state.

Two threads are associated with each whiteboard: a network thread, which handles connections, and a “business” thread, which is responsible for the business logic. This lets us process different types of tasks (network packets and business commands) differently. Once parsed, network commands from users become business tasks and are queued to the business thread, where they are processed sequentially. This avoids unnecessary synchronization in the application logic.
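A minimal sketch of this hand-off, assuming plain single-threaded executors stand in for our network and business threads (`Whiteboard`, `onPacket`, and `snapshot` are hypothetical names). Because every command for a board runs on one business thread, the board's state needs no locks.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

class BoardThreadsDemo {
    public static void main(String[] args) throws InterruptedException {
        ExecutorService network = Executors.newSingleThreadExecutor();
        ExecutorService business = Executors.newSingleThreadExecutor();
        Whiteboard board = new Whiteboard(business);
        for (int i = 0; i < 3; i++) {
            String packet = " add-sticker " + i + " ";
            network.execute(() -> board.onPacket(packet)); // a packet arrives on the network thread
        }
        network.shutdown();
        network.awaitTermination(5, TimeUnit.SECONDS);
        System.out.println(board.snapshot()); // [add-sticker 0, add-sticker 1, add-sticker 2]
        business.shutdown();
    }
}

class Whiteboard {
    private final ExecutorService businessThread;
    // Only ever touched on the business thread, so it needs no locks.
    private final List<String> items = new ArrayList<>();

    Whiteboard(ExecutorService businessThread) {
        this.businessThread = businessThread;
    }

    // Network thread: parse the packet, then queue the resulting business task.
    void onPacket(String packet) {
        String command = packet.trim(); // stand-in for real parsing
        businessThread.execute(() -> items.add(command));
    }

    // Reads the state on the business thread as well, after all queued commands.
    List<String> snapshot() {
        try {
            return businessThread.submit(() -> List.copyOf(items)).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        }
    }
}
```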

The division of code into business/application and system code is our internal convention. It allows us to separate the code responsible for user features from the low-level details of communication, scheduling, and storage, which have a service function.

If the acceptor thread detects that the whiteboard state doesn’t exist, it will create a corresponding initialization task. State initialization tasks are handled by a different type of thread.

This implementation has the following advantages:

- There is no business logic in the service thread types, where it could slow down new connections or I/O operations. That logic is isolated in dedicated “business” threads, so a delay caused by a mistake in the frequently modified business code has less impact on the rest of the system.

- State initialization is not performed in the business thread, so it does not affect the processing time of users' business commands. Initialization can take some time, and each business thread serves several whiteboards at once, so creating new whiteboards this way does not directly affect existing ones.

- Parsing network commands is usually faster than executing them, so the network and business thread pools can be configured differently to make better use of system resources.
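These points can be sketched together. The acceptor only schedules initialization on its own pool, and each board is pinned to one network thread and one business thread, taken from pools that are sized independently. The pool sizes, the hash-based assignment, and the names `ThreadPools`, `Acceptor`, and `BoardState` are illustrative assumptions, not necessarily how our system assigns boards to threads.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

class AcceptorDemo {
    public static void main(String[] args) {
        // Hypothetical sizes: parsing is cheaper than executing, so fewer network threads.
        ThreadPools pools = new ThreadPools(2, 8, 1);
        Acceptor acceptor = new Acceptor(pools);
        BoardState first = acceptor.accept("board-1").join();  // triggers initialization
        BoardState second = acceptor.accept("board-1").join(); // reuses the same state
        System.out.println(first == second);            // true
        System.out.println(acceptor.initializations()); // 1
        pools.shutdown();
    }
}

// Each slot is a single-threaded executor: a board always runs on the same thread,
// while one thread serves several boards.
class ThreadPools {
    final ExecutorService[] network;
    final ExecutorService[] business;
    final ExecutorService init;

    ThreadPools(int networkThreads, int businessThreads, int initThreads) {
        network = singleThreaded(networkThreads);
        business = singleThreaded(businessThreads);
        init = Executors.newFixedThreadPool(initThreads);
    }

    private static ExecutorService[] singleThreaded(int n) {
        ExecutorService[] slots = new ExecutorService[n];
        for (int i = 0; i < n; i++) slots[i] = Executors.newSingleThreadExecutor();
        return slots;
    }

    static ExecutorService pick(ExecutorService[] slots, String boardId) {
        return slots[Math.floorMod(boardId.hashCode(), slots.length)];
    }

    void shutdown() {
        for (ExecutorService e : network) e.shutdown();
        for (ExecutorService e : business) e.shutdown();
        init.shutdown();
    }
}

// System data of a board state: the threads the board is mapped to.
final class BoardState {
    final String id;
    final ExecutorService network;
    final ExecutorService business;

    BoardState(String id, ExecutorService network, ExecutorService business) {
        this.id = id;
        this.network = network;
        this.business = business;
    }
}

class Acceptor {
    private final ThreadPools pools;
    private final Map<String, CompletableFuture<BoardState>> states = new ConcurrentHashMap<>();
    private final AtomicInteger initializations = new AtomicInteger();

    Acceptor(ThreadPools pools) {
        this.pools = pools;
    }

    // Acceptor thread: never builds the state itself, only schedules it once per board.
    CompletableFuture<BoardState> accept(String boardId) {
        return states.computeIfAbsent(boardId,
            id -> CompletableFuture.supplyAsync(() -> initialize(id), pools.init));
    }

    // Initialization thread: slow loading here never blocks business threads.
    private BoardState initialize(String id) {
        initializations.incrementAndGet();
        return new BoardState(id, ThreadPools.pick(pools.network, id), ThreadPools.pick(pools.business, id));
    }

    int initializations() {
        return initializations.get();
    }
}
```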

Coloring the threads

The subsystem described above is nontrivial to implement. Developers have to keep the whole model of relationships between threads in their heads and account for the reverse process of closing whiteboards. When a whiteboard is closed, all subscriptions have to be removed and the registry entries deleted, in the right order and in the same threads where they were originally initialized.
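One way to keep the reverse order and the original threads straight is to record, at setup time, which executor performed each step, and then replay the undo actions last-in-first-out on those same executors. This is an illustrative sketch only; `BoardLifecycle`, `register`, and `close` are hypothetical names, not part of our codebase.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class TeardownDemo {
    public static void main(String[] args) {
        ExecutorService network = Executors.newSingleThreadExecutor(r -> new Thread(r, "network"));
        ExecutorService business = Executors.newSingleThreadExecutor(r -> new Thread(r, "business"));
        List<String> log = Collections.synchronizedList(new ArrayList<>());
        BoardLifecycle board = new BoardLifecycle();
        // Setup order: subscribe on the network thread, then register on the business thread.
        board.register(network, () -> log.add("unsubscribe on " + Thread.currentThread().getName()));
        board.register(business, () -> log.add("unregister on " + Thread.currentThread().getName()));
        board.close();
        System.out.println(log); // [unregister on business, unsubscribe on network]
        network.shutdown();
        business.shutdown();
    }
}

class BoardLifecycle {
    private static final class Cleanup {
        final Executor executor;
        final Runnable action;

        Cleanup(Executor executor, Runnable action) {
            this.executor = executor;
            this.action = action;
        }
    }

    private final Deque<Cleanup> cleanups = new ArrayDeque<>();

    // Call right after a subscription or registry entry is created on `executor`.
    synchronized void register(Executor executor, Runnable undo) {
        cleanups.push(new Cleanup(executor, undo));
    }

    // Undoes registrations last-in-first-out; each step starts only after the
    // previous one finished and runs on the executor where its setup happened.
    void close() {
        CompletableFuture<Void> chain = CompletableFuture.completedFuture(null);
        Cleanup step;
        while ((step = poll()) != null) {
            chain = chain.thenRunAsync(step.action, step.executor);
        }
        chain.join();
    }

    private synchronized Cleanup poll() {
        return cleanups.poll();
    }
}
```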

We noticed that bugs in this subsystem, and difficulties in modifying its code, were often caused by a misunderstanding of the processing context. Juggling threads and tasks made it hard to answer a simple question: in which thread is this piece of code running?

To solve this problem, we used a method of “coloring” threads: a policy that regulates how threads are used in the system. Threads are assigned colors, and methods declare in which colored threads they may execute. The color is just an abstraction; it could be another entity, such as an enumeration. In Java, annotations can serve as a color markup language:

- `@Color`
- `@IncompatibleColors`
- `@AnyColor`
- `@Grant`
- `@Revoke`

Annotations are applied to a method and define whether a call to it is valid. For example, if a method's annotation allows the yellow and red colors, a yellow thread can call that method, while an attempt to call it from a thread of any other color results in an error.
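A minimal runtime version of that check might look like this. Everything here is a hypothetical sketch: the `ThreadColor` enum, the shape of the `@Color` annotation, and the `ThreadColors` helper that keeps each thread's colors in a `ThreadLocal`. In our system the check is performed by an aspect rather than called by hand.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.EnumSet;

class ColorDemo {
    public static void main(String[] args) throws Exception {
        Method method = Board.class.getDeclaredMethod("applyCommand");
        ThreadColors.paint(ThreadColor.YELLOW);              // the main thread is yellow
        System.out.println(ThreadColors.matches(method));    // true
        Thread blue = new Thread(() -> {
            ThreadColors.paint(ThreadColor.BLUE);            // a second, blue thread
            System.out.println(ThreadColors.matches(method)); // false
        });
        blue.start();
        blue.join();
    }
}

// Hypothetical colors; the article notes a color could simply be an enumeration.
enum ThreadColor { YELLOW, RED, BLUE }

// Hypothetical annotation: the method may run only in threads having one of these colors.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Color {
    ThreadColor[] value();
}

final class ThreadColors {
    // Each thread carries its own color set, empty until the thread is painted.
    private static final ThreadLocal<EnumSet<ThreadColor>> COLORS =
        ThreadLocal.withInitial(() -> EnumSet.noneOf(ThreadColor.class));

    // Meant to be called once when a thread starts, e.g. from its ThreadFactory.
    static void paint(ThreadColor... colors) {
        for (ThreadColor c : colors) COLORS.get().add(c);
    }

    // True if the method is unannotated or allows at least one color of the current thread.
    static boolean matches(Method method) {
        Color color = method.getAnnotation(Color.class);
        if (color == null) return true;
        for (ThreadColor allowed : color.value()) {
            if (COLORS.get().contains(allowed)) return true;
        }
        return false;
    }
}

class Board {
    @Color({ThreadColor.YELLOW, ThreadColor.RED})
    void applyCommand() { /* business logic */ }
}
```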

We can also specify unacceptable colors with `@IncompatibleColors`.
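For illustration, a sketch of the inverse rule: the call is rejected as soon as the thread carries any forbidden color. The `ThreadColor` enum, the annotation's shape, and the `ThreadColors.allowed` helper are hypothetical.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.EnumSet;

class IncompatibleColorsDemo {
    public static void main(String[] args) throws Exception {
        Method method = Board.class.getDeclaredMethod("render");
        ThreadColors.paint(ThreadColor.YELLOW);
        System.out.println(ThreadColors.allowed(method)); // true: yellow is not forbidden
        Thread mixed = new Thread(() -> {
            ThreadColors.paint(ThreadColor.YELLOW, ThreadColor.BLUE);
            System.out.println(ThreadColors.allowed(method)); // false: blue is forbidden
        });
        mixed.start();
        mixed.join();
    }
}

enum ThreadColor { YELLOW, RED, BLUE }

// Hypothetical annotation: the method must not run in a thread having any of these colors.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface IncompatibleColors {
    ThreadColor[] value();
}

final class ThreadColors {
    private static final ThreadLocal<EnumSet<ThreadColor>> COLORS =
        ThreadLocal.withInitial(() -> EnumSet.noneOf(ThreadColor.class));

    static void paint(ThreadColor... colors) {
        for (ThreadColor c : colors) COLORS.get().add(c);
    }

    // One forbidden color on the current thread is enough to reject the call.
    static boolean allowed(Method method) {
        IncompatibleColors banned = method.getAnnotation(IncompatibleColors.class);
        if (banned == null) return true;
        for (ThreadColor c : banned.value()) {
            if (COLORS.get().contains(c)) return false;
        }
        return true;
    }
}

class Board {
    @IncompatibleColors(ThreadColor.BLUE)
    void render() { /* ... */ }
}
```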

Thread privileges can be added and removed dynamically with `@Grant` and `@Revoke`.
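One possible semantics, sketched under an assumption the article does not spell out: `@Grant` and `@Revoke` change the calling thread's colors only for the duration of the annotated call, and the previous set is restored afterwards. The `callWith` helper plays the role an around advice would play; all names are hypothetical.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.Arrays;
import java.util.EnumSet;

class GrantRevokeDemo {
    public static void main(String[] args) throws Exception {
        Board board = new Board();
        ThreadColors.paint(ThreadColor.YELLOW);
        ThreadColors.callWith(Board.class.getDeclaredMethod("migrate"), board::migrate);
        ThreadColors.callWith(Board.class.getDeclaredMethod("runPlugin"), board::runPlugin);
        System.out.println("after: red=" + ThreadColors.has(ThreadColor.RED)
            + ", yellow=" + ThreadColors.has(ThreadColor.YELLOW)); // after: red=false, yellow=true
    }
}

enum ThreadColor { YELLOW, RED, BLUE }

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Grant {
    ThreadColor[] value();
}

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Revoke {
    ThreadColor[] value();
}

final class ThreadColors {
    private static final ThreadLocal<EnumSet<ThreadColor>> COLORS =
        ThreadLocal.withInitial(() -> EnumSet.noneOf(ThreadColor.class));

    static void paint(ThreadColor... colors) {
        COLORS.get().addAll(Arrays.asList(colors));
    }

    static boolean has(ThreadColor color) {
        return COLORS.get().contains(color);
    }

    // Adjusts the current thread's colors for the duration of the call and
    // restores the previous set afterwards, even if the body throws.
    static void callWith(Method method, Runnable body) {
        EnumSet<ThreadColor> saved = EnumSet.copyOf(COLORS.get());
        Grant grant = method.getAnnotation(Grant.class);
        Revoke revoke = method.getAnnotation(Revoke.class);
        if (grant != null) COLORS.get().addAll(Arrays.asList(grant.value()));
        if (revoke != null) COLORS.get().removeAll(Arrays.asList(revoke.value()));
        try {
            body.run();
        } finally {
            COLORS.set(saved);
        }
    }
}

class Board {
    @Grant(ThreadColor.RED)
    void migrate() {
        System.out.println("inside migrate: red=" + ThreadColors.has(ThreadColor.RED)); // true
    }

    @Revoke(ThreadColor.YELLOW)
    void runPlugin() {
        System.out.println("inside runPlugin: yellow=" + ThreadColors.has(ThreadColor.YELLOW)); // false
    }
}
```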

A method with no annotation, or one annotated with `@AnyColor`, can be executed in any thread.
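A sketch of how a checker might treat these two cases, assuming `@AnyColor` is a marker annotation that takes precedence over any other color annotation. The names `ColorPolicy` and `Util` are hypothetical, and the thread's colors are passed in explicitly to keep the example short.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.EnumSet;
import java.util.Set;

class AnyColorDemo {
    public static void main(String[] args) throws Exception {
        Set<ThreadColor> uncolored = EnumSet.noneOf(ThreadColor.class);
        for (String name : new String[] {"log", "format", "applyCommand"}) {
            Method method = Util.class.getDeclaredMethod(name);
            System.out.println(name + ": " + ColorPolicy.allows(method, uncolored));
        }
        // log: true, format: true, applyCommand: false
    }
}

enum ThreadColor { YELLOW, RED }

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Color {
    ThreadColor[] value();
}

// Marker annotation: states explicitly that any thread may call the method.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface AnyColor {}

final class ColorPolicy {
    static boolean allows(Method method, Set<ThreadColor> threadColors) {
        if (method.isAnnotationPresent(AnyColor.class)) return true; // explicit "any thread"
        Color color = method.getAnnotation(Color.class);
        if (color == null) return true;                              // no annotation: any thread
        for (ThreadColor c : color.value()) {
            if (threadColors.contains(c)) return true;
        }
        return false;
    }
}

class Util {
    @AnyColor
    static void log() {}

    static void format() {}

    @Color(ThreadColor.YELLOW)
    static void applyCommand() {}
}
```

The explicit marker and the missing annotation behave the same at runtime; the marker simply documents that the choice was deliberate.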

Android developers may be familiar with this approach from the `@MainThread`, `@UiThread`, `@WorkerThread`, and similar annotations.

Thread coloring follows the principle of self-documenting code, and the approach lends itself well to static analysis, which can check whether the code is correct before it is executed. If we exclude the `@Grant` and `@Revoke` annotations and assume that a thread has an immutable set of privileges from the moment it is initialized, the check becomes a flow-insensitive analysis: a simple form of static analysis that does not take the call order into account.
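To make the flow-insensitive idea concrete, here is a toy checker. It assumes a call graph is already available (built by hand here; a real tool would extract it from source or bytecode) and that each thread starts at one entry method with a fixed set of colors. Because call order is ignored, a method counts as reachable from a thread if any chain of calls leads to it. `ColorAnalysis` and its methods are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class ColorAnalysisDemo {
    public static void main(String[] args) {
        ColorAnalysis analysis = new ColorAnalysis();
        analysis.annotate("applyCommand", Set.of("business"));
        analysis.call("onPacket", "parse");
        analysis.call("onPacket", "applyCommand"); // bug: business logic reached from the network thread
        analysis.call("applyCommand", "render");
        analysis.thread("network", Set.of("network"), "onPacket");
        analysis.thread("business", Set.of("business"), "applyCommand");
        System.out.println(analysis.violations()); // [network -> applyCommand]
    }
}

class ColorAnalysis {
    private final Map<String, Set<String>> allowedColors = new HashMap<>();
    private final Map<String, Set<String>> callees = new HashMap<>();
    private final Map<String, Set<String>> threadColors = new LinkedHashMap<>();
    private final Map<String, String> threadEntry = new HashMap<>();

    void annotate(String method, Set<String> colors) {
        allowedColors.put(method, colors);
    }

    void call(String caller, String callee) {
        callees.computeIfAbsent(caller, k -> new HashSet<>()).add(callee);
    }

    void thread(String name, Set<String> colors, String entryMethod) {
        threadColors.put(name, colors);
        threadEntry.put(name, entryMethod);
    }

    // For every thread, collects all methods reachable from its entry point and reports
    // annotated methods whose allowed colors do not intersect the thread's colors.
    List<String> violations() {
        List<String> result = new ArrayList<>();
        for (Map.Entry<String, Set<String>> t : threadColors.entrySet()) {
            Set<String> reachable = new TreeSet<>(); // sorted for deterministic output
            Deque<String> work = new ArrayDeque<>(List.of(threadEntry.get(t.getKey())));
            while (!work.isEmpty()) {
                String m = work.pop();
                if (reachable.add(m)) work.addAll(callees.getOrDefault(m, Set.of()));
            }
            for (String m : reachable) {
                Set<String> allowed = allowedColors.get(m);
                if (allowed != null && Collections.disjoint(allowed, t.getValue())) {
                    result.add(t.getKey() + " -> " + m);
                }
            }
        }
        return result;
    }
}
```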

When we implemented thread coloring, we had no ready-made static analysis solution in our DevOps infrastructure, so we took a simpler and cheaper path: we created our own annotations, each uniquely associated with a thread type, and started using aspects to check them at runtime.

```java
import java.lang.reflect.Method;

import org.aspectj.lang.ProceedingJoinPoint;
import org.aspectj.lang.annotation.Around;
import org.aspectj.lang.annotation.Aspect;
import org.aspectj.lang.annotation.Pointcut;
import org.aspectj.lang.reflect.MethodSignature;

@Aspect
public class ThreadAnnotationAspect {

    @Pointcut("if()")
    public static boolean isActive() {
        ... // Here we check flags that determine whether aspects are enabled or not. This is used, for instance, in a couple of tests.
    }

    // Matches the execution of any method annotated with @ThreadAnnotation.
    @Pointcut("execution(@ThreadAnnotation * *.*(..))")
    public static void annotatedMethod() {
    }

    @Around("isActive() && annotatedMethod()")
    public Object around(ProceedingJoinPoint joinPoint) throws Throwable {
        Thread thread = Thread.currentThread();
        Method method = ((MethodSignature) joinPoint.getSignature()).getMethod();
        // getThreadAnnotation and annotationMatches are helpers not shown in this snippet.
        ThreadAnnotation annotation = getThreadAnnotation(method);
        if (!annotationMatches(annotation, thread)) {
            throw new ThreadAnnotationMismatchException(method, thread);
        }
        return joinPoint.proceed();
    }
}
```

For aspects, we use AspectJ and a Maven plugin that performs weaving at compile time. Initially, we configured weaving at load time, when the ClassLoader loads the classes. However, we found that the weaver sometimes misbehaved when the same class was loaded concurrently, leaving the original bytecode of the class unchanged. This led to very unpredictable, hard-to-reproduce behavior in the production environment. Current versions of AspectJ may no longer have this problem.
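For reference, a compile-time weaving setup with Maven might look like the following `pom.xml` fragment. It is a sketch rather than our build file: it assumes the `org.codehaus.mojo:aspectj-maven-plugin`, and the versions and `complianceLevel` shown are examples to adjust for your JDK.

```xml
<dependencies>
  <!-- Runtime classes referenced by woven code (join points, annotations). -->
  <dependency>
    <groupId>org.aspectj</groupId>
    <artifactId>aspectjrt</artifactId>
    <version>1.9.7</version>
  </dependency>
</dependencies>

<build>
  <plugins>
    <plugin>
      <groupId>org.codehaus.mojo</groupId>
      <artifactId>aspectj-maven-plugin</artifactId>
      <version>1.14.0</version>
      <configuration>
        <complianceLevel>11</complianceLevel>
      </configuration>
      <executions>
        <execution>
          <goals>
            <!-- Weave aspects into main and test classes at build time, not at class-load time. -->
            <goal>compile</goal>
            <goal>test-compile</goal>
          </goals>
        </execution>
      </executions>
    </plugin>
  </plugins>
</build>
```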

Using aspects allowed us to quickly find most of the problems in the code.

It is important to keep the annotations up to date. If they get deleted, if people are too lazy to add them, or if aspect weaving is turned off altogether, thread coloring quickly loses its relevance and value.

GuardedBy

One kind of coloring is the `@GuardedBy` annotation from `javax.annotation.concurrent` (JSR 305; it originated in the Java Concurrency in Practice annotations, `net.jcip.annotations`). It restricts access to fields and methods by specifying which lock must be held for correct access.

```java
import javax.annotation.concurrent.GuardedBy;

public class PrivateLock {
    private final Object lock = new Object();

    @GuardedBy("lock")
    Widget widget;

    void method() {
        synchronized (lock) {
            // Access or modify the state of widget
        }
    }
}
```

Modern IDEs even support analysis of this annotation. For instance, IntelliJ IDEA warns when a field annotated with `@GuardedBy` is accessed without holding the specified lock.
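`@GuardedBy` is only checked by static tools. For a runtime safety net in the spirit of the aspect above, `Thread.holdsLock` can assert the same contract at the point of access. A minimal sketch: `GuardedCounter` is a hypothetical class, and the annotation is left as a comment so the example compiles without the JSR 305 jar.

```java
class GuardedCounterDemo {
    public static void main(String[] args) {
        GuardedCounter counter = new GuardedCounter();
        counter.increment();               // takes the lock, passes the check
        System.out.println(counter.get()); // 1
        try {
            counter.incrementUnlocked();   // forgets the lock
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage()); // count accessed without holding lock
        }
    }
}

class GuardedCounter {
    private final Object lock = new Object();

    // @GuardedBy("lock") in real code.
    private int count;

    void increment() {
        synchronized (lock) {
            incrementLocked();
        }
    }

    // Deliberately broken caller, to show the check firing.
    void incrementUnlocked() {
        incrementLocked();
    }

    int get() {
        synchronized (lock) {
            return count;
        }
    }

    // Runtime counterpart of @GuardedBy("lock"): fail fast if the caller forgot the lock.
    private void incrementLocked() {
        if (!Thread.holdsLock(lock)) {
            throw new IllegalStateException("count accessed without holding lock");
        }
        count++;
    }
}
```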

The method of coloring threads is not new, but in languages such as Java, where multiple threads often access mutable objects, using it not only in documentation but also at compile and build time could greatly simplify the development of multithreaded code.

We still use the aspect-based implementation of coloring. If you know a more elegant solution, or an analysis tool that would make this approach more robust to system changes, please feel free to share it.

P.S. This article was first published on Medium.