Introduction

Our project implements a real-time edge detection system based on capturing image frames from an OV7670 camera and streaming them to a VGA monitor after applying a grayscale filter and Sobel operator. Our design is built on a Cyclone IV FPGA board which enables us to optimize the performance using the powerful features of the low-level hardware and parallel computations which is important to meet the requirements of the real-time system.

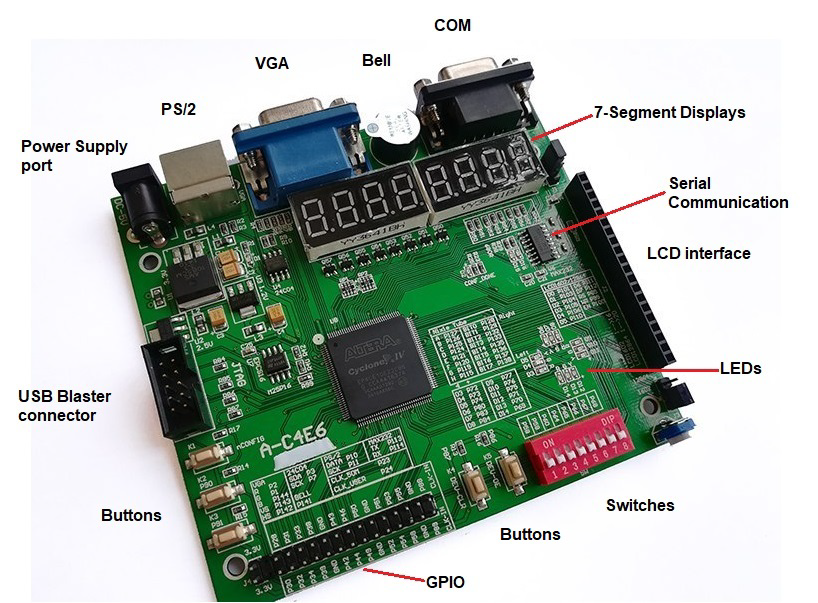

We used ZEOWAA FPGA development board which is based on Cyclone IV (EP4CE6E22C8N). Also, we used Quartus Prime Lite Edition as a development environment and Verilog HDL as a programming language. In addition, we used the built-in VGA interface to drive the VGA monitor, and GPIO (General Pins for Input and Output) to connect the external hardware with our board.

Architecture

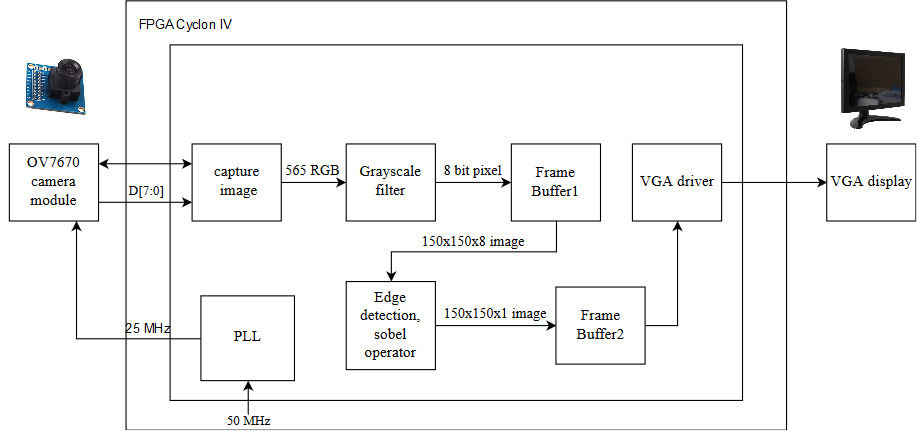

Our design is divided into 3 main parts:

- Reading the data pixels from the camera.

- Implementing our edge detection algorithm (grayscale converter and Sobel operator).

- Displaying the final image by interfacing with a VGA monitor.

Also, there is an intermediate memory storage between reading/writing the data and operating on this data. For this purpose, we implemented two buffers that work as temporary space for pixels before they are used.

Note that after we took the pixel from the camera, we did not store it directly into the intermediate memory buffer. Instead, we converted it to the grayscale then we stored it in the buffer. This is because storing 8-bit grayscale pixels takes less memory than storing the colored pixels which are 16-bits. Also, we have another buffer which stores the data after applying the Sobel operator to make them ready to be displayed on the monitor.

Here are the details about the implementation of our architecture:

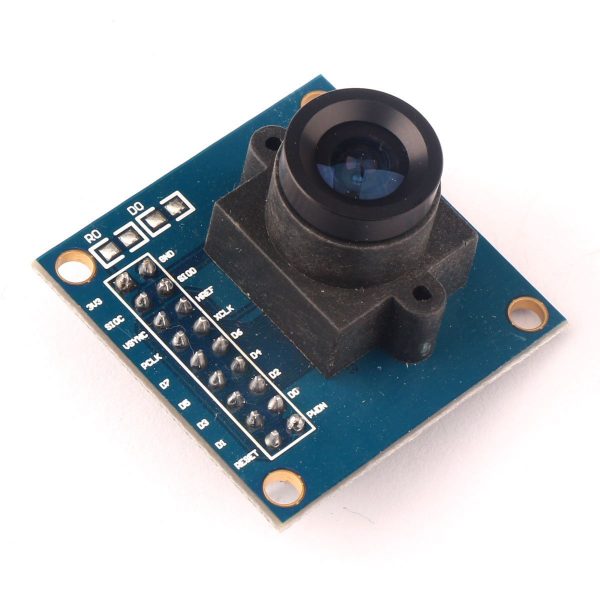

Camera

We used OV7670 camera which is one of the cheapest camera modules that we found. Also, this camera can work on 3.3V and does not need difficult communication protocols like I2c or SPI to extract the data of the image. It only requires SCCB interface which is similar to I2c interface to set the configuration of the camera in terms of color format (RGB565, RGB555, YUV, YCbCr 4:2:2), resolution (VGA, QVGA, QQVGA, CIF, QCIF) and many others settings.

The video consists of frames which are being changed at a specific rate. One frame is an image consisting of rows and columns of pixels where each pixel is represented by color values. In this project, we used the default configuration of the camera where the frame's size is the VGA resolution 640 x 480 (0.3 Megapixels), and the pixel's color format is RGB565 (5 bits for Red, 6 bits for Blue, 5 bits for Green) and the rate of changing the frames is 30 fps.

In below, the connections of the camera to the FPGA using the GPIO which exists in the development board:

| Pin in the camera | pin in the FPGA | Description | Pin in the camera | pin in the FPGA | Description |

|---|---|---|---|---|---|

| 3.3V | 3.3V | Power Supply (+) | GND | GND | Ground Supply Level (-) |

| SDIOC | GND | SCCB clock | SDIOD | GND | SCCB Data |

| VSYNC | P31 | Vertical synchronization | HREF | P55 | Horizontal Synchronization |

| PCLK | P23 | Pixel's clock | XCLK | P54 | Input System clock (25 MHz) |

| D7 | P46 | 8th bit of data | D6 | P44 | 7th bit of data |

| D5 | P43 | 6th bit of data | D4 | P42 | 5th bit of data |

| D3 | P39 | 4th bit of data | D2 | P38 | 3rd bit of data |

| D1 | P34 | 2nd bit of data | D0 | P33 | 1st bit of data |

| RESET (Active Low) | 3.3V | Reset pin | PWDN | GND | Power Down pin |

Note that we did not use SCCB interface for configuration. So, we put their corresponding wires on the ground to prevent any floating signals that can affect the data.

To provide the 25MHz clock for the camera we used Phase-Locked Loop (PLL) which is a closed-loop frequency-control system to provide the needed clock from the 50MHz provided from the board. To implement the PLL, we used the internal IP catalog tool inside Quartus software.

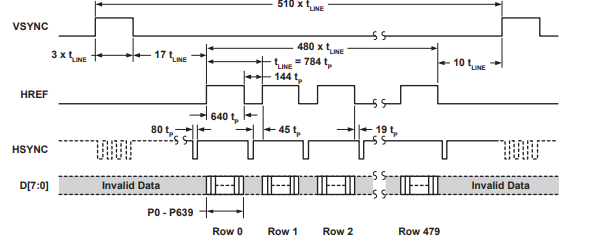

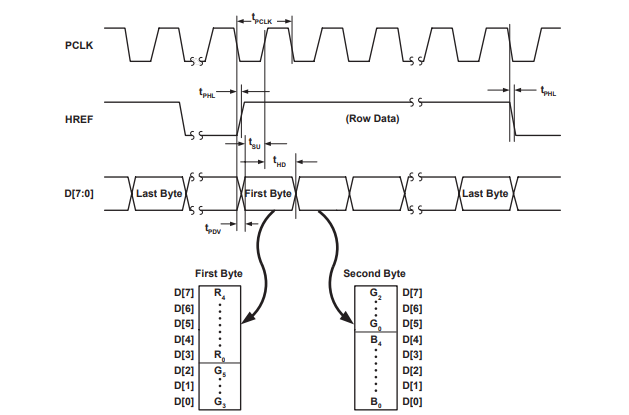

This camera uses vertical synchronization (VSYNC) signal to control the sending process of the frame and the horizontal synchronization (HREF) signal to control the sending of each row of the frame. This camera uses only 8 lines of data (D0-D7) to transfer the bits which represent the pixel's color values as the camera divides the 16-bit RGB pixel value into 2 (8-bit) parts and send each one separately.

The below figures from the datasheet of OV7670 camera module illustrate the signals of vertical and horizontal synchronization.

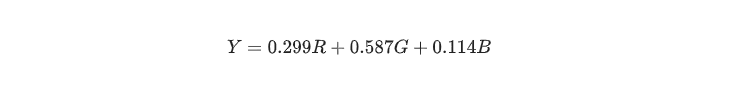

Grayscale converter

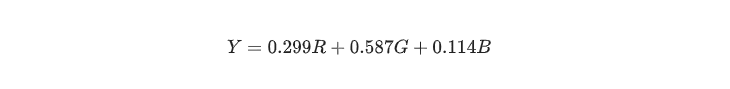

To produce a grayscale image from its original colored image, many factors should be taken into consideration, because the image may lose contrast, sharpness, shadow, and structure. Moreover, the image should preserve the relative luminance of the color space. Several linear and non-linear techniques are used for converting the color image to grayscale. Accordingly, to achieve our objective we used the colorimetric (perceptual luminance-preserving) conversion to grayscale represented in the following equation:

To enhance the performance in terms of computations, it is faster to use the shift operator. Hence, the equation above can be reduced to the following:

As a result, after capturing a (565 RGB) pixel value from the camera, it can be immediately converted into an 8-bit grayscale pixel value applying the formula of conversion. The grayscaled image is easier to store in the memory and fast enough to serve the functionality of our real-time system as its complexity is approximately logarithmic and FPGA can make it even faster by accessing the memory in parallel. After that, the stored image is ready for implementing the edge detection algorithm.

Intermediate memory (The buffer)

We have 2 buffers, the first one is used to store the pixels after converting them to grayscale and its size (8-bits x 150 x 150) and the second one is used to store the pixels after applying Sobel operator and the threshold for the output value and its size (1-bit x 150 x 150). Unfortunately, 150 x 150 buffers do not store the whole image from the camera but stores only part of it.

We have chosen our buffers’ size as 150 x 150 because of the limitation of cyclone IV memory as it only has 276.480 Kbit while our two buffers take 202.500 Kbit (150 x 150 x 9) which is equivalent to 73.24% from the original memory of cyclone IV and the rest of the memory is used for storing the algorithm and the architecture. Furthermore, we tried (170 x 170) as a size for our buffers which takes 94.07% from the memory which does not leave enough space implementing the algorithm.

Our buffers are True Dual-port RAM which can read and write in different clock cycles simultaneously. Here, we created our implementation instead of using the IP catalog tool inside Quartus software to have more flexibility in the implementation. Also, we integrated both buffers in only one module instead of having different modules.

Sobel operator

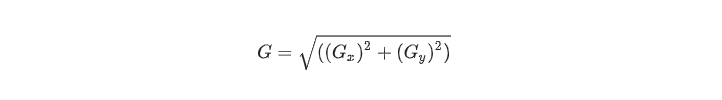

We used a first derivative edge detection operator which is a matrix area gradient operator that determines the change of luminance between different pixels. To be more precise, as it is a straightforward and efficient method in terms of memory usage and time complexity, we used Sobel gradient operator that uses 3x3 kernel centered on a chosen pixel to represent the strength of the edge. The Sobel operator is the magnitude of the gradient computed by:

Where Gx and Gy can be represented using convolution masks:

Note that the pixels that are closer to the center of the mask are given more weight. Also, Gx and Gy can be calculated as follows:

Where pi is the corresponding pixel in the following array, and the value of pi is 8-bit grayscale value:

It is a common practice to approximate the gradient magnitude of Sobel operator by absolute values:

This approximation is easier to implement and faster to calculate which again serves our functionality in terms of time and memory.

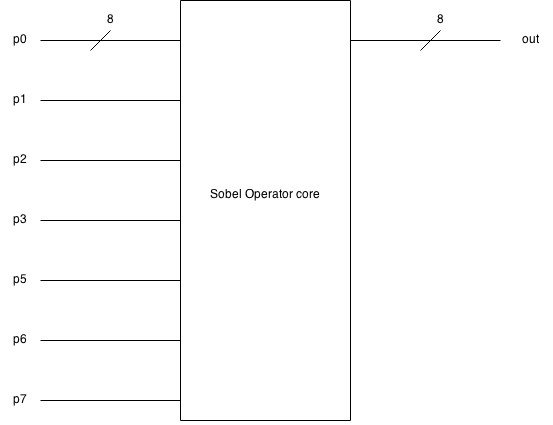

Here is the block diagram of Sobel operator which takes 9 (8-bit) pixels as input and produces (8 bit) pixel value:

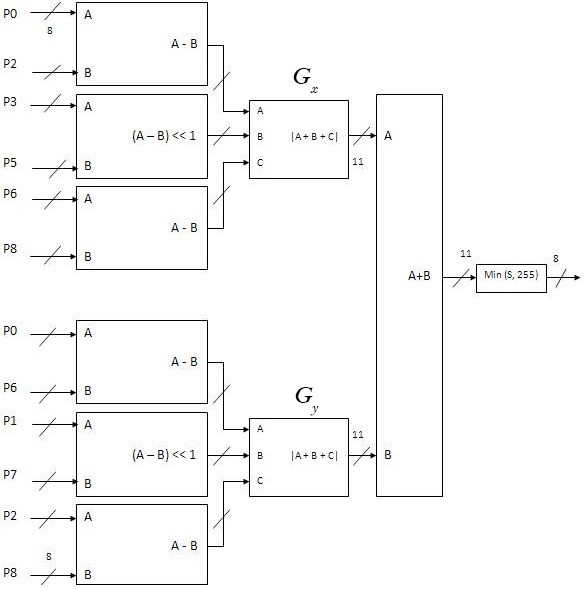

And here is the detailed block diagram of the Sobel operator implementation.

VGA monitor

Our development board has a built-in VGA interface which has the capability to display only 8 colors on the VGA monitor as it has only 3-bits to control the colors through one bit for Red, one for Green and one for Blue. This has made our debugging harder as it prevents us to display the image from the camera directly to the monitor. So, we used a threshold to convert the pixels into 1-bit value so it is possible to display the image.

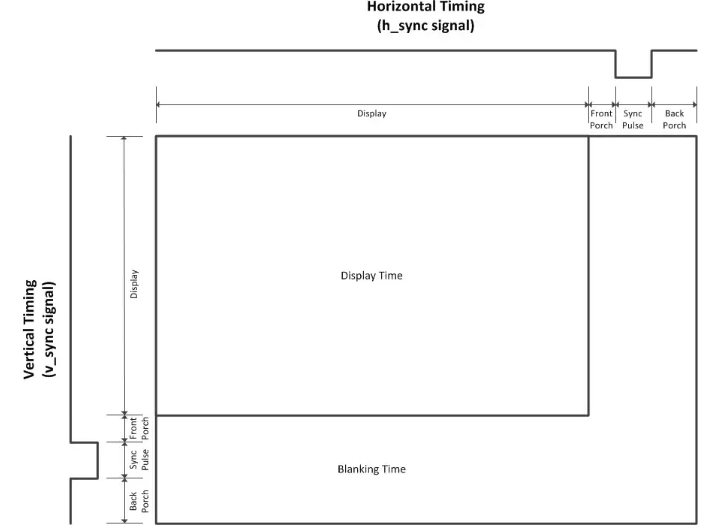

The VGA interface works like the camera as it operates pixel by pixel from the upper-left corner to the lower-right corner. Using the vertical and horizontal synchronization, we can synchronize the signals that control the flow of pixels.

The vertical synchronization signal is used to represent the index of the row while the horizontal synchronization signal is used to represent the index of the column. Also, both signals use front porch, sync pulse and back porch as synchronization signals to separate the old row from the new row in the horizontal synchronization signal, and the old frame from the new frame in the vertical synchronization signal.

We used the standard VGA signal interface (640 x 480 @60 MHz). All the standard specifications of the signal is described here.

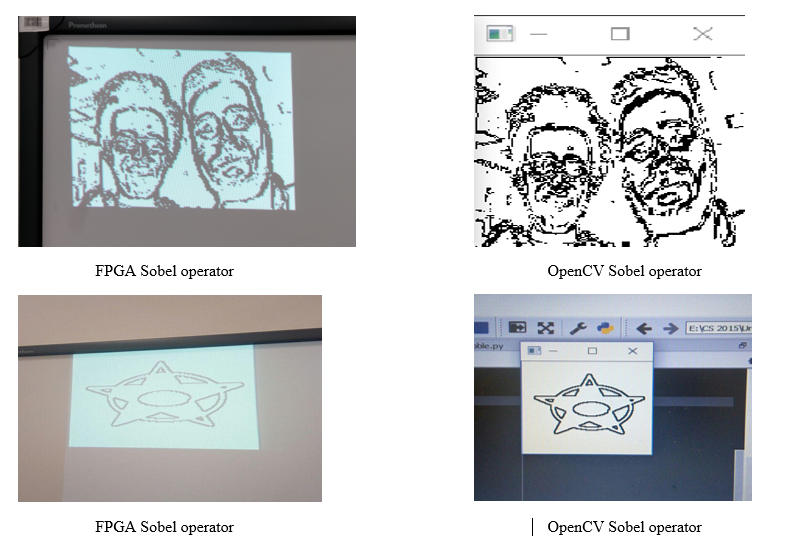

Testing

Before putting everything together and testing the real-time system. We first had to test each part separately. At first, we checked the values and signals that come from the camera by displaying certain pixel values. Then, with the help of OpenCV using Python programming language, we were able to apply Sobel filter on several images to compare the results with our algorithm and check the correctness of our logic. Moreover, we tested our buffers and VGA driver by displaying several static images on the VGA monitor after applying Sobel operator and thresholding. Furthermore, by changing the value of the threshold, the accuracy of the image is affected.

The python code which we used:

# This code is made to test the accuracy of our algorithm on FPGA

import cv2 #import opencv library

f = open("sample.txt",'w') # Open file to write on it the static image initialization lines

img = cv2.imread('us.jpg') # Read the image which has our faces and its size 150x150

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY) #convert to grayscale

sobelx = cv2.Sobel(gray,cv2.CV_64F,1,0,ksize=3) #x-axis sobel operator

sobely = cv2.Sobel(gray,cv2.CV_64F,0,1,ksize=3) #y-axis sobel operator

abs_grad_x = cv2.convertScaleAbs(sobelx)

abs_grad_y = cv2.convertScaleAbs(sobely)

grad = abs_grad_x + abs_grad_y

for i in range(0,150):

for x in range(0,150):

#read the pixels of the grayscaled image and Store them into file with specific format to initialize the buffer in FPGA code

f.write("data_a[{:d}]<=8'd{:d};\n".format(i*150+x,gray[i][x]))

#apply threshold to be exactly like the code on FPGA

if(grad[i][x] < 100):

grad[i][x] = 255

else:

grad[i][x] = 0

cv2.imshow("rgb", img) #Show the real img

cv2.imshow("gray",gray) #Show the grayscale img

cv2.imshow("sobel",grad)#Show the result img

cv2.waitKey(0) #Stop the img to see itResults

As a result of our implementation, we got a real-time edge detection system that produces a 150x150 image after applying the grayscale filter and Sobel operator. The implemented system provides 30 fps. The camera runs on a 25MHz clock and the system, in general, meets real-time deadlines without noticeable lag. Moreover, the threshold value can affect the amount of details and the noise in the final image.

Here is a comparison between Sobel operator on FPGA and OpenCV sobel operator:

Below is an illustrative video of the results:

Here is the link of the repository on Github which has all the source codes.

Future improvements

As we are using FPGA Cyclone IV, we are limited to its memory capacity and the number of logic gates. Hence, as a future improvement, we can use an external memory source or we can implement our work on another board so we can display all the pixels from the image received from the camera.

Furthermore, although Sobel operator is fast and simple to implement, it is noticeably sensitive to noise. To eliminate the produced noise, we can use a noise filter like the non-linear median filter which works perfectly fine with our system if we had enough memory to implement a third buffer. This will produce a smoother image with sharp features removed.

Accordingly, we used the built-in VGA interface of the FPGA that can only produce a 3-bits image. Thus, we couldn’t display the grayscaled image as it needs 8 bits to be displayed. As a result, implementing another interface or using more powerful board will enhance the flexibility of displaying the image.

Conclusion

We were able to use our knowledge and understanding of crucial concepts in embedded systems as state-machines, computations parallelism, and hardware-software interfacing to create an efficient edge detection application that meets our objectives.

Acknowledgment

This project is built by a team consisting of two students: Hussein Youness and Hany Hamed in first year bachelor of Computer Science in Innopolis University in Russia.

This project is part of Computer Architecture course Fall 2018 in Innopolis University.

https://eu.mouser.com/ProductDetail/Intel-Altera/EP4CE6E22C8N?qs=jblrfmjbeiF2FLmcokX%252bDw%3D%3D

https://www.voti.nl/docs/OV7670.pdf

http://embeddedprogrammer.blogspot.com/2012/07/hacking-ov7670-camera-module-sccb-cheat.html

https://www.intel.com/content/www/us/en/programmable/support/support-resources/operation-and-testing/pll-and-clock-management/pll-basics.html

https://knowledge.ni.com/KnowledgeArticleDetails?id=kA00Z000000P9T3SAK

https://www.intel.com/content/dam/www/programmable/us/en/pdfs/literature/ug/ug_megafunction_overview.pdf

https://www.intel.com/content/dam/www/programmable/us/en/pdfs/literature/ug/ug_ram_rom.pdf

http://www.tannerhelland.com/3643/grayscale-image-algorithm-vb6/

http://www.cse.usf.edu/~r1k/MachineVisionBook/MachineVision.files/MachineVision_Chapter5.pdf

https://www.digikey.com/eewiki/pages/viewpage.action?pageId=15925278

http://tinyvga.com/vga-timing/640x480@60Hz

Комментарии (45)

firedragon

28.11.2018 13:21-1Вы про копипасту без перевода? Я кстати то-же не понял зачем на русскоязычном ресурсе, англоязычная статья. Хотя прочитал с удовольствием.

Exosphere

28.11.2018 13:56+1Друзья, firedragon webzuweb. Немного об английском на Хабре и почему всё так, можно почитать здесь.

johnfound

28.11.2018 17:00Интересно понял что-то не так в середине статьи. Смотрю, а она на английском. :D

Но претензии все-таки есть:

To provide the 25MHz clock for the camera we used Phase-Locked Loop (PLL) which is a closed-loop frequency-control system to provide the needed clock from the 50MHz provided from the board.

Чтобы сделать из 50МГц, 25МГц, не нужен PLL. Нужен просто делитель на 2 — простой триггер. Или "Over-engineering is our lifestyle"?

punzik

28.11.2018 17:50Делить клок — это плохая практика для ПЛИС. Если не хочется PLL, то можно строб каждый второй клок.

johnfound

28.11.2018 18:33Это сигнал, которой подается на внешную камеру. И конечно, решения можно всякие, но PLL всетаки перебор. ИМХО.

ilynxy

28.11.2018 19:48Всегда было интересно почему это плохая практика.

punzik

28.11.2018 20:11В основном потому, что такой клок страссируется не через специальную распределительную сеть, а через общую, как обычный сигнал (через множество коммутаторов и даже через ЛУТы). Это внесёт труднопрогнозируемую задержку и нарушения временных ограничений. Ну и трассировкой ресурс займёт. Поправьте меня, кто в теме.

eugenk

29.11.2018 10:19Я потому и говорю что глобальный буфер. А как он получен, PLL или простым делением, дело уже десятое.

hany606 Автор

28.11.2018 20:48+1Yes, there are a lot of simpler ways to do this as you said. But we were exploring the usage of PLL configuration tool that exists in IP catalog tool in Quartus Software. And I agree with you that this may seem to overkill the task and it is not the good practice to do it and in our future work, we will try to follow the good practices as we are still learning.

Thank you very much for this point.

I hope that I understand your comment correctly using Google translate.

xjesus666christx

28.11.2018 19:06За последний месяц это, наверное, третья статья на английском на Хабре. И все три про использование Cyclone IV. Как-то подозрительно

Exosphere

28.11.2018 19:34+2Если бы вы чуть внимательнее прочитали статьи, то заметили бы небольшую деталь — это статьи ребят из Иннополиса. Согласитесь, они пишут на хорошем английском на серьёзную тему — почему бы и нет? ;-)

geisha

29.11.2018 05:22Согласитесь, они пишут на хорошем английском на серьёзную тему — почему бы и нет? ;-)

Я не спец, но вместо скрипта на питоне в теме про FPGA, почему-то, ожидал, ну там, рулон кода на verilog или чем-то подобном. К английскому тоже есть вопросы, повторяться не буду. То, что молодцы — тоже спорить не буду: да, молодцы для первого курса.

Exosphere

29.11.2018 10:32

eugenk

29.11.2018 10:45Блин ну всё. Они меня уже достали. Нужно срочно разбавить русскоязычной статьёй! Вам интересен метод вычисления логарифма по основанию 2, не использующий CORDIC (а следовательно таблиц)? :))))

Exosphere

29.11.2018 10:48На самом деле лично мне интересны почти все статьи на Хабре. Могу с уверенностью сказать, что математические и околоматематические статьи интересны сообществу. Срочно пишите!

eugenk

29.11.2018 10:56+1Срочно не получится, через полчасика где-то отбываю на собеседование. Кстати интересный довольно проект плюс на удалёнке. Вернусь напишу. Мне в прошлом проекте это здорово помогло. Алгоритм я кстати сам придумал. Хотя скорее всего он уже известен, ибо слишком простой.

hany606 Автор

29.11.2018 17:05As I understand from the translated text. We wrote a python script to compare our results of work with OpenCV results to ensure the performance and the accuracy of our work. And all our codes of Verilog on Github and the link: https://github.com/hany606/Real-time-edge-detection-on-FPGA it is also included in the article.

Thank you very much.

eugenk

29.11.2018 10:37Если бы вы чуть внимательнее прочитали статьи, то заметили бы небольшую деталь — это статьи ребят из Иннополиса.

Простите, не понял. У них что, в план учебного процесса входит публикация на хабре на английском языке ??? Забавно кстати что и отвечает автор тоже по-английски. Нам в своё время лекции по химтермодинамике читали на английском и семинары по ней тоже вели на английском. Народ сначала возмущался, но потом привыкли, и даже нравилось. Правда было это почти 40 лет назад. Рад если сейчас где-то так же делают.

eugenk

29.11.2018 10:24Скорей забавно :))

Сам собирался в этом хабе кое что публикнуть, теперь вот думаю, на каком языке это делать. Тем более речь всё о том же 4-м циклоне :)

AngReload

29.11.2018 08:55

0serg

29.11.2018 10:41Правильные коэффициенты вообще говоря зависят от используемой камеры, т.к. не все камеры выдают результат в sRGB. Конкретно OV7670 не выдает, более того — там встроен DSP и для него вручную задается матрица преобразования цвета (регистры 4F-54) так что при желании можно выдавать результат как в NTSC RGB так и вообще сделать чтобы в одном из цветовых каналов сразу выдавалась grayscale яркость. Но это в любом случае требует калибровки и, имхо, для данной работы бессмысленно.

Кроме того у тех камер что все же выдают результат в sRGB по хорошему нужно бы перед этим компенсировать гамму, т.к. sRGB нелинейное пространство.

AngReload

29.11.2018 12:04+2Я понимаю что это ничего не поменяет, просто не нравится очередное упоминание устаревшей формулы. Там вообще используются сдвиги, так что реально коэффициенты совсем другие:

R>>2 + R>>5 // 0.28125 G>>1 + G>>4 // 0.56250 B>>4 + B>>5 // 0.09375

hany606 Автор

29.11.2018 17:09Thank you very much for these information. Our team will read this and try to find out the best values for the project.

pulsatrix

29.11.2018 13:16Привет пиплы, извиняюсь если не в тему вопрос. Можно ли в реальном времени оцифровывать комнату 3x3x3 метра во что-то наподобие минекрафта с разрешением 10х10х10? На каком железе реально fps ~15-25?

Hi people, I apologize if not the topic of the question. Is it possible in real time to digitize a room 3x3x3 meters into something like a minicraft with a resolution of 10x10x10? What hardware really fps ~ 15-25?

ajratr

Подскажите пожалуйста кто нибудь, какая практическая польза? Для чего это вообще надо, где используется?

Exeland

Компьютерное зрение.

0serg

Столь простая задача в CV сама по себе бесполезна.

Ну и разрешение 150x150 @ 30fps мягко говоря не требует FPGA :)

Эта статья — обычная студенческая курсовая работа, по сути.

hany606 Автор

Yes, it is indeed a simple CV task and this is a just simple model for implementing the edge detection algorithm and in future, we hope to enhance in it using a different camera and different techniques. And yes, as you said it is just our course project as we mentioned in the article. But we choose this kind of projects as the first real practical project for us as a base to research and develop more in this area.

It is just the beginning of our work.

Thank you for your support and I hope that our future work will please you.

pulsatrix

????????

Mirn

??FPGA?????????????????

www.youtube.com/watch?v=d4Rrn4SnTM0

????????????FullHD?60FPS??????????????????5??????????

150x150??Arduino???????????300uSec???????

www.youtube.com/watch?v=kkZLZnUYn9E

www.youtube.com/watch?v=210UZUjZwBs

pulsatrix

I understand, that we, Asian, all in one face for you, but Chinese language really different from Japanese. Google saw that ????????

— I do not know anything. on Chinese and My prefecture Shikoku way on Japanese language.

Why did you decide that it is Japanese?

eugenk

Sorry, but want to ask. Is some English publication on habr included in your teaching plan?

hany606 Автор

Yes, our course project is to create the project then write an article on habr but our team is only two people and both of us don't know Russian. So, we wrote it in English. Thank you.

eugenk

«both of us don't know Russian» it is excellent, especially сonsidering that you answer Russian comments :)))))

40 years ago we have lectures and seminars about chemical terodymamics in English only. Рeople was angry. But now I understatd that it was very usefull. Great practice in English! I'm glad to see, that the similar learning practice is used in modern Russian high school.

hany606 Автор

I am using Google Translate to translate the comments. Innopolis University (our university) is an international university and all the courses, curriculums, lectures and everything are only in English.

eugenk

Really ??? You don't speak Russian ??? I'm shocked!!! From where are you? But maybe, maybe… Remember one funny case. I'm a motobiker. And one day I was on our motobike place. I was going to leave, but speak with mans. Suddenly appeared boy and girl. Both don't speak Russian absolutely! They are got lost. And cannot find metropoliten station. I was the only from mans, who can a little speak English. And I spent them to metro. When walk, talk a little. They are from Iceland. Boy works remote with Nokia. He was verilog developer. But I also verilog developer! It is not very often proffesion! When we find out this, we laugh long :))) Really, travel from Reykjavik to Moscow. Got lost in Moscow. And find a colleague onto far far motobike place! :)))

Exeland

Ну надо с чего-то начинать. На мой взгляд неплохая статья и отличная курсовая с практической частью, что в моём вузе не хватало.

hany606 Автор

Thank you very much.

eugenk

Ну если без FPGA, чисто на софте, то капчу какую-нибудь раздраконить :)