Cloud CI systems are in high demand at the moment. In this article, we'll show how to integrate source code analysis into a cloud CI platform using the tools already available in PVS-Studio. As an example, we'll use the Travis CI service.

Why do we consider third-party clouds and don't make our own? There is a number of reasons, the main one is that the SaaS implementation is quite an expensive and difficult procedure. In fact, it is a simple and trivial task to directly integrate PVS-Studio analysis into a third-party cloud platform — whether it's open platforms like CircleCI, Travis CI, GitLab, or a specific enterprise solution used only in a certain company. Therefore we can say that PVS-Studio is already available «in the clouds». Another issue is implementing and ensuring access to the infrastructure 24/7. This is a more complicated task. PVS-Studio is not going to provide its own cloud platform directly for running analysis on it.

Travis CI is a service for building and testing software that uses GitHub as a storage. Travis CI doesn't require changing of programming code for using the service. All settings are made in the file .travis.yml located in the root of the repository.

We'll take LXC (Linux Containers) as a test project for PVS-Studio. It is a virtualization system at the operation system level for launching several instances of the Linux OS at one node.

The project is small, but more than enough for demonstration. Output of the cloc command:

Note: LXC developers already use Travis CI, so we'll take their configuration file as the basis and edit it for our purposes.

To start working with Travis CI we follow the link and login using a GitHub-account.

In the open window we need to login Travis CI.

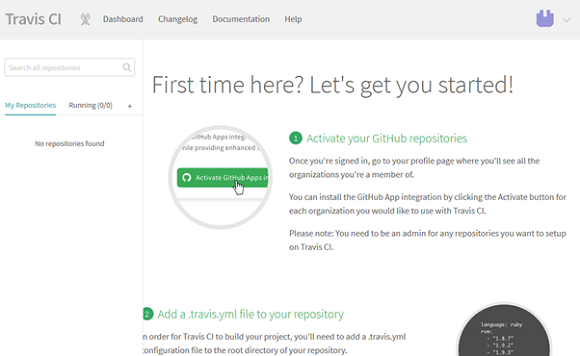

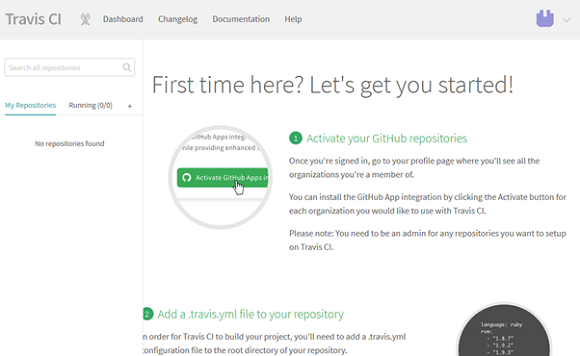

After authorization, it redirects to the welcome page «First time here? Lets get you started!», where we find a brief description what has to be done afterwards to get started:

Let's start doing these actions.

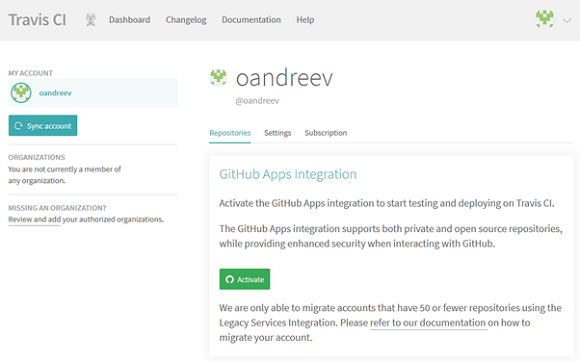

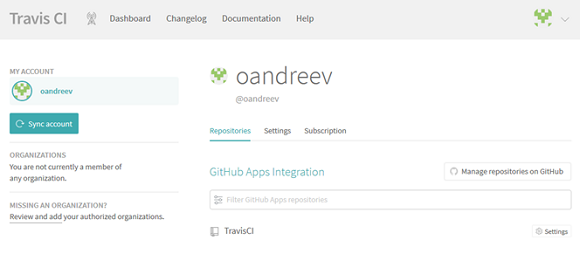

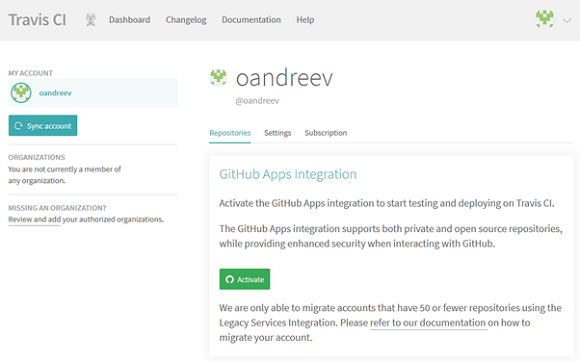

To add our repository in Travis CI we go to the profile settings by the link and press «Activate».

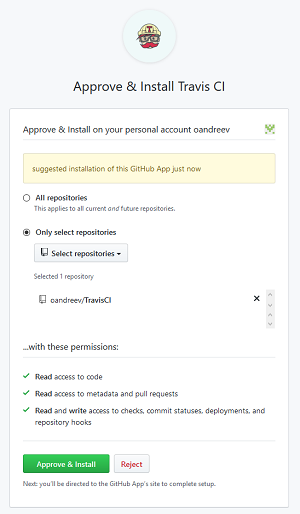

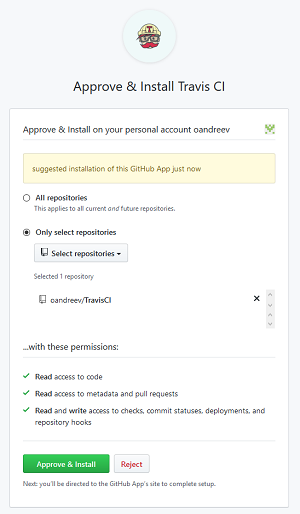

Once clicked, a window will open to select repositories that the Travis CI app will be given access to.

Note: to provide access to the repository, your account must have administrator's rights to do so.

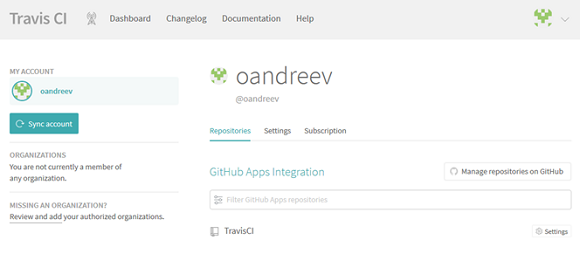

After that we choose the right repository, confirm the choice with the «Approve & Install» button, and we'll be redirected back to the profile settings page.

Let's add some variables that we'll use to create the analyzer's license file and send its reports. To do this, we'll go to the settings page — the «Settings» button to the right of the needed repository.

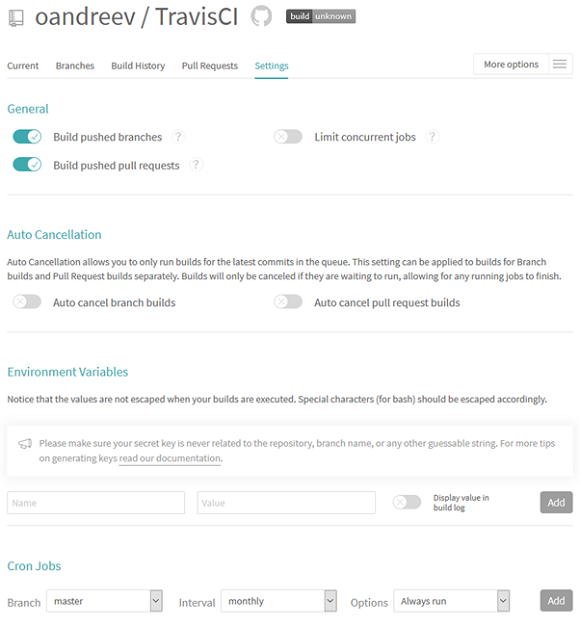

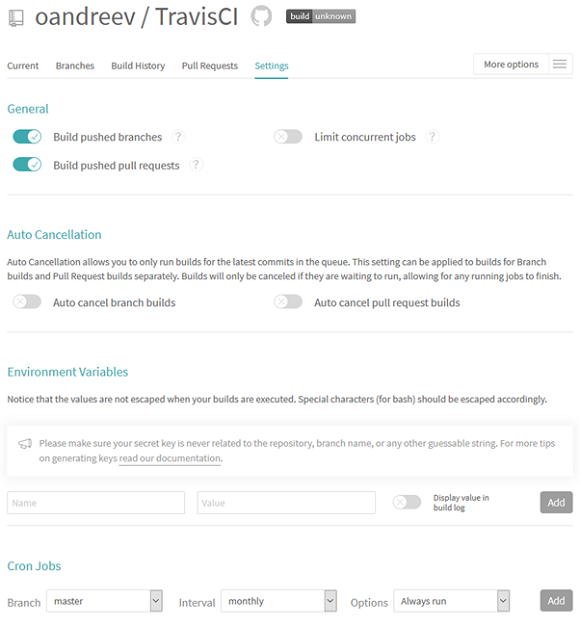

The settings window will open.

Brief description of settings;

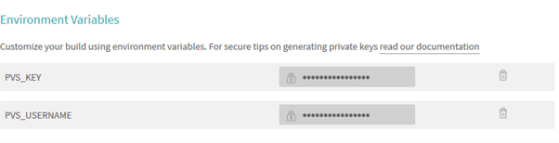

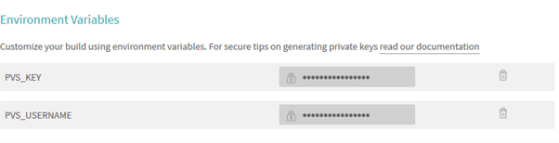

In the section «Environment Variables» we'll create variables PVS_USERNAME and PVS_KEY containing a username and a license key for the static analyzer respectively. If you don't have a permanent PVS-Studio license, you can request a trial license.

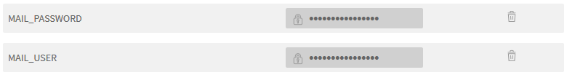

Right here we'll create variables MAIL_USER and MAIL_PASSWORD, containing a username and an email password, which we'll use for sending reports.

When running tasks, Travis CI takes instructions from the .travis.yml file, located in the root of the repository.

By using Travis CI, we can run static analysis both directly on the virtual machine and use a preconfigured container to do so. The results of these approaches are no different from each other. However, usage of a pre-configured container can be useful. For example, if we already have a container with some specific environment, inside of which a software product is built and tested and we don't want to restore this environment in Travis CI.

Let's create a configuration to run the analyzer on a virtual machine.

For building and testing we'll use a virtual machine on Ubuntu Trusty, its description is available by the link.

First of all, we specify that the project is written in C and list compilers that we will use for the build:

Note: if you specify more than one compiler, the tasks will run simultaneously for each of them. Read more here.

Before the build we need to add the analyzer repository, set dependencies and additional packages:

Before we build a project, we need to prepare your environment:

Next, we need to create a license file and start analyzing the project.

Then we create a license file for the analyzer by the first command. Data for the $PVS_USERNAME and $PVS_KEY variables is taken from the project settings.

By the next command, we start tracing the project build.

After that we run static analysis.

Note: when using a trial license, you need to specify the parameter --disableLicenseExpirationCheck.

The file with the analysis results is converted into the html-report by the last command.

Since TravisCI doesn't let you change the format of email notifications, in the last step we'll use the sendemail package for sending reports:

Here is the full text of the configuration file for running the analyzer on the virtual machine:

To run PVS-Studio in a container, let's pre-create it using the following Dockerfile:

In this case, the configuration file may look like this:

As you can see, in this case we do nothing inside the virtual machine, and all the actions on building and testing the project take place inside the container.

Note: when you start the container, you need to specify the parameter --cap-add SYS_PTRACE or --security-opt seccomp:unconfined, as a ptrace system call is used for compiler tracing.

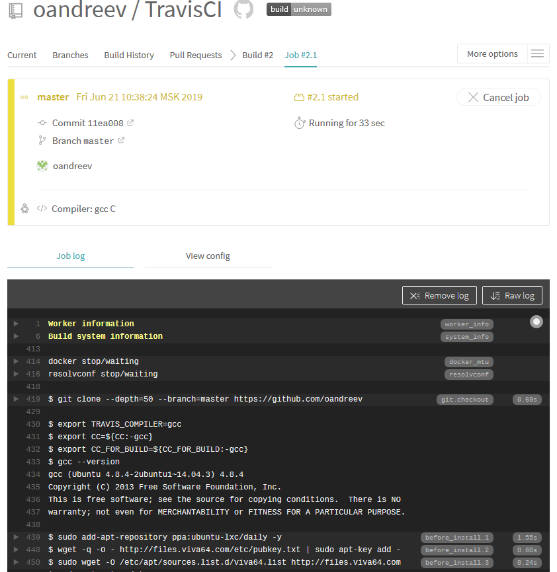

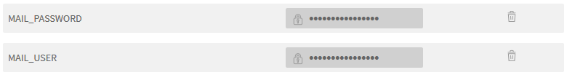

Next, we load the configuration file in the root of the repository and see that Travis CI has been notified of changes in the project and has automatically started the build.

Details of the build progress and analyzer check can be seen in the console.

After the tests are over, we will receive two emails: the first — with static analysis results for building a project using gcc, and the second — for clang, respectively.

In general, the project is quite clean, the analyzer issued only 24 high-certainty and 46 medium-certainty warnings. Let's look at a couple of interesting notifications:

V590 Consider inspecting the 'ret != (- 1) && ret == 1' expression. The expression is excessive or contains a misprint. attach.c 107

If ret == 1, it is definitely not equal to -1 (EOF). Redundant check, ret != EOF can be removed.

Two similar warnings have been issued:

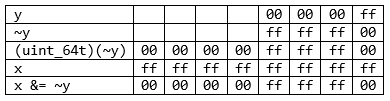

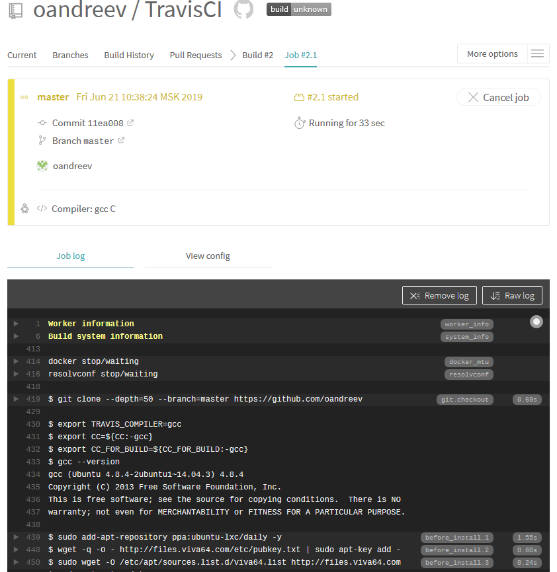

V784 The size of the bit mask is less than the size of the first operand. This will cause the loss of higher bits. conf.c 1879

Under Linux, long is a 64-bit integer variable, mo->flag is a 32-bit integer variable. Usage of mo->flag as a bit mask will lead to the loss of 32 high bits. A bit mask is implicitly cast to a 64-bit integer variable after bitwise inversion. High bits of this mask may be lost.

I'll show it using an example:

Here is the correct version of code:

The analyzer issued another similar warning:

V612 An unconditional 'return' within a loop. conf.c 3477

The loop is started and interrupted on the first iteration. This might have been made intentionally, but in this case the loop could have been omitted.

V557 Array underrun is possible. The value of 'bytes — 1' index could reach -1. network.c 2570

Bytes are read in the buffer from the pipe. In case of an error, the lxc_read_nointr function will return a negative value. If all goes successfully, a terminal null is written by the last element. However, if 0 bytes are read, the index will be out of buffer bounds, leading to undefined behavior.

The analyzer issued another similar warning:

V576 Incorrect format. Consider checking the third actual argument of the 'sscanf' function. It's dangerous to use string specifier without width specification. Buffer overflow is possible. lxc_unshare.c 205

In this case, usage of sscanf can be dangerous, because if the oparq buffer is larger than the name buffer, the index will be out of bounds when forming the name buffer.

As we see, it's a quite simple task to configure a static code analyzer check in a cloud. For this, we just need to add one file in a repository and spend little time setting up the CI system. As a result, we'll get a tool to detect problem at the stage of writing code. The tool lets us prevent bugs from getting to the next stages of testing, where their fixing will require much time and efforts.

Of course, PVS-Studio usage with cloud platforms is not only limited to Travis CI. Similar to the method described in the article, with small differences, PVS-Studio analysis can be integrated into other popular cloud CI solutions, such as CircleCI, GitLab, etc.

Why do we consider third-party clouds instead of building our own? There are a number of reasons, and the main one is that a SaaS implementation is expensive and difficult. By contrast, integrating PVS-Studio analysis directly into a third-party cloud platform is a simple task, whether it's an open platform like CircleCI, Travis CI, or GitLab, or an enterprise solution used only within a certain company. So we can say that PVS-Studio is already available «in the clouds». Implementing our own infrastructure and ensuring access to it 24/7 is a different and more complicated matter, and PVS-Studio is not going to provide its own cloud platform for running analysis.

Some Information about the Software Used

Travis CI is a service for building and testing software that uses GitHub as storage. Using the service doesn't require changing the program code: all settings are made in the .travis.yml file located in the root of the repository.

We'll take LXC (Linux Containers) as a test project for PVS-Studio. It is an operating-system-level virtualization system for launching several instances of Linux on a single node.

The project is small, but more than enough for a demonstration. Here is the output of the cloc command:

| Language | files | blank | comment | code |
|---|---|---|---|---|
| C | 124 | 11937 | 6758 | 50836 |
| C/C++ Header | 65 | 1117 | 3676 | 3774 |

Configuration

To start working with Travis CI, we follow the link and log in using a GitHub account.

In the window that opens, we need to log in to Travis CI.

After authorization, we are redirected to the welcome page «First time here? Lets get you started!», which briefly describes what to do next:

- enable the repositories;

- add the .travis.yml file in the repository;

- start the first build.

Let's go through these steps.

To add our repository to Travis CI, we go to the profile settings by the link and press «Activate».

After the click, a window opens where we select the repositories that the Travis CI app will be given access to.

Note: to provide access to a repository, your account must have administrator rights for it.

After that, we choose the needed repository, confirm the choice with the «Approve & Install» button, and get redirected back to the profile settings page.

Let's add some variables that we'll use to create the analyzer's license file and send its reports. To do this, we go to the settings page using the «Settings» button to the right of the needed repository.

The settings window will open.

A brief description of the settings:

- «General» section: configures the triggers that start tasks automatically;

- «Auto Cancellation» section: configures automatic cancellation of builds;

- «Environment Variables» section: defines environment variables that contain both public and confidential information, such as login credentials and SSH keys;

- «Cron Jobs» section: configures the schedule for running tasks.

In the «Environment Variables» section, we'll create the variables PVS_USERNAME and PVS_KEY containing the username and the license key for the static analyzer, respectively. If you don't have a permanent PVS-Studio license, you can request a trial license.

In the same section, we'll create the variables MAIL_USER and MAIL_PASSWORD containing the username and password of the email account that we'll use for sending reports.

When running tasks, Travis CI takes instructions from the .travis.yml file located in the root of the repository.

With Travis CI, we can run static analysis either directly on the virtual machine or inside a preconfigured container. The results of the two approaches are the same. However, a preconfigured container can be useful, for example, if we already have a container with a specific environment in which the software product is built and tested, and we don't want to recreate this environment in Travis CI.

Let's create a configuration to run the analyzer on a virtual machine.

For building and testing, we'll use a virtual machine running Ubuntu Trusty; its description is available by the link.

First of all, we specify that the project is written in C and list the compilers that we will use for the build:

```yaml
language: c
compiler:
  - gcc
  - clang
```

Note: if you specify more than one compiler, the tasks will run simultaneously for each of them. Read more here.

Before the build, we need to add the analyzer repository and install the dependencies and additional packages:

```yaml
before_install:
  - sudo add-apt-repository ppa:ubuntu-lxc/daily -y
  - wget -q -O - https://files.viva64.com/etc/pubkey.txt | sudo apt-key add -
  - sudo wget -O /etc/apt/sources.list.d/viva64.list
    https://files.viva64.com/etc/viva64.list
  - sudo apt-get update -qq
  - sudo apt-get install -qq coccinelle parallel
    libapparmor-dev libcap-dev libseccomp-dev
    python3-dev python3-setuptools docbook2x
    libgnutls-dev libselinux1-dev linux-libc-dev pvs-studio
    libio-socket-ssl-perl libnet-ssleay-perl sendemail
    ca-certificates
```

Before building the project, we need to prepare the environment:

```yaml
script:
  - ./coccinelle/run-coccinelle.sh -i
  - git diff --exit-code
  - export CFLAGS="-Wall -Werror"
  - export LDFLAGS="-pthread -lpthread"
  - ./autogen.sh
  - rm -Rf build
  - mkdir build
  - cd build
  - ../configure --enable-tests --with-distro=unknown
```

Next, we need to create a license file and start analyzing the project.

The first command creates a license file for the analyzer. The values of the $PVS_USERNAME and $PVS_KEY variables are taken from the project settings.

```yaml
  - pvs-studio-analyzer credentials $PVS_USERNAME $PVS_KEY -o PVS-Studio.lic
```

The next command starts tracing the project build.

```yaml
  - pvs-studio-analyzer trace -- make -j4
```

After that, we run static analysis.

Note: when using a trial license, you need to specify the parameter --disableLicenseExpirationCheck.

```yaml
  - pvs-studio-analyzer analyze -j2 -l PVS-Studio.lic
    -o PVS-Studio-${CC}.log
    --disableLicenseExpirationCheck
```

The last command converts the file with the analysis results into an HTML report.

```yaml
  - plog-converter -t html PVS-Studio-${CC}.log
    -o PVS-Studio-${CC}.html
```

Since Travis CI doesn't let you change the format of email notifications, in the last step we'll use the sendemail package to send the reports:

```yaml
  - sendemail -t mail@domain.com
    -u "PVS-Studio $CC report, commit:$TRAVIS_COMMIT"
    -m "PVS-Studio $CC report, commit:$TRAVIS_COMMIT"
    -s smtp.gmail.com:587
    -xu $MAIL_USER
    -xp $MAIL_PASSWORD
    -o tls=yes
    -f $MAIL_USER
    -a PVS-Studio-${CC}.log PVS-Studio-${CC}.html
```

Here is the full text of the configuration file for running the analyzer on the virtual machine:

```yaml
language: c
compiler:
  - gcc
  - clang
before_install:
  - sudo add-apt-repository ppa:ubuntu-lxc/daily -y
  - wget -q -O - https://files.viva64.com/etc/pubkey.txt | sudo apt-key add -
  - sudo wget -O /etc/apt/sources.list.d/viva64.list
    https://files.viva64.com/etc/viva64.list
  - sudo apt-get update -qq
  - sudo apt-get install -qq coccinelle parallel
    libapparmor-dev libcap-dev libseccomp-dev
    python3-dev python3-setuptools docbook2x
    libgnutls-dev libselinux1-dev linux-libc-dev pvs-studio
    libio-socket-ssl-perl libnet-ssleay-perl sendemail
    ca-certificates

script:
  - ./coccinelle/run-coccinelle.sh -i
  - git diff --exit-code
  - export CFLAGS="-Wall -Werror"
  - export LDFLAGS="-pthread -lpthread"
  - ./autogen.sh
  - rm -Rf build
  - mkdir build
  - cd build
  - ../configure --enable-tests --with-distro=unknown
  - pvs-studio-analyzer credentials $PVS_USERNAME $PVS_KEY -o PVS-Studio.lic
  - pvs-studio-analyzer trace -- make -j4
  - pvs-studio-analyzer analyze -j2 -l PVS-Studio.lic
    -o PVS-Studio-${CC}.log
    --disableLicenseExpirationCheck
  - plog-converter -t html PVS-Studio-${CC}.log -o PVS-Studio-${CC}.html
  - sendemail -t mail@domain.com
    -u "PVS-Studio $CC report, commit:$TRAVIS_COMMIT"
    -m "PVS-Studio $CC report, commit:$TRAVIS_COMMIT"
    -s smtp.gmail.com:587
    -xu $MAIL_USER
    -xp $MAIL_PASSWORD
    -o tls=yes
    -f $MAIL_USER
    -a PVS-Studio-${CC}.log PVS-Studio-${CC}.html
```

To run PVS-Studio in a container, let's build it in advance using the following Dockerfile:

```dockerfile
FROM docker.io/ubuntu:trusty

ENV CFLAGS="-Wall -Werror"
ENV LDFLAGS="-pthread -lpthread"

RUN apt-get update && apt-get install -y software-properties-common wget \
    && wget -q -O - https://files.viva64.com/etc/pubkey.txt | sudo apt-key add - \
    && wget -O /etc/apt/sources.list.d/viva64.list \
       https://files.viva64.com/etc/viva64.list \
    && apt-get update \
    && apt-get install -yqq coccinelle parallel \
       libapparmor-dev libcap-dev libseccomp-dev \
       python3-dev python3-setuptools docbook2x \
       libgnutls-dev libselinux1-dev linux-libc-dev \
       pvs-studio git libtool autotools-dev automake \
       pkg-config clang make libio-socket-ssl-perl \
       libnet-ssleay-perl sendemail ca-certificates \
    && rm -rf /var/lib/apt/lists/*
```

In this case, the configuration file may look like this:

```yaml
before_install:
  - docker pull docker.io/oandreev/lxc

env:
  - CC=gcc
  - CC=clang

script:
  - docker run
    --rm
    --cap-add SYS_PTRACE
    -v $(pwd):/pvs
    -w /pvs
    docker.io/oandreev/lxc
    /bin/bash -c " ./coccinelle/run-coccinelle.sh -i
      && git diff --exit-code
      && ./autogen.sh
      && mkdir build && cd build
      && ../configure CC=$CC
      && pvs-studio-analyzer credentials
         $PVS_USERNAME $PVS_KEY -o PVS-Studio.lic
      && pvs-studio-analyzer trace -- make -j4
      && pvs-studio-analyzer analyze -j2
         -l PVS-Studio.lic
         -o PVS-Studio-$CC.log
         --disableLicenseExpirationCheck
      && plog-converter -t html
         -o PVS-Studio-$CC.html
         PVS-Studio-$CC.log
      && sendemail -t mail@domain.com
         -u 'PVS-Studio $CC report, commit:$TRAVIS_COMMIT'
         -m 'PVS-Studio $CC report, commit:$TRAVIS_COMMIT'
         -s smtp.gmail.com:587
         -xu $MAIL_USER -xp $MAIL_PASSWORD
         -o tls=yes -f $MAIL_USER
         -a PVS-Studio-${CC}.log PVS-Studio-${CC}.html"
```

As you can see, in this case we do nothing inside the virtual machine itself: all the building and testing of the project takes place inside the container.

Note: when you start the container, you need to specify the parameter --cap-add SYS_PTRACE or --security-opt seccomp:unconfined, because the ptrace system call is used for compiler tracing.

Next, we upload the configuration file to the root of the repository and see that Travis CI has been notified of the changes in the project and has automatically started the build.

Details of the build progress and the analyzer check can be seen in the console.

After the tests are over, we will receive two emails: the first with the static analysis results for the project built with gcc, and the second with the results for clang.

Briefly About the Check Results

In general, the project is quite clean: the analyzer issued only 24 high-certainty and 46 medium-certainty warnings. Let's look at a couple of interesting ones:

Redundant Conditions in if

V590 Consider inspecting the 'ret != (- 1) && ret == 1' expression. The expression is excessive or contains a misprint. attach.c 107

```c
#define EOF -1

static struct lxc_proc_context_info *lxc_proc_get_context_info(pid_t pid)
{
  ....
  while (getline(&line, &line_bufsz, proc_file) != -1)
  {
    ret = sscanf(line, "CapBnd: %llx", &info->capability_mask);
    if (ret != EOF && ret == 1) // <=
    {
      found = true;
      break;
    }
  }
  ....
}
```

If ret == 1, it is definitely not equal to -1 (EOF). The ret != EOF check is redundant and can be removed.

Two similar warnings have been issued:

- V590 Consider inspecting the 'ret != (- 1) && ret == 1' expression. The expression is excessive or contains a misprint. attach.c 579

- V590 Consider inspecting the 'ret != (- 1) && ret == 1' expression. The expression is excessive or contains a misprint. attach.c 583
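The simplified check can be sketched as a small standalone helper. This is only an illustration: the function name parse_capbnd_line is hypothetical and not part of LXC, and the input is assumed to be a single line in the format of /proc/<pid>/status.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>

/* Hypothetical helper, not part of LXC: parses a "CapBnd:" line and stores
 * the capability mask in *mask.
 * sscanf returns the number of converted fields, so comparing the result
 * with 1 already rules out EOF (-1); no separate EOF check is needed. */
static bool parse_capbnd_line(const char *line, unsigned long long *mask)
{
    assert(line != NULL && mask != NULL);
    return sscanf(line, "CapBnd: %llx", mask) == 1;
}
```

A non-matching line makes sscanf return 0 and an empty line makes it return EOF; both cases are rejected by the single comparison with 1.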

Loss of High Bits

V784 The size of the bit mask is less than the size of the first operand. This will cause the loss of higher bits. conf.c 1879

```c
struct mount_opt
{
  char *name;
  int clear;
  int flag;
};

static void parse_mntopt(char *opt, unsigned long *flags,
                         char **data, size_t size)
{
  struct mount_opt *mo;

  /* If opt is found in mount_opt, set or clear flags.
   * Otherwise append it to data. */
  for (mo = &mount_opt[0]; mo->name != NULL; mo++)
  {
    if (strncmp(opt, mo->name, strlen(mo->name)) == 0)
    {
      if (mo->clear)
      {
        *flags &= ~mo->flag; // <=
      }
      else
      {
        *flags |= mo->flag;
      }
      return;
    }
  }
  ....
}
```

On 64-bit Linux, long is a 64-bit integer, while mo->flag is a 32-bit integer. When mo->flag is used as a bit mask, the bitwise inversion is performed on the 32-bit value, and only the result is implicitly converted to 64 bits. The upper 32 bits of the mask are therefore not produced by the inversion itself and may be lost.

Here is a simplified example:

```c
unsigned long long x;
unsigned y;
....
x &= ~y;
```

Here is the correct version of the code:

```c
*flags &= ~(unsigned long)(mo->flag);
```

The analyzer issued another similar warning:

- V784 The size of the bit mask is less than the size of the first operand. This will cause the loss of higher bits. conf.c 1933
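To make the effect concrete, here is a minimal sketch with fixed-width unsigned types, mirroring the simplified unsigned example rather than the LXC code itself. It assumes a platform where unsigned int is 32 bits wide, as on common Linux targets; all function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Clears mask bits the way the flagged pattern does: the inversion happens
 * at 32-bit width, and the result is zero-extended to 64 bits afterwards,
 * so the upper 32 bits of the widened mask are all zeros. */
static uint64_t clear_bits_narrow(uint64_t flags, uint32_t mask)
{
    return flags & ~mask;
}

/* Widens the mask first, so the inversion sets the upper 32 bits of the
 * mask and the upper half of flags is preserved. */
static uint64_t clear_bits_wide(uint64_t flags, uint32_t mask)
{
    return flags & ~(uint64_t)mask;
}

/* Self-check illustrating the difference on a value with all bits set. */
static void demo_bit_clearing(void)
{
    assert(clear_bits_narrow(UINT64_MAX, 1u) == UINT64_C(0x00000000FFFFFFFE));
    assert(clear_bits_wide(UINT64_MAX, 1u) == UINT64_C(0xFFFFFFFFFFFFFFFE));
}
```

In the narrow variant, clearing a single low bit also wipes the whole upper half of the 64-bit value; widening the mask before the inversion avoids that.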

Suspicious Loop

V612 An unconditional 'return' within a loop. conf.c 3477

```c
#define lxc_list_for_each(__iterator, __list) \
  for (__iterator = (__list)->next; __iterator != __list; \
       __iterator = __iterator->next)

static bool verify_start_hooks(struct lxc_conf *conf)
{
  char path[PATH_MAX];
  struct lxc_list *it;

  lxc_list_for_each (it, &conf->hooks[LXCHOOK_START]) {
    int ret;
    char *hookname = it->elem;

    ret = snprintf(path, PATH_MAX, "%s%s",
                   conf->rootfs.path ? conf->rootfs.mount : "",
                   hookname);
    if (ret < 0 || ret >= PATH_MAX)
      return false;

    ret = access(path, X_OK);
    if (ret < 0) {
      SYSERROR("Start hook \"%s\" not found in container",
               hookname);
      return false;
    }

    return true; // <=
  }

  return true;
}
```

The loop starts and is interrupted on the first iteration. This might have been done intentionally, but in that case the loop could have been omitted.
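If the intent was to verify every start hook rather than only the first one, the loop could be shaped as in the sketch below. This is an assumption about the intent, not a statement about what the LXC authors meant; the names verify_all_hooks, hook_check_fn, and hook_name_is_nonempty are hypothetical, and a plain array stands in for the LXC list.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical predicate type: returns true if a single hook passes. */
typedef bool (*hook_check_fn)(const char *hookname);

/* Returns false on the first failing hook and true only after every hook
 * has been checked. There is no unconditional return inside the loop. */
static bool verify_all_hooks(const char *const *hooks, size_t count,
                             hook_check_fn check)
{
    assert(check != NULL);
    assert(hooks != NULL || count == 0);
    for (size_t i = 0; i < count; i++) {
        if (!check(hooks[i]))
            return false;
    }
    return true;
}

/* Stand-in check used only for illustration: a hook name must be non-empty. */
static bool hook_name_is_nonempty(const char *hookname)
{
    return hookname != NULL && hookname[0] != '\0';
}
```

With this shape, a failing hook anywhere in the list is reported, and an empty list still yields true, matching the final return of the original function.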

Array Index out of Bounds

V557 Array underrun is possible. The value of 'bytes - 1' index could reach -1. network.c 2570

```c
static int lxc_create_network_unpriv_exec(const char *lxcpath,
                                          const char *lxcname,
                                          struct lxc_netdev *netdev,
                                          pid_t pid,
                                          unsigned int hooks_version)
{
  int bytes;
  char buffer[PATH_MAX] = {0};
  ....
  bytes = lxc_read_nointr(pipefd[0], &buffer, PATH_MAX);
  if (bytes < 0)
  {
    SYSERROR("Failed to read from pipe file descriptor");
    close(pipefd[0]);
  }
  else
  {
    buffer[bytes - 1] = '\0';
  }
  ....
}
```

Bytes are read from the pipe into the buffer. In case of an error, the lxc_read_nointr function returns a negative value. If the read succeeds, a terminating null is written over the last byte read. However, if 0 bytes are read, the index is -1, which is outside the buffer bounds and leads to undefined behavior.

The analyzer issued another similar warning:

- V557 Array underrun is possible. The value of 'bytes - 1' index could reach -1. network.c 2725
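One way to guard against the empty read is to treat a zero byte count as a failure before indexing. The helper below is a hedged sketch of that idea; terminate_last_byte is a hypothetical name, and whether an empty read should count as an error in LXC is an assumption.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper, not part of LXC: replaces the last byte read with a
 * terminating null, as the original code does, but only when the byte count
 * is in the range 1..size. Returns 0 on success and -1 otherwise
 * (read error, empty read, or a count larger than the buffer). */
static int terminate_last_byte(char *buffer, size_t size, long bytes)
{
    assert(buffer != NULL);
    if (bytes <= 0 || (size_t)bytes > size)
        return -1;
    buffer[bytes - 1] = '\0';
    return 0;
}
```

The caller can then handle -1 in the same branch that already reports a failed read.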

Buffer Overflow

V576 Incorrect format. Consider checking the third actual argument of the 'sscanf' function. It's dangerous to use string specifier without width specification. Buffer overflow is possible. lxc_unshare.c 205

```c
static bool lookup_user(const char *oparg, uid_t *uid)
{
  char name[PATH_MAX];
  ....
  if (sscanf(oparg, "%u", uid) < 1)
  {
    /* not a uid -- perhaps a username */
    if (sscanf(oparg, "%s", name) < 1) // <=
    {
      free(buf);
      return false;
    }
    ....
  }
  ....
}
```

In this case, using sscanf can be dangerous: if the string in oparg is longer than the name buffer, sscanf will write past the end of name.
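A common remedy is to give %s an explicit width that is one less than the buffer size. The sketch below shows the technique on a small buffer; NAME_WIDTH, read_name, and the stringification macros are illustrative assumptions and do not come from LXC.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>

/* Illustrative size, not LXC's: the buffer holds NAME_WIDTH characters
 * plus the terminating null. */
#define NAME_WIDTH 63
#define STRINGIFY_(x) #x
#define STRINGIFY(x) STRINGIFY_(x)

/* Reads one whitespace-delimited word from input into name, which must have
 * room for NAME_WIDTH + 1 bytes. The width in "%63s" caps how many characters
 * sscanf stores, so a longer word is truncated instead of overflowing. */
static bool read_name(const char *input, char name[NAME_WIDTH + 1])
{
    assert(input != NULL && name != NULL);
    return sscanf(input, "%" STRINGIFY(NAME_WIDTH) "s", name) == 1;
}
```

Building the format string from the same macro that sizes the buffer keeps the two values from drifting apart when the size changes.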

Conclusion

As we can see, configuring a static analyzer check in the cloud is quite a simple task. We just need to add one file to the repository and spend a little time setting up the CI system. As a result, we get a tool that detects problems at the stage of writing code and keeps bugs from reaching the later stages of testing, where fixing them takes much more time and effort.

Of course, using PVS-Studio with cloud platforms is not limited to Travis CI. In a similar way, with small differences, PVS-Studio analysis can be integrated into other popular cloud CI solutions, such as CircleCI and GitLab.

Useful links

- For additional information on running PVS-Studio on Linux and macOS, follow the link.

- You can also read about creating, configuring, and using containers with the PVS-Studio static code analyzer installed by the link.

- TravisCI documentation.