In an earlier analysis of how standard DigitalOcean server configurations handle WebRTC streaming, we found that one server can serve up to 2000 viewers. In real life, one server is often not enough.

Suppose betting enthusiasts in Germany are watching live horse races in Australia. Horse racing is not only a sport: it can also bring big gains, provided that field bets are placed at the right time, so the video has to be delivered with the lowest possible latency.

Another example: a global corporation, one of the FMCG market leaders with subsidiaries in Europe, Russia and Southeast Asia, is organizing training webinars for sales managers, streamed live from its headquarters in the Mediterranean. The viewers must be able to see and hear the presenter in real time.

Both examples share one requirement: deliver low-latency media streams to a large number of viewers. This calls for a content delivery network (CDN).

Conventional stream delivery over HLS is not well suited here: it can add delays of up to 30 seconds, which is critical for a real-time show. Imagine that the horses have already finished and the results have been published on the website while the fans are still watching the end of the race. WebRTC does not have this disadvantage and can keep the delay under 1 second, even across continents, thanks to modern communication channels.

To begin with, we will see how to deploy the simplest CDN for delivering WebRTC streams and how to scale it afterwards.

CDN principles

A server in a CDN can play one of the following roles:

- Origin is the server built for publishing media streams. It shares streams with other servers as well as with users.

- Transcoder is the server dedicated to transcoding streams. It pulls streams from the Origin server and passes transcoded streams to Edge. We will look at this role in the following part.

- Edge is the server designed for sharing streams with users. It pulls streams from Origin or Transcoder servers and does not pass them to other CDN servers.
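The roles listed above and the direction in which streams are pulled can be summarized in a small sketch. The names `Role`, `ALLOWED_SOURCES` and `can_pull_from` are illustrative assumptions and do not belong to any server API.

```python
from enum import Enum


class Role(Enum):
    ORIGIN = "origin"
    TRANSCODER = "transcoder"
    EDGE = "edge"


# Which roles a server may pull streams from, following the role list above.
ALLOWED_SOURCES = {
    Role.ORIGIN: set(),                          # publishing point, no CDN source modeled here
    Role.TRANSCODER: {Role.ORIGIN},              # pulls from Origin, passes on to Edge
    Role.EDGE: {Role.ORIGIN, Role.TRANSCODER},   # pulls, never re-shares inside the CDN
}


def can_pull_from(puller: Role, source: Role) -> bool:
    """Return True if a server with role `puller` may pull a stream from `source`."""
    return source in ALLOWED_SOURCES[puller]


print(can_pull_from(Role.EDGE, Role.TRANSCODER))   # True
print(can_pull_from(Role.TRANSCODER, Role.EDGE))   # False
```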

An Origin server accepts published WebRTC and RTMP streams and can capture streams from other sources via RTMP, RTSP or other available methods.

Users can play streams from Edge servers via WebRTC, RTMP, RTSP, HLS.

In order to minimize latency, it is desirable to transmit streams among CDN servers using WebRTC.

A static CDN is described entirely at the configuration stage. In fact, setting up a static CDN is similar to setting up a load balancer: all the receivers are listed in the settings of the stream source server.

For example, suppose we have one Origin server in Frankfurt, one Edge in New York and one in Singapore.

In this case Origin will be set up more or less like this:

<loadbalancer mode="roundrobin" stream_distribution="webrtc">
  <node id="1">
    <ip>edge1.thestaticcdn.com</ip>
    <wss>443</wss>
  </node>
  <node id="2">
    <ip>edge2.thestaticcdn.com</ip>
    <wss>443</wss>
  </node>
</loadbalancer>

Here comes the first issue with a static CDN: to add a new Edge server to this kind of CDN or to remove one from it, you have to modify the settings and restart all Origin servers.

The streams published on Origin are broadcast to all Edge servers listed in the settings. The decision on which Edge server a user connects to is also made on the Origin server. Here comes the second issue: if an Edge has few or no viewers (for example, it is early evening in Singapore but the middle of the night in New York), the streams will be broadcast to Edge 1 anyway. The traffic is wasted, and it is not free of charge.

These two issues can be solved with a dynamic CDN.

Now we want to change the CDN without restarting all Origin servers, and we do not want to transmit streams to Edge servers that have no users connected. In this case there is no need to keep the whole list of CDN servers somewhere in the settings. Each server must build such a list on its own, and to do so it must know the current status of the other servers at any given time.
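To put a number on the wasted traffic, here is a rough sketch that compares the two approaches for one published stream. The function name, the 2 Mbps bitrate and the viewer counts are hypothetical. The dynamic case assumes that a stream is sent only to Edge servers that currently have viewers.

```python
def origin_egress_mbps(viewers_per_edge, stream_bitrate_mbps, dynamic):
    """Traffic sent from Origin to Edge servers for one published stream.

    Static CDN: the stream goes to every configured Edge.
    Dynamic CDN (as assumed here): only to Edges that have viewers.
    """
    if dynamic:
        receiving = [edge for edge, viewers in viewers_per_edge.items() if viewers > 0]
    else:
        receiving = list(viewers_per_edge)
    return len(receiving) * stream_bitrate_mbps


# Hypothetical load: nobody is watching on edge1, 350 viewers on edge2.
viewers = {"edge1": 0, "edge2": 350}
print(origin_egress_mbps(viewers, 2.0, dynamic=False))  # 4.0
print(origin_egress_mbps(viewers, 2.0, dynamic=True))   # 2.0
```

In this toy case half of the static CDN's inter-server traffic serves no viewer at all.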

Ideally, it should be enough to specify in the settings the entry point, that is, the server that starts the CDN. During startup, each server sends a request to this entry point and receives the list of CDN nodes and published streams in response. If the entry point cannot be reached, the server waits for messages from other servers.

The server must communicate any changes in its status to other servers in the CDN.
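The startup and notification logic described above can be modeled in memory as follows. This is only a sketch of the idea, not the actual protocol: the `Node` class, the message fields and the shared `network` dictionary that stands in for the transport are all assumptions.

```python
class Node:
    def __init__(self, name, network, entry_point=None):
        self.name = name
        self.network = network          # name -> Node, stands in for the real transport
        self.entry_point = entry_point  # name of the entry point, or None
        self.nodes = {name}             # CDN nodes this server knows about
        self.streams = {}               # stream name -> name of the publishing node
        network[name] = self

    def start(self):
        entry = self.network.get(self.entry_point)
        if entry is None:
            return  # entry point unreachable: wait for messages from other servers
        # Get the list of CDN nodes and published streams from the entry point.
        self.nodes |= entry.nodes
        self.streams.update(entry.streams)
        self._notify({"type": "node_up", "node": self.name})

    def publish(self, stream):
        self.streams[stream] = self.name
        self._notify({"type": "stream_published", "node": self.name, "stream": stream})

    def _notify(self, message):
        # Communicate a status change to every other known node.
        for name in self.nodes - {self.name}:
            self.network[name].receive(message)

    def receive(self, message):
        self.nodes.add(message["node"])
        if message["type"] == "stream_published":
            self.streams[message["stream"]] = message["node"]


network = {}
origin = Node("origin", network)
edge1 = Node("edge1", network, entry_point="origin")
edge2 = Node("edge2", network, entry_point="origin")
for node in (origin, edge1, edge2):
    node.start()
origin.publish("test")
print(sorted(origin.nodes), edge2.streams)
```

A node that starts later receives the already published streams in the entry point's response, so nothing has to be restarted or reconfigured on the existing servers.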

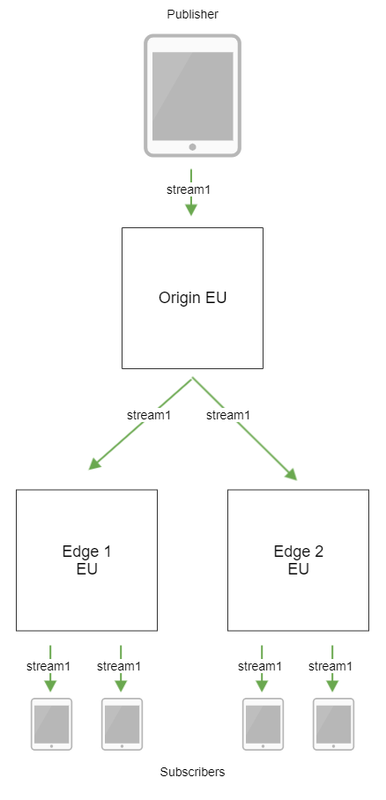

The simplest CDN: in the centre of Europe

Now let us set up and start a dynamic CDN. Assume first that we need to deliver media streams to up to 5000 viewers in Europe, and that the stream source is also located in Europe.

We are going to deploy three servers in a data center based in Europe. We will use Flashphoner WebCallServer (WebRTC stream video server) instances as the building blocks of the CDN.
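The choice of three servers agrees with a back-of-the-envelope estimate based on the figure of about 2000 viewers per server mentioned at the beginning. The helper name is hypothetical, and the estimate assumes that every server, including the Origin, also serves viewers.

```python
import math


def servers_needed(viewers: int, viewers_per_server: int = 2000) -> int:
    """Rough lower bound on the number of servers for a given audience."""
    return math.ceil(viewers / viewers_per_server)


print(servers_needed(5000))  # 3
```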

Setup:

- Origin EU

cdn_enabled=true
cdn_ip=o-eu1.flashponer.com
cdn_nodes_resolve_ip=false
cdn_role=origin

- Edge 1 EU

cdn_enabled=true
cdn_ip=e-eu1.flashphoner.com
cdn_point_of_entry=o-eu1.flashponer.com
cdn_nodes_resolve_ip=false
cdn_role=edge

- Edge 2 EU

cdn_enabled=true
cdn_ip=e-eu2.flashphoner.com
cdn_point_of_entry=o-eu1.flashponer.com
cdn_nodes_resolve_ip=false
cdn_role=edge

Message exchange between dynamic CDN nodes is implemented via Websocket, and Secure Websocket is certainly also supported.
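The settings of the three nodes above differ only in the role, the node address and the entry point, so they are easy to generate. The helper below is a hypothetical convenience script, not part of the server; it emits only the settings that appear in this article.

```python
from typing import Optional


def cdn_settings(role: str, ip: str, entry_point: Optional[str] = None) -> str:
    """Build the CDN settings block for one node, in the order used above."""
    lines = ["cdn_enabled=true", f"cdn_ip={ip}"]
    if entry_point is not None:
        # The node that starts the CDN has no entry point of its own.
        lines.append(f"cdn_point_of_entry={entry_point}")
    lines += ["cdn_nodes_resolve_ip=false", f"cdn_role={role}"]
    return "\n".join(lines)


print(cdn_settings("edge", "e-eu1.flashphoner.com", "o-eu1.flashponer.com"))
```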

The streams inside the CDN are broadcast via WebRTC. UDP is usually used as the transport, but if good streaming quality is needed over a poor channel between the servers, it is possible to switch to TCP. Unfortunately, the latency increases in this case.

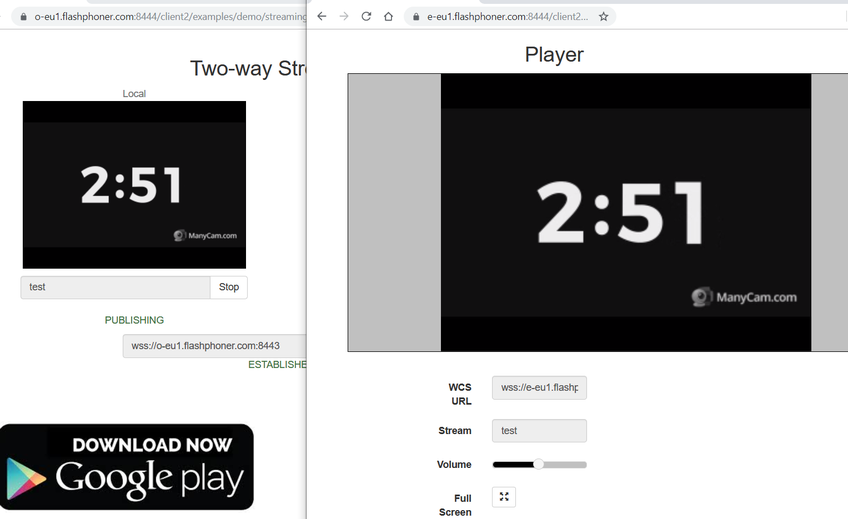

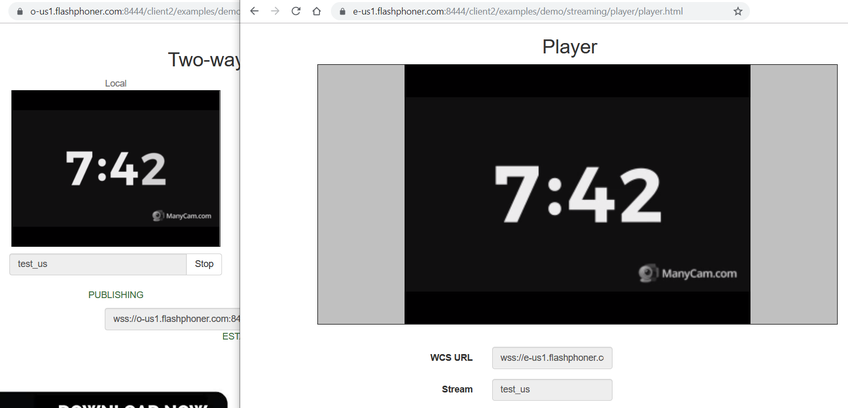

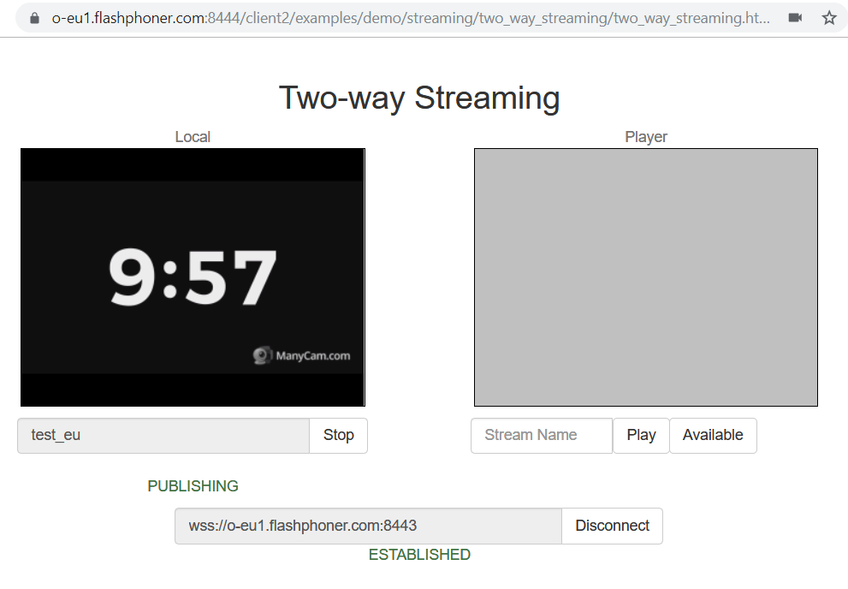

Restart the servers, open the Two Way Streaming example on the o-eu1 server and publish a looping video of a timer counting down from 10 minutes to 0.

Open the Player example on the e-eu1 server and play the stream.

Then do the same on e-eu2.

The CDN is working! As the screenshots show, the timer in the video matches to the second on the publishing side and on the viewing side, thanks to WebRTC and good channels.

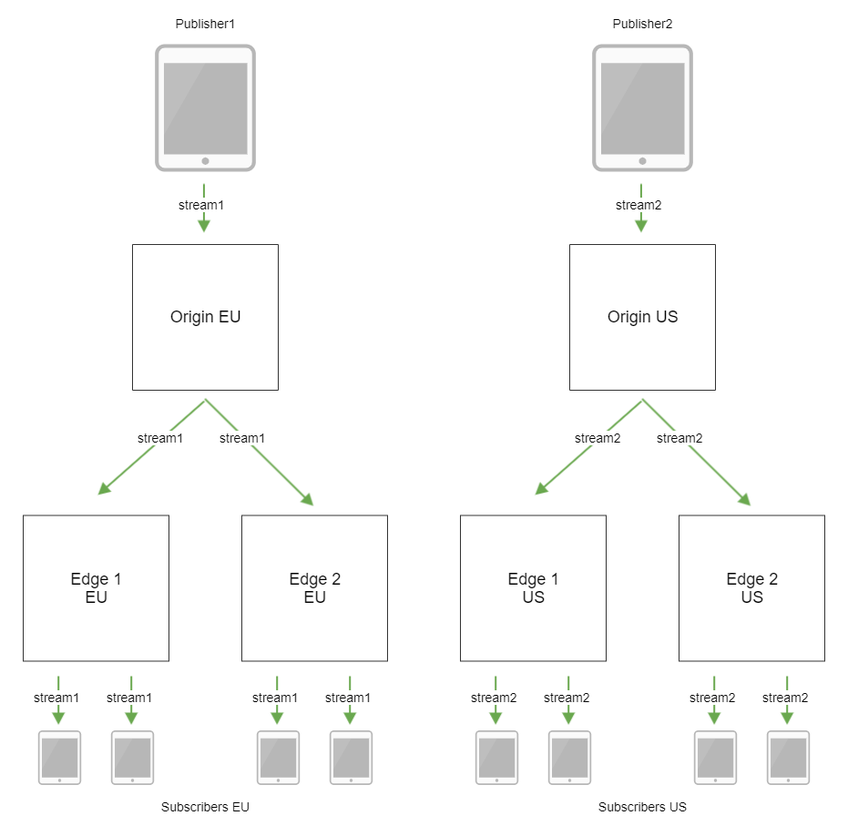

And beyond we go: connecting America

Next, we are going to deliver streams to viewers on the American continent, keeping in mind that streams will be published there as well.

We will deploy three servers in an American data center without shutting down the European part of the CDN.

Setup:

- Origin US

cdn_enabled=true
cdn_ip=o-us1.flashponer.com
cdn_point_of_entry=o-eu1.flashponer.com
cdn_nodes_resolve_ip=false
cdn_role=origin

- Edge 1 US

cdn_enabled=true
cdn_ip=e-us1.flashphoner.com
cdn_point_of_entry=o-eu1.flashponer.com
cdn_nodes_resolve_ip=false
cdn_role=edge

- Edge 2 US

cdn_enabled=true
cdn_ip=e-us2.flashphoner.com
cdn_point_of_entry=o-eu1.flashponer.com
cdn_nodes_resolve_ip=false
cdn_role=edge

Restart the American servers, then check the publishing and the playback.
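All nodes in both segments point to the same entry point, so a mistyped hostname in one file is easy to miss. The following sketch checks that every `cdn_point_of_entry` value matches the `cdn_ip` of some node. The helper names are hypothetical, and the check is an assumption about what makes a consistent setup, not a rule taken from the server documentation.

```python
def parse_settings(text):
    """Parse key=value lines into a dict, skipping blanks and # comments."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            settings[key.strip()] = value.strip()
    return settings


def unresolved_entry_points(nodes):
    """Return labels of nodes whose entry point matches no known cdn_ip."""
    known_ips = {s["cdn_ip"] for s in nodes.values() if "cdn_ip" in s}
    return sorted(
        label for label, s in nodes.items()
        if "cdn_point_of_entry" in s and s["cdn_point_of_entry"] not in known_ips
    )


nodes = {
    "Origin EU": parse_settings("cdn_ip=o-eu1.flashponer.com\ncdn_role=origin"),
    "Edge 1 US": parse_settings(
        "cdn_ip=e-us1.flashphoner.com\n"
        "cdn_point_of_entry=o-eu1.flashponer.com\n"
        "cdn_role=edge"
    ),
}
print(unresolved_entry_points(nodes))  # []
```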

Note that the European segment keeps working. Let us check whether American users can see a stream published from Europe: publish the test_eu stream on the o-eu1 server

and play it on e-us1.

And this is also working! As for the latency, the screenshots again show the timer in the video matching to the second on the publishing side and on the viewing side.

Note that by default a stream published on one Origin server cannot be played on another Origin server. If this is really needed, it can be enabled with the following setting:

cdn_origin_to_origin_route_propagation=true

To be continued

In summary, we have deployed a simple CDN and then successfully scaled it to two continents, publishing and playing low-latency WebRTC streams. So far we have not changed the stream parameters at playback, which is often needed in real life: viewers have different channels, and to maintain the streaming quality it may be necessary, for example, to reduce the resolution or the bitrate. We will deal with this in the following part...

Related links

Flashphoner WebCallServer image in DigitalOcean Marketplace — image of Web Call Server in DigitalOcean.

CDN for low latency WebRTC streaming — Web Call Server-based content delivery network.