There was a custom configuration management solution.

I would like to share a story about a project that used to rely on a custom configuration management solution. The migration away from it took 18 months. You may ask: "Why so long?" The answers below are about changing processes, agreements, and workflows.

Day № -XXX: Before the beginning

The infrastructure was a bunch of standalone Hyper-V servers. To create a VM, we had to perform several steps:

- Put the VM hard drives in a special location.

- Create a DNS record.

- Create a DHCP reservation.

- Save the VM configuration to a git repo.

The process was only partially automated. Unfortunately, we had to track resource usage & VM locations manually. Fortunately, developers could change a VM's configuration in the git repo, reboot the VM, and get a VM with the desired configuration.

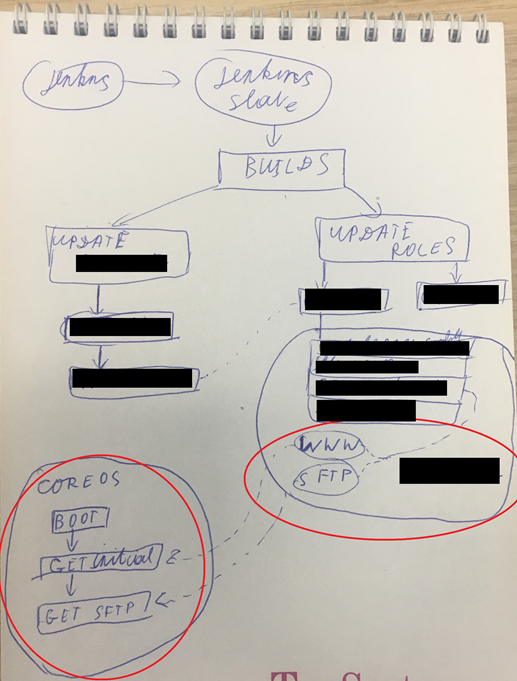

Custom Configuration Management Solution

I guess the original idea was to have IaC: a bunch of stateless VMs that reset their state after a reboot. How did it work?

- We created a MAC address reservation.

- We mounted an ISO & a special bootable hard drive to the VM.

- CoreOS started OS customization: it downloaded the appropriate script (chosen by the VM's IP) from a web server.

- The script downloaded the rest of the configuration via SCP.

- The rest of the configuration was a bunch of systemd units, compose files & bash scripts.
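To make the lookup step concrete, here is a minimal Python sketch of how a config web server might resolve a booting VM's script: the DHCP reservation pins a MAC address to an IP, and the IP picks the script. The reservation table, the `script_url` helper, the `<ip>.sh` naming scheme, and the `config.example.local` host are all hypothetical; the original solution's actual layout is not described here.

```python
import ipaddress

# Hypothetical DHCP reservations: MAC address -> fixed IPv4 address.
RESERVATIONS = {
    "00:15:5d:01:02:03": "10.0.0.21",
    "00:15:5d:01:02:04": "10.0.0.22",
}


def script_url(mac: str, reservations: dict, base_url: str) -> str:
    """Return the URL of the per-host boot script for a VM's MAC address.

    Assumes one script per IP, named '<ip>.sh' (a hypothetical convention).
    Raises KeyError if the MAC has no reservation.
    """
    ip = ipaddress.ip_address(reservations[mac.lower()])  # also validates the IP
    return f"{base_url.rstrip('/')}/{ip}.sh"


if __name__ == "__main__":
    print(script_url("00:15:5D:01:02:03", RESERVATIONS,
                     "http://config.example.local/scripts/"))
```

A MAC address without a reservation raises `KeyError`, which reflects how the whole chain hinged on that manual reservation step.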

There were some flaws:

- Booting CoreOS from an ISO was a deprecated approach.

- Too many manual actions.

- Hard to update, tricky to maintain.

- Installing specific kernel modules was a nightmare.

- Stateful VMs instead of the intended stateless ones.

- From time to time, people created broken dependencies between systemd unit files; as a result, CoreOS could not reboot without the magic SysRq key.

- Secret management.
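Broken dependencies like these can often be caught before a reboot. Below is a rough Python sketch, using only the standard library, that reads `After=`/`Before=` lines from unit files and looks for an ordering cycle. It is a simplified model (it ignores `Requires=`/`Wants=`, drop-in files, and templated units), the helper names are hypothetical, and the unit names are made up; on a real host, `systemd-analyze verify` is the tool to reach for first.

```python
from collections import defaultdict


def parse_ordering(units):
    """Map each unit to the set of units it must start after.

    `units` maps a unit file name to its text. Only After= and Before=
    in the [Unit] section are considered (a simplification).
    """
    waits_for = defaultdict(set)
    for name, text in units.items():
        section = None
        for raw in text.splitlines():
            line = raw.strip()
            if not line or line.startswith(("#", ";")):
                continue  # skip blank lines and comments
            if line.startswith("[") and line.endswith("]"):
                section = line[1:-1]
                continue
            if section != "Unit" or "=" not in line:
                continue
            key, _, value = line.partition("=")
            for other in value.split():  # values are space-separated lists
                if key.strip() == "After":
                    waits_for[name].add(other)   # name starts after other
                elif key.strip() == "Before":
                    waits_for[other].add(name)   # other starts after name
    return waits_for


def find_cycle(waits_for):
    """Return one ordering cycle as a list of unit names, or None (DFS)."""
    state = {}  # missing = unvisited, "active" = on DFS path, "done" = finished
    path = []

    def visit(node):
        state[node] = "active"
        path.append(node)
        for nxt in sorted(waits_for.get(node, ())):
            if state.get(nxt) == "active":
                return path[path.index(nxt):] + [nxt]  # back edge closes a cycle
            if nxt not in state:
                found = visit(nxt)
                if found:
                    return found
        path.pop()
        state[node] = "done"
        return None

    for node in sorted(waits_for):
        if node not in state:
            found = visit(node)
            if found:
                return found
    return None


if __name__ == "__main__":
    units = {
        "app.service": "[Unit]\nAfter=db.service\n\n[Service]\nExecStart=/usr/bin/app\n",
        "db.service": "[Unit]\nAfter=app.service\n",
    }
    print(find_cycle(parse_ordering(units)))
    # ['app.service', 'db.service', 'app.service']
```

Running a check like this in CI on every change to the unit files would flag a cycle before any VM has to be rebooted.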

One could say there was no CM at all, just a pile of organized bash scripts & systemd unit files.

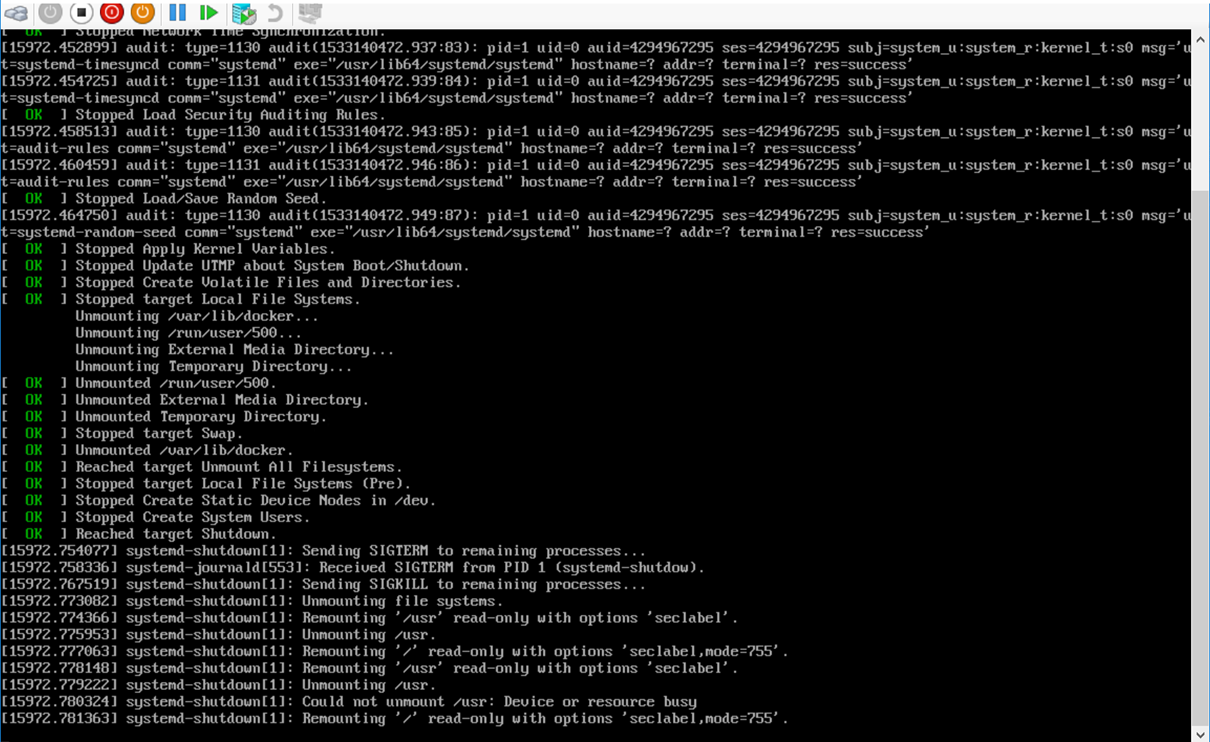

Day №0: Ok. We have a problem

It was a standard environment for development and testing: Jenkins, test environments, monitoring, a registry, etc. The CoreOS developers built it as an underlying OS for k8s or Rancher. So our problem was that we were using a good tool in the wrong way. The first step was to determine the desired technology stack. Our idea was:

- CentOS as the base OS, because it was close enough to the production environments.

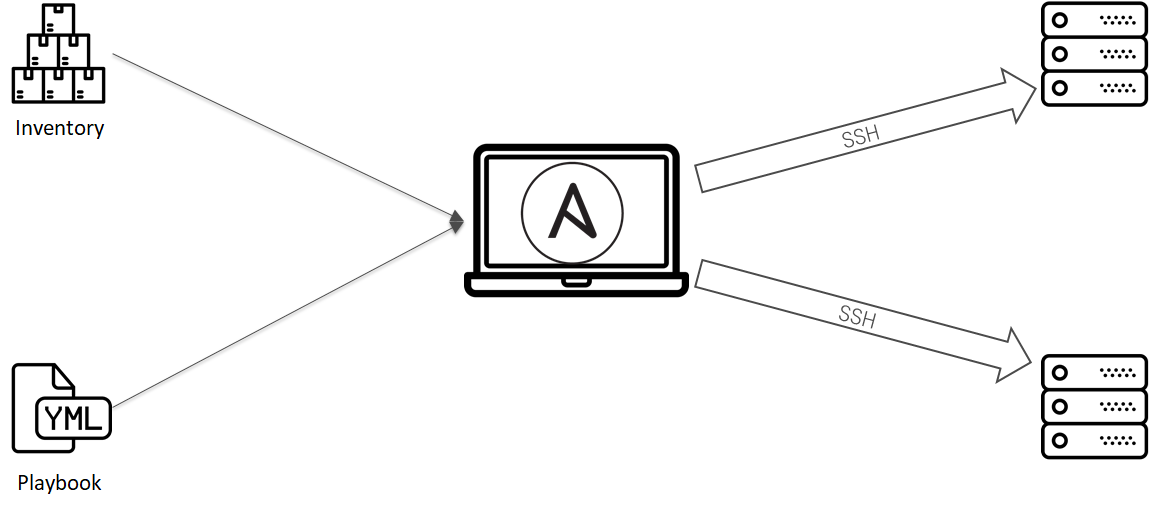

- Ansible for configuration management, because we had enough expertise.

- Jenkins as a framework for automating our workflows, agreements & processes. We chose it because we already used it as part of the release workflow.

- Hyper-V as the virtualization platform, for reasons outside the scope of this story. Long story short: we were not allowed to use public clouds & we had to use Microsoft products in our infrastructure.

Day №30: Agreements as Code

The next step was to codify our requirements and establish contracts, in other words, to have Agreements as Code. The path had to be: manual actions > mechanization > automation.

There were several processes. Let us go through them one by one.

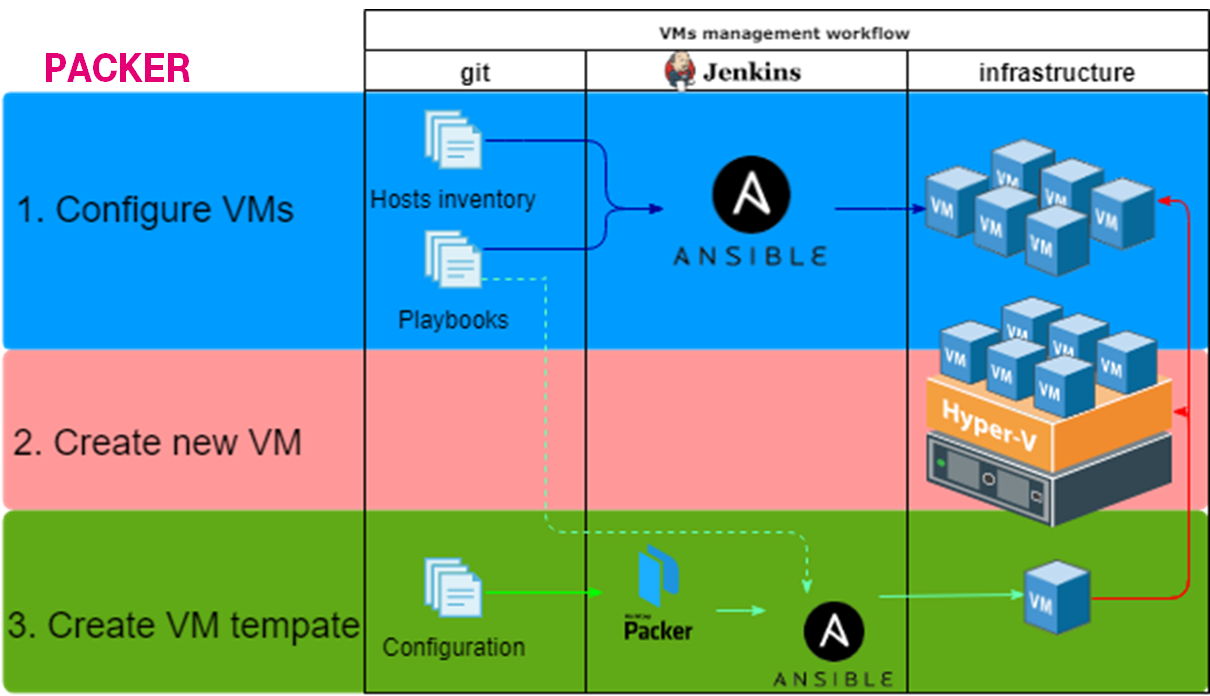

1. Configure VMs

- Created a git repository.

- Put the list of VMs into the inventory and the configuration into playbooks & roles.

- Configured a dedicated Jenkins slave for running Ansible playbooks.

- Created & configured Jenkins pipeline.
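As a rough illustration of this setup, the Python sketch below renders a VM list into an INI-style Ansible inventory and builds the `ansible-playbook` command a Jenkins stage could run. The group names, host names, and the `inventory/hosts` and `site.yml` paths are hypothetical; `-i`, `--limit`, `--check`, and `--diff` are standard `ansible-playbook` options.

```python
import shlex


def render_inventory(groups):
    """Render {group: [hosts]} as an INI-style Ansible inventory."""
    lines = []
    for group in sorted(groups):
        lines.append(f"[{group}]")
        lines.extend(sorted(groups[group]))
        lines.append("")  # blank line between groups
    return "\n".join(lines)


def playbook_argv(inventory, playbook, limit=None, dry_run=False):
    """Build an ansible-playbook argument list for a CI job."""
    argv = ["ansible-playbook", "-i", inventory, playbook]
    if limit:
        argv += ["--limit", limit]      # apply only to selected hosts/groups
    if dry_run:
        argv += ["--check", "--diff"]   # report changes without applying them
    return argv


if __name__ == "__main__":
    print(render_inventory({
        "jenkins": ["jenkins-01.example.local"],
        "monitoring": ["grafana-01.example.local"],
    }))
    print(shlex.join(playbook_argv("inventory/hosts", "site.yml",
                                   limit="monitoring", dry_run=True)))
```

Keeping the inventory in git means adding a VM becomes a reviewed commit rather than a manual step, which is one concrete way to read the Agreements as Code idea.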

2. Create new VM

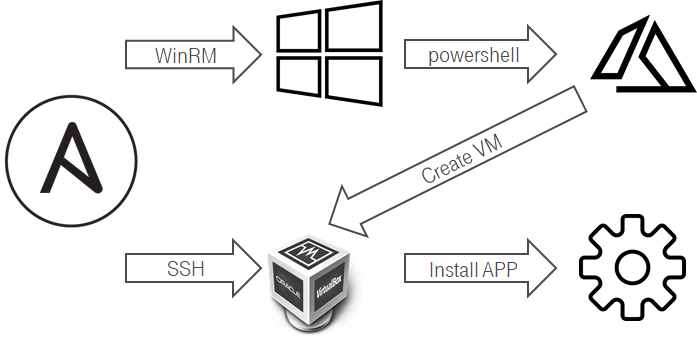

It was no picnic: creating a VM on Hyper-V from Linux was a bit tricky:

- Ansible connected to a Windows host via WinRM.

- Ansible ran a PowerShell script.

- The PowerShell script created a new VM.

- Hyper-V/SCVMM customized the VM, e.g. set its hostname.

- The VM sent its hostname in its DHCP request.

- The DDNS & DHCP integration on the Domain Controller side created the DNS record.

- We added the VM to the Ansible inventory & applied the configuration.
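The PowerShell step is where most of the Windows-specific details live. The Python sketch below only builds the script text that Ansible would send over WinRM (for example with the `ansible.windows.win_shell` module), using the Hyper-V `New-VM` and `Start-VM` cmdlets. The VM name, memory and disk sizes, VHD directory, and switch name are hypothetical, and an SCVMM-based setup would use SCVMM's own cmdlets instead.

```python
def ps_quote(value: str) -> str:
    """Quote a value as a PowerShell single-quoted string literal."""
    return "'" + value.replace("'", "''") + "'"  # '' escapes a quote


def new_vm_script(name, memory_gb, disk_gb, vhd_dir, switch, generation=2):
    """Return a PowerShell script that creates and starts a Hyper-V VM.

    All argument values are illustrative; sizes are converted to bytes.
    """
    gib = 1024 ** 3
    vhd_path = vhd_dir.rstrip("\\") + "\\" + name + ".vhdx"
    return "\n".join([
        "$ErrorActionPreference = 'Stop'",  # make any cmdlet error fail the task
        f"New-VM -Name {ps_quote(name)} -Generation {generation} "
        f"-MemoryStartupBytes {memory_gb * gib} "
        f"-NewVHDPath {ps_quote(vhd_path)} -NewVHDSizeBytes {disk_gb * gib} "
        f"-SwitchName {ps_quote(switch)} | Out-Null",
        f"Start-VM -Name {ps_quote(name)}",
    ])


if __name__ == "__main__":
    print(new_vm_script("ci-agent-07", 4, 40, "D:\\VMs\\", "LAN"))
```

Creating the VM is only half the job: in the flow described above, the hostname, DHCP, and DNS parts were handled by Hyper-V/SCVMM and the Domain Controller, not by this script.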

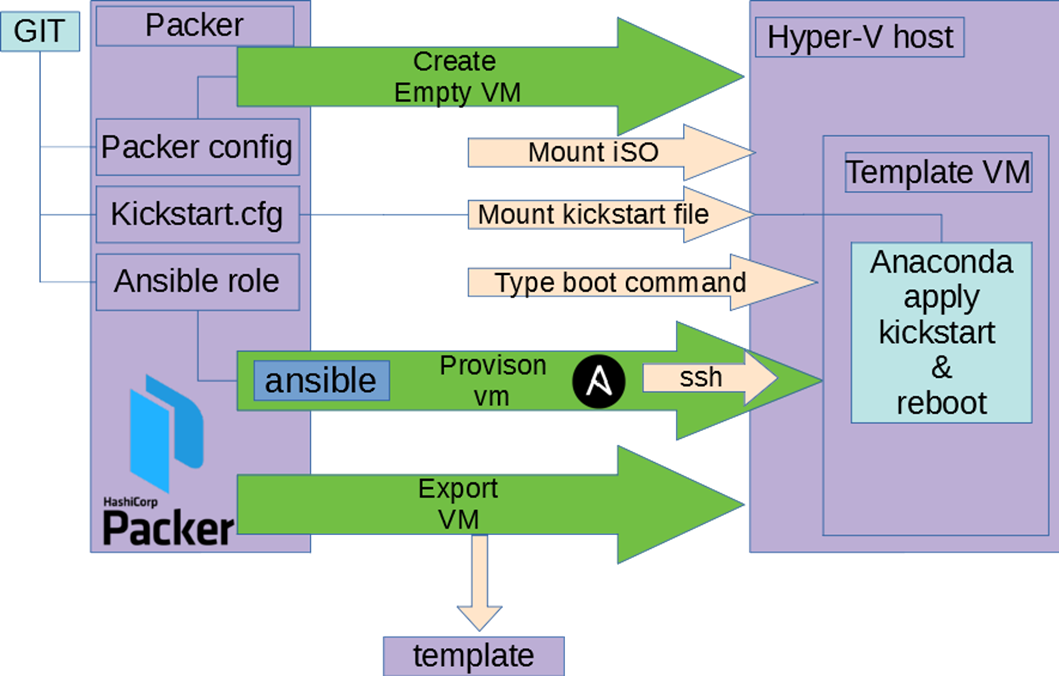

3. Create VM template

We decided not to reinvent the wheel and used Packer:

- Put the Packer config & kickstart file into the git repository.

- Configured a Jenkins slave with Hyper-V & Packer.

- Created & configured Jenkins pipeline.

It worked quite simply:

- Packer created an empty VM & mounted an ISO.

- The VM booted, and Packer typed a boot command into GRUB.

- The boot command pointed the installer to the kickstart file, served either by Packer's built-in web server or from a floppy drive provided by Packer.

- Anaconda started with the kickstart file & installed the base OS.

- Packer waited until an SSH connection to the VM became available.

- Packer ran Ansible in local mode inside the VM.

- Ansible used exactly the same roles as in use case №1.

- Packer exported the template.
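For reference, the Python sketch below generates a JSON Packer template that follows these steps with the `hyperv-iso` builder and the `ansible-local` provisioner. Everything specific in it is an assumption: the ISO URL and checksum placeholder, credentials, switch name, playbook and role paths, and especially the `boot_command`, which depends on the exact GRUB menu of the ISO. Recent Packer releases favour HCL2 templates; the JSON format used here is the older one. Note that `ansible-local` expects Ansible to already be installed in the guest, e.g. by the kickstart file.

```python
import json

# Packer template variables; Packer substitutes them at build time.
KICKSTART_URL = "http://{{ .HTTPIP }}:{{ .HTTPPort }}/ks.cfg"


def packer_template(iso_url, iso_checksum, playbook, role_dirs):
    """Return a JSON Packer template (all values are illustrative)."""
    builder = {
        "type": "hyperv-iso",
        "generation": 2,                 # UEFI VM, so the installer boots via GRUB
        "iso_url": iso_url,
        "iso_checksum": iso_checksum,
        "http_directory": "http",        # Packer serves ks.cfg from this directory
        "boot_wait": "5s",
        # Assumed GRUB layout: edit the default entry, append inst.ks, boot with Ctrl+X.
        "boot_command": [
            "e<down><down><end> inst.ks=" + KICKSTART_URL
            + "<leftCtrlOn>x<leftCtrlOff>"
        ],
        "communicator": "ssh",
        "ssh_username": "root",
        "ssh_password": "packer",        # placeholder; must match ks.cfg
        "ssh_timeout": "30m",            # how long Packer waits for SSH
        "switch_name": "LAN",            # hypothetical virtual switch
        "memory": 2048,                  # MB
        "disk_size": 40960,              # MB
        "shutdown_command": "shutdown -P now",
        "output_directory": "output-centos7",  # exported VM ends up here
    }
    provisioner = {
        "type": "ansible-local",         # runs Ansible inside the VM
        "playbook_file": playbook,
        "role_paths": list(role_dirs),   # each entry is one role directory
    }
    return json.dumps({"builders": [builder], "provisioners": [provisioner]},
                      indent=2)


if __name__ == "__main__":
    print(packer_template("http://mirror.example.local/centos7.iso",
                          "sha256:REPLACE_WITH_REAL_CHECKSUM",
                          "site.yml", ["roles/common"]))
```

Writing the JSON to a file (say, a hypothetical `centos7.json`) and running `packer build centos7.json` from the Jenkins job would cover the pipeline part.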

Day №75: Refactor agreements & break nothing = Test Ansible roles

Agreements as Code were not enough for us. The amount of IaC was growing, and the agreements kept changing. We faced the problem of keeping our knowledge about the infrastructure in sync across the team. The solution was to test Ansible roles. You can read about that process in "Test me if you can. Do YML developers Dream of testing ansible?" or, more generally, in "How to test Ansible and don't go nuts".
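One check that fits this kind of role testing is idempotence: applying a role a second time should change nothing. The Python sketch below parses the `PLAY RECAP` section of a second `ansible-playbook` run and reports whether every host finished with `changed=0`, `failed=0`, and `unreachable=0`. It is not the tooling from the articles above (Molecule, for instance, has its own built-in idempotence step); the helper names, sample output, and host name are made up.

```python
import re

# A recap line looks like: "host : ok=5 changed=0 unreachable=0 failed=0 ..."
RECAP_LINE = re.compile(r"^(\S+)\s*:\s*((?:\w+=\d+\s*)+)$")

SAMPLE_SECOND_RUN = """\
PLAY [all] *********************************************************************

TASK [common : install packages] ***********************************************
ok: [web-01]

PLAY RECAP *********************************************************************
web-01                     : ok=3    changed=0    unreachable=0    failed=0    skipped=0    rescued=0    ignored=0
"""


def parse_recap(output):
    """Return {host: {counter: value}} from ansible-playbook output."""
    stats = {}
    in_recap = False
    for raw in output.splitlines():
        line = raw.strip()
        if line.startswith("PLAY RECAP"):
            in_recap = True  # counters only appear after this header
            continue
        match = RECAP_LINE.match(line) if in_recap else None
        if match:
            host, counters = match.groups()
            stats[host] = {k: int(v) for k, v in re.findall(r"(\w+)=(\d+)", counters)}
    return stats


def is_idempotent(second_run_output):
    """True if the second run reported no changes and no errors on any host."""
    stats = parse_recap(second_run_output)
    return bool(stats) and all(
        s.get("changed", 0) == 0
        and s.get("failed", 0) == 0
        and s.get("unreachable", 0) == 0
        for s in stats.values()
    )


if __name__ == "__main__":
    print(is_idempotent(SAMPLE_SECOND_RUN))
```

An empty recap counts as a failure on purpose: if the parser finds no hosts, the run probably did not do what the test assumed.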

Day №130: What about OpenShift? Is it better than Ansible + CentOS?

As I mentioned, our infrastructure was like a living creature: it was growing and changing. As part of that process & the development process, we had to find out whether we could run our application inside OpenShift/k8s. For the details, read "Let us deploy to openshift". Unfortunately, we were not able to reuse OpenShift inside the development infrastructure.

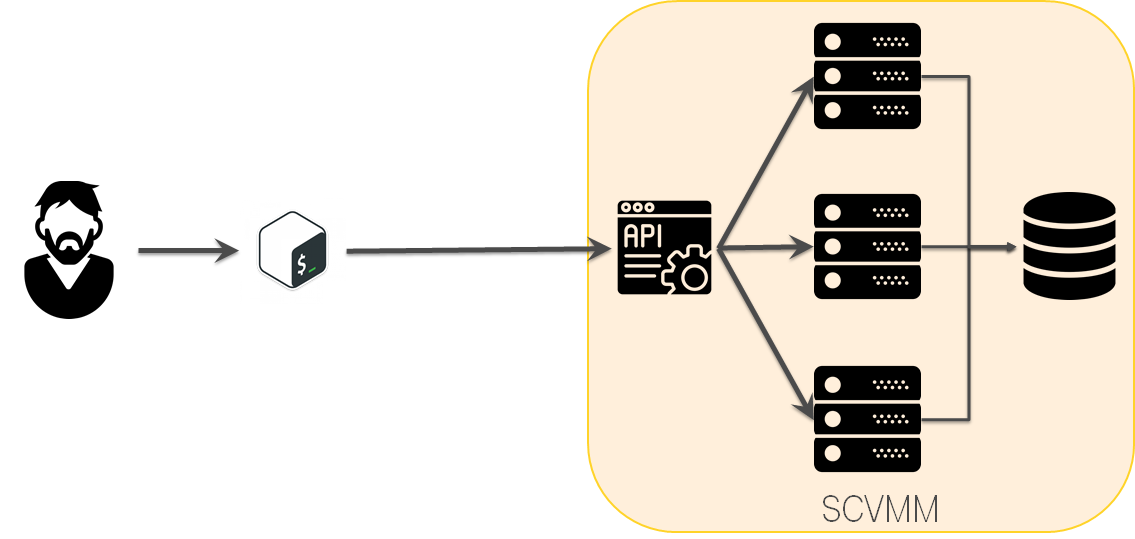

Day №170: Let us try Windows Azure Pack

Hyper-V & SCVMM were not user-friendly enough for us. There was a much more interesting option: Windows Azure Pack, an SCVMM extension. It looked like Windows Azure and provided an HTTP REST API. Unfortunately, in reality it was an abandoned project. Still, we spent time researching it.

Day №250: Windows Azure Pack is so-so. SCVMM is our choice

Windows Azure Pack looked interesting, but we decided it was too risky to use, so we stuck with SCVMM.

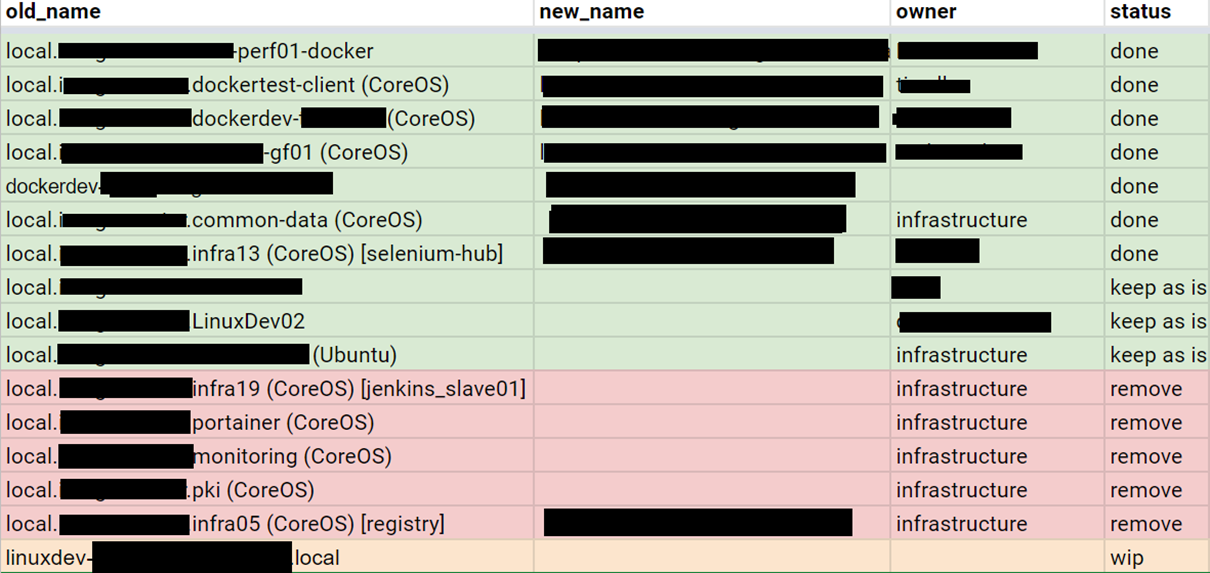

Day №360: Yak shaving

As you can see, it took a year to build the foundation for the migration. The migration had to be S.M.A.R.T. We made a list of VMs & started yak shaving: we dealt with the old VMs one by one, creating Ansible roles & covering them with tests.

Day №450: Migration

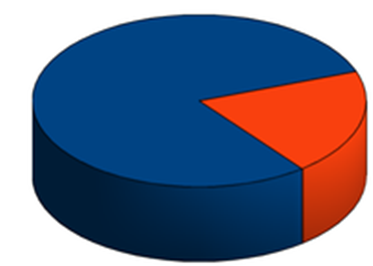

The migration was a largely deterministic process. It followed the Pareto principle:

- 80% of the time was spent on preparation & 20% on migration.

- Rewriting 80% of the VM configurations took 20% of our time.

Day №540: Lessons learned

- Agreements as Code.

- Manual actions -> mechanization -> automation.

Links

This is the text version of my talk at DevopsConf 2019-10-01 and SPbLUG 2019-09-25 (slides).