If you use static analyzers, you will have, sooner or later, to address the task of making their integration into existing projects easier, where fixing all warnings on legacy code is unfeasible.

The purpose of this article is not to help with integration but rather to elaborate on the technicalities of the process: the exact implementations of warning suppression mechanisms and pros and cons of each approach.

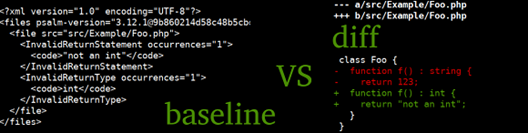

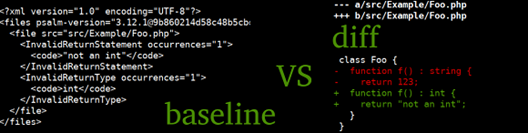

This approach is known by various names: baseline file in Psalm and Android Lint, suppress base (or profile) in PVS-Studio, code smell baseline in detekt.

This file is generated by the linter when run on the project:

Inside, it stores all the warnings produced at the creation step.

When running a static analyzer with the baseline.xml file, all the warnings contained in it will be ignored:

A straightforward approach, where warnings are kept in full along with line numbers, won't be working well enough: adding new code to the beginning of the source file will result in shifting the lines and, therefore, bringing back all the warnings meant to stay hidden.

We typically seek to accomplish the following goals:

What we can configure in this approach is which fields of the warning to include to form its hash value (or "signature"). To avoid problems related to the shifting of line numbers, don't include the line number in the list of these fields.

Here is an example list of fields that can form a warning signature:

The more properties used, the lower the risk of collision, but also the higher the risk of getting an unexpected warning due to signature invalidation. If any of the specified properties changes, the warning will no longer be ignored.

Along with the warning-triggering line, PVS-Studio stores the line before and the line after it. This helps better identify the triggering line, but with this approach, you may start getting the warning after modifying a neighboring line.

Another – less obvious – property is the name of the function or method the warning was issued for. This helps reduce the number of collisions but renaming the function will cause a storm of warnings on it.

It was empirically found that using this property allows you to weaken the filename field and store only the base name rather than the full path. This enables moving files between directories without the signature getting invalidated. In languages like C#, PHP, or Java, where the file name usually reflects the class name, such moves may have sense.

A well composed set of properties makes the baseline approach more effective.

Suppose we have a diagnostic, W104, that detects calls to die in the source code.

The project under analysis has the file foo.php:

The properties we use are {file name, diagnostic code, source line}.

When creating a baseline, the analyzer adds the call die('test') to its ignore base:

Now, let's add some more code:

All properties of the new call die('test') are exactly the same as those forming the signature of the fragment to be ignored. That's what we call a collision: a coincidence of warning signatures for potentially different code fragments.

One way of solving this issue is to add an additional field to distinguish between the two calls – say, "name of containing function".

But what if the new die('test') call is added to the same function? Neighboring lines may be the same in both cases, so including the previous and next lines in the signature won't help.

This is where the counter of signatures with collisions comes in handy. It will let us know that we get two or more warnings when only one was expected inside a function – then all warnings but the first must be shown.

With this solution, however, we somewhat lose in precision: you can't determine which line is newly added and which already existed. The analyzer will report the line following the ignored ones.

The original goal was to have warnings issued only on "newly written" code. This is where version control systems can be useful.

The revgrep utility receives a flow of warnings at stdin, analyzes the git diff, and outputs only warnings produced for new lines.

If you take this path, you'll have to answer the following questions:

Also keep in mind that sometimes you still want to get warnings outside the scope of the diff. For example, suppose you deleted a meme director class, MemeDirector. If that class was mentioned in any doc comments, you'd like the linter to tell you about that.

We need not only to get a correct set of affected lines but also to expand it so as to trace the side effects of the changes throughout the whole project.

The commit range can also be different. You wouldn't probably want to check the last commit only because in that case you'd be able to push two commits at once: one with the warnings, and the other for CI traversal. Even if done unintentionally, this poses a risk of overlooking a critical defect. Also keep in mind that the previous commit can be taken from the master branch, in which case it shouldn't be checked either.

NoVerify has two working modes: diff and full diff.

The regular diff can find warnings on files affected by modifications within the specified commit range. It's fast, but it doesn't provide thorough analysis of dependencies, and so new warnings on unaffected files can't be found.

The full diff runs the analyzer twice: first on existing code and then on new code, with subsequent filtering of results. This is similar to generating a baseline file on the fly based on the ability to get the previous version of the code using git. As you would expect, execution time increases almost twofold in this mode.

The initially suggested scenario was to run the faster analysis on pre-push hooks – in diff mode – so that feedback comes as soon as possible; then run the full diff mode on CI agents. As a result, people would ask why issues were found on agents but none were found locally. It's more convenient to have identical analysis processes so that passing a pre-push hook guarantees passing the linter's CI phase.

We can make an analog of full diff but without having to run double analysis.

Suppose we've got the following line in the diff:

If we try to classify this line, we'll tag it as "Foo class deletion".

Each diagnostic that in any way depends on the class being present must issue a warning if this class got deleted.

Similarly, when deleting variables (whether global or local) and functions, we have a collection of facts generated about all changes that we can classify.

Renames don't require additional processing. We view it as deleting the character with the old name and adding a character with the new one:

The biggest difficulty here is to correctly classify lines with changes and reveal all their dependencies without slowing down the algorithm to the speed of full diff with double traversal of the code base.

baseline: simple approach used in many analyzers. The obvious downside to it is that you will have to place this baseline file somewhere and update it every now and then. The more appropriate the collection of properties forming the warning signature, the more accuracy.

diff: simple in basic implementation but complicated if you want to achieve the best result possible. Theoretically, this approach can provide the highest accuracy. Your customers won't be able to integrate the analyzer into their process unless they use a version control system.

Hybrid approaches are also used: for example, you first take the baseline file and then resolve collisions and calculate line shifts using git.

Personally, I find the diff approach more elegant, but the one-pass implementation of full analysis may be too problematic and fragile.

The purpose of this article is not to help with integration but rather to elaborate on the technicalities of the process: the exact implementations of warning suppression mechanisms and pros and cons of each approach.

baseline, or the so-called suppress profile

This approach is known by various names: baseline file in Psalm and Android Lint, suppress base (or profile) in PVS-Studio, code smell baseline in detekt.

This file is generated by the linter when run on the project:

superlinter --create-baseline baseline.xml ./projectInside, it stores all the warnings produced at the creation step.

When running a static analyzer with the baseline.xml file, all the warnings contained in it will be ignored:

superlinter --baseline baseline.xml ./projectA straightforward approach, where warnings are kept in full along with line numbers, won't be working well enough: adding new code to the beginning of the source file will result in shifting the lines and, therefore, bringing back all the warnings meant to stay hidden.

We typically seek to accomplish the following goals:

- All warnings on new code must be issued

- Warnings on existing code must be issued only if it was modified

- (optional) Allow moving files or code fragments

What we can configure in this approach is which fields of the warning to include to form its hash value (or "signature"). To avoid problems related to the shifting of line numbers, don't include the line number in the list of these fields.

Here is an example list of fields that can form a warning signature:

- Diagnostic name or code

- Warning message

- File name

- Source line that triggers the warning

The more properties used, the lower the risk of collision, but also the higher the risk of getting an unexpected warning due to signature invalidation. If any of the specified properties changes, the warning will no longer be ignored.

Along with the warning-triggering line, PVS-Studio stores the line before and the line after it. This helps better identify the triggering line, but with this approach, you may start getting the warning after modifying a neighboring line.

Another – less obvious – property is the name of the function or method the warning was issued for. This helps reduce the number of collisions but renaming the function will cause a storm of warnings on it.

It was empirically found that using this property allows you to weaken the filename field and store only the base name rather than the full path. This enables moving files between directories without the signature getting invalidated. In languages like C#, PHP, or Java, where the file name usually reflects the class name, such moves may have sense.

A well composed set of properties makes the baseline approach more effective.

Collisions in a baseline method

Suppose we have a diagnostic, W104, that detects calls to die in the source code.

The project under analysis has the file foo.php:

function legacy() {

die('test');

}The properties we use are {file name, diagnostic code, source line}.

When creating a baseline, the analyzer adds the call die('test') to its ignore base:

{

"filename": "foo.php",

"diag": "W104",

"line": "die('test');"

}Now, let's add some more code:

+ function newfunc() {

+ die('test');

+ }

function legacy() {

die('test');

}All properties of the new call die('test') are exactly the same as those forming the signature of the fragment to be ignored. That's what we call a collision: a coincidence of warning signatures for potentially different code fragments.

One way of solving this issue is to add an additional field to distinguish between the two calls – say, "name of containing function".

But what if the new die('test') call is added to the same function? Neighboring lines may be the same in both cases, so including the previous and next lines in the signature won't help.

This is where the counter of signatures with collisions comes in handy. It will let us know that we get two or more warnings when only one was expected inside a function – then all warnings but the first must be shown.

With this solution, however, we somewhat lose in precision: you can't determine which line is newly added and which already existed. The analyzer will report the line following the ignored ones.

Approach based on diff capabilities of VCSs

The original goal was to have warnings issued only on "newly written" code. This is where version control systems can be useful.

The revgrep utility receives a flow of warnings at stdin, analyzes the git diff, and outputs only warnings produced for new lines.

golangci-lint employs revgrep's fork as a library, so it uses just the same algorithms for diff calculations.

If you take this path, you'll have to answer the following questions:

- What commit range to choose for calculating the diff?

- How are you going to process commits coming from the master branch (merge/rebase)?

Also keep in mind that sometimes you still want to get warnings outside the scope of the diff. For example, suppose you deleted a meme director class, MemeDirector. If that class was mentioned in any doc comments, you'd like the linter to tell you about that.

We need not only to get a correct set of affected lines but also to expand it so as to trace the side effects of the changes throughout the whole project.

The commit range can also be different. You wouldn't probably want to check the last commit only because in that case you'd be able to push two commits at once: one with the warnings, and the other for CI traversal. Even if done unintentionally, this poses a risk of overlooking a critical defect. Also keep in mind that the previous commit can be taken from the master branch, in which case it shouldn't be checked either.

diff mode in NoVerify

NoVerify has two working modes: diff and full diff.

The regular diff can find warnings on files affected by modifications within the specified commit range. It's fast, but it doesn't provide thorough analysis of dependencies, and so new warnings on unaffected files can't be found.

The full diff runs the analyzer twice: first on existing code and then on new code, with subsequent filtering of results. This is similar to generating a baseline file on the fly based on the ability to get the previous version of the code using git. As you would expect, execution time increases almost twofold in this mode.

The initially suggested scenario was to run the faster analysis on pre-push hooks – in diff mode – so that feedback comes as soon as possible; then run the full diff mode on CI agents. As a result, people would ask why issues were found on agents but none were found locally. It's more convenient to have identical analysis processes so that passing a pre-push hook guarantees passing the linter's CI phase.

full diff in one pass

We can make an analog of full diff but without having to run double analysis.

Suppose we've got the following line in the diff:

- class Foo {If we try to classify this line, we'll tag it as "Foo class deletion".

Each diagnostic that in any way depends on the class being present must issue a warning if this class got deleted.

Similarly, when deleting variables (whether global or local) and functions, we have a collection of facts generated about all changes that we can classify.

Renames don't require additional processing. We view it as deleting the character with the old name and adding a character with the new one:

- class MemeManager {

+ class SeniorMemeManager {The biggest difficulty here is to correctly classify lines with changes and reveal all their dependencies without slowing down the algorithm to the speed of full diff with double traversal of the code base.

Conclusions

baseline: simple approach used in many analyzers. The obvious downside to it is that you will have to place this baseline file somewhere and update it every now and then. The more appropriate the collection of properties forming the warning signature, the more accuracy.

diff: simple in basic implementation but complicated if you want to achieve the best result possible. Theoretically, this approach can provide the highest accuracy. Your customers won't be able to integrate the analyzer into their process unless they use a version control system.

| baseline | diff |

|---|---|

| + can be easily made powerful | + doesn't require storing an ignore file |

| + easy to implement and configure | + easier to distinguish between new and existing code |

| − collisions must be resolved | − takes much effort to prepare properly |

Hybrid approaches are also used: for example, you first take the baseline file and then resolve collisions and calculate line shifts using git.

Personally, I find the diff approach more elegant, but the one-pass implementation of full analysis may be too problematic and fragile.