RTSP is a simple signaling protocol that nobody has managed to replace for many years now, and, admittedly, nobody is trying very hard.

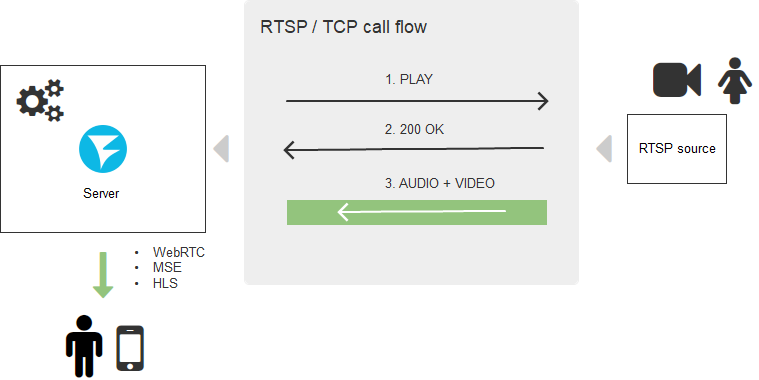

Say we have an IP camera that supports RTSP. Anyone who has ever sniffed its traffic with Wireshark will tell you that first comes DESCRIBE, then SETUP and PLAY, and then the traffic starts pouring in, either directly over RTP or, for instance, interleaved in the RTSP TCP connection.

A typical scheme for establishing an RTSP connection looks like this:
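RTSP requests are plain text, much like HTTP. Below is a minimal sketch that only builds the request strings (no networking) for the usual OPTIONS, DESCRIBE, SETUP, PLAY sequence. The camera URL, the `trackID=0` control suffix and the session ID are hypothetical: a real client takes the control URL from the SDP returned by DESCRIBE and the session ID from the SETUP response.

```javascript
// Build one RTSP/1.0 request (RFC 2326): request line, CSeq, extra headers, blank line.
function rtspRequest(method, url, cseq, headers = {}) {
  const lines = [`${method} ${url} RTSP/1.0`, `CSeq: ${cseq}`];
  for (const [name, value] of Object.entries(headers)) {
    lines.push(`${name}: ${value}`);
  }
  // Like HTTP, RTSP uses CRLF line endings and an empty line after the headers.
  return lines.join("\r\n") + "\r\n\r\n";
}

// The usual sequence for pulling a stream from a camera.
// Hypothetical values: camera URL, track control URL and session ID.
function playbackHandshake(cameraUrl, sessionId) {
  return [
    rtspRequest("OPTIONS", cameraUrl, 1),
    rtspRequest("DESCRIBE", cameraUrl, 2, { Accept: "application/sdp" }),
    // Ask the camera to send RTP interleaved in this same TCP connection.
    rtspRequest("SETUP", `${cameraUrl}/trackID=0`, 3, {
      Transport: "RTP/AVP/TCP;unicast;interleaved=0-1",
    }),
    rtspRequest("PLAY", cameraUrl, 4, { Session: sessionId, Range: "npt=0.000-" }),
  ];
}

const requests = playbackHandshake("rtsp://192.168.1.10:554/stream1", "12345678");
requests.forEach((r) => console.log(r));
```

Each request is answered with an `RTSP/1.0 200 OK` reply carrying the same CSeq; once PLAY succeeds, media starts flowing.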

Browsers apparently consider RTSP support about as useful as a fifth wheel, so they are in no hurry to implement it on a massive scale, and they probably never will. Browsers could instead allow direct TCP connections, which would solve the problem, but that runs into security issues: have you ever seen a browser that lets a script use transport protocols directly?

But people demand that streams be available in “any browser without installing any additional software”, and startups that want to show a stream from an IP camera write on their websites, “You don’t have to install anything, it works in all browsers out of the box”.

In this article we are going to explore how this can be achieved. And since the upcoming year is a round number, let’s make the article topical and stick a 2020 label on it, especially as it really applies.

So, which video display technology for web pages should be forgotten in 2020? Flash in the browser. It is dead. It doesn’t exist anymore; cross it off the list.

Three useful methods

The tools that let you watch a video stream in your browser today are:

- WebRTC

- HLS

- Websocket + MSE

What is wrong with WebRTC

In two words: it is resource-intensive and complicated.

You might wave this away: “What do you mean, resource-intensive?” CPUs are powerful nowadays and memory is cheap, so what’s the problem? Well, firstly, all traffic is compulsorily encrypted, even if you do not need it. Secondly, WebRTC is a complicated two-way connection with a continuous peer-to-peer (in this case, peer-to-server) exchange of feedback about channel quality: at every moment the bitrate is calculated, packet losses are detected, decisions about retransmitting lost packets are made, and, with all of that taken into account, audio and video are synchronized (the so-called lipsync, which makes the speaker’s lips match their words). All these calculations, together with the incoming traffic on the server, allocate and free gigabytes of RAM in real time, and if anything goes wrong, a 256-gigabyte server with a 48-core CPU can easily go into a tailspin despite all the gigahertz, nanometers, and DDR 10 on board.

So it’s a bit like hunting squirrels with an Iskander missile. All we need is to pull an RTSP stream and display it, and what WebRTC says is, “Sure, c’mon, but you’ll have to pay for it.”

Why WebRTC is good

The latency. It is really low. If you are willing to trade performance and simplicity for low latency, WebRTC is the most suitable option for you.

Why HLS is good

In two words: it works everywhere.

HLS is the slow off-roader of live content delivery. It works everywhere thanks to two things: HTTP transport and Apple’s patronage. Indeed, HTTP is omnipresent; I can feel its presence even as I write these lines. So no matter where you are and what ancient tablet you use to surf the net, HLS (HTTP Live Streaming) will reach you and deliver the video to your screen.
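Part of why HLS travels so well is that it is nothing more than files over HTTP: a text playlist (`.m3u8`) listing the most recent media segments, plus the segment files themselves. The sketch below renders a live media playlist for a sliding window of segments. The tags are the standard ones from the HLS specification (RFC 8216); the segment names, the 6-second duration and the 3-segment window are illustrative assumptions.

```javascript
// Render a live HLS media playlist (RFC 8216) for a sliding window of segments.
// segments: [{ uri, duration }] in playback order; firstSeq: sequence number of segments[0].
function liveMediaPlaylist(segments, firstSeq) {
  // Every EXTINF duration, rounded to the nearest integer, must be <= the target duration.
  const target = Math.max(...segments.map((s) => Math.round(s.duration)));
  const lines = [
    "#EXTM3U",
    "#EXT-X-VERSION:3", // version 3+ allows fractional EXTINF durations
    `#EXT-X-TARGETDURATION:${target}`,
    `#EXT-X-MEDIA-SEQUENCE:${firstSeq}`,
  ];
  for (const s of segments) {
    lines.push(`#EXTINF:${s.duration.toFixed(3)},`, s.uri);
  }
  // No #EXT-X-ENDLIST: the stream is live, so the player keeps re-fetching the playlist.
  return lines.join("\n") + "\n";
}

// Usage with a hypothetical window of three 6-second segments, numbered 120-122.
const recent = [120, 121, 122].map((n) => ({ uri: `segment${n}.ts`, duration: 6 }));
console.log(liveMediaPlaylist(recent, 120));
```

As new segments are recorded, the server drops the oldest entry, increments the media sequence number, and the player picks up the change on its next HTTP request.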

It’s all good enough, but...

Why HLS is bad

The latency. Take, for example, video surveillance of construction sites. A facility is under construction for years, and all that time the poor camera films the site day and night, 24/7. This is a situation where low latency is not needed.

Another example is boars. Real boars. Farmers in Ohio suffer from an invasion of wild boars that eat the crops and trample them down like locusts, threatening the farms’ financial well-being. Enterprising startups have launched a video surveillance system built on RTSP cameras that monitors the fields in real time and triggers a trap when the intruders appear. Here low latency is critically important: with HLS and its 15-second latency, the boars will run away before the trap is sprung.

Here is one more example: video presentations, where someone demonstrates a product and expects a prompt response. With high latency, they show you the product on camera and ask, “So how do you like it?”, and that question reaches you only 15 seconds later. In 15 seconds, a signal could travel to the Moon and back almost six times. No, we don’t want latency like that. It looks more like a pre-recorded video than a live one. And in a sense it is pre-recorded, because this is how HLS works: it records chunks of video to disk or to the server’s memory, and the player downloads the recorded chunks. That is how HTTP Live works, which isn’t live at all.

Why does it happen? HTTP’s ubiquity and simplicity come at the cost of speed: HTTP was not originally designed for rapidly downloading and displaying thousands of large video fragments (HLS segments). The segments are downloaded and played in high quality, but slowly: it takes those 15 seconds or more for them to be downloaded, buffered, and decoded.
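A rough back-of-the-envelope model shows where delays of this size come from. RFC 8216 says a client should not start playback from a segment that begins less than three target durations from the end of the playlist, and a segment only appears in the playlist once it has been fully recorded. The sketch adds those up; the 1-second overhead for encoding, HTTP fetches and decoding is an assumption, and real players behave differently in detail.

```javascript
// Rough worst-case live latency of classic (non-low-latency) HLS, in seconds.
// targetDuration: the playlist's EXT-X-TARGETDURATION.
// overheadSec: an assumed allowance for encoding, HTTP fetches and decoding.
function worstCaseHlsLatency(targetDuration, overheadSec = 1) {
  // A segment is listed only once it is completely recorded, so the end of the
  // playlist can lag real time by up to one full segment.
  const recordingLag = targetDuration;
  // Per RFC 8216, the player should not start from a segment that begins less
  // than three target durations from the end of the playlist.
  const startOffset = 3 * targetDuration;
  return recordingLag + startOffset + overheadSec;
}

for (const t of [2, 4, 6]) {
  console.log(`${t}s segments: up to ~${worstCaseHlsLatency(t)}s behind live`);
}
```

With 3 to 4 second segments this model lands at 13 to 17 seconds, the same ballpark as the 15 seconds above. Shorter segments cut the delay but multiply the number of HTTP requests, which is the trade-off low-latency variants try to get around.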

It should be noted that Apple announced Low-Latency HLS in 2019, but that is a different story. We will take a closer look at its results later. For now, we also have MSE in stock.

Why MSE is good

Media Source Extensions (MSE) is native browser support for playing segmented video. It can be thought of as a native player for H.264 and AAC that you feed video segments to and which, unlike HLS, is not bound to a transport protocol. This means you can choose WebSocket as the transport. In other words, segments are no longer downloaded via the ancient request-response (HTTP) model but flow continuously over a WebSocket connection, which is almost a direct TCP channel. This helps a lot in reducing latency, which can drop to 3-5 seconds. Such latency is not fantastic, but it is good enough for most projects that do not require hard real time. Complexity and resource usage are also relatively low: a TCP channel is opened, and almost the same segments as in HLS flow through it, to be collected by the player and played back.
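On the browser side the core of the WebSocket + MSE approach is small: create a `MediaSource`, add a `SourceBuffer`, and append each binary segment that arrives over the WebSocket. One detail trips people up: `SourceBuffer.appendBuffer()` throws if it is called while the buffer is still `updating`, so segments have to be queued. The sketch below assumes the server sends fragmented MP4 (an initialization segment first, then media segments) as binary WebSocket messages; the server URL and codec string in the comments are placeholders, and the code is not taken from WCS’s own player.

```javascript
// Feed media segments arriving over a WebSocket into an MSE SourceBuffer.
// appendBuffer() throws InvalidStateError while `updating` is true, so segments
// are queued and appended one at a time, continuing on each "updateend" event.
class SegmentAppender {
  constructor(sourceBuffer) {
    this.sourceBuffer = sourceBuffer;
    this.queue = [];
    sourceBuffer.addEventListener("updateend", () => this.flush());
  }

  // Call this from ws.onmessage with event.data (an ArrayBuffer).
  enqueue(segment) {
    this.queue.push(segment);
    this.flush();
  }

  // Append the next queued segment if the SourceBuffer is idle.
  flush() {
    if (this.sourceBuffer.updating || this.queue.length === 0) return;
    this.sourceBuffer.appendBuffer(this.queue.shift());
  }
}

// Browser wiring (placeholder URL and codec string):
// const video = document.querySelector("video");
// const mediaSource = new MediaSource();
// video.src = URL.createObjectURL(mediaSource);
// mediaSource.addEventListener("sourceopen", () => {
//   const sb = mediaSource.addSourceBuffer('video/mp4; codecs="avc1.42E01E, mp4a.40.2"');
//   const appender = new SegmentAppender(sb);
//   const ws = new WebSocket("wss://example.com/stream");
//   ws.binaryType = "arraybuffer";
//   ws.onmessage = (event) => appender.enqueue(event.data);
// });
```

Because the class only touches `updating`, `appendBuffer()` and `addEventListener()`, it can be exercised outside a browser with a stand-in object.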

Why MSE is bad

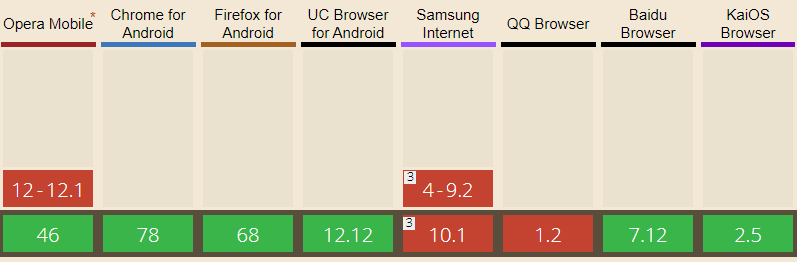

It does not work everywhere. Like WebRTC, it has lower browser penetration than HLS. iPhones (iOS) have an especially notable history of not supporting MSE, which makes MSE hardly suitable as the sole solution for a startup.

It is fully available in the following browsers: Edge, Firefox, Chrome, Safari, Android Browser, Opera Mobile, Chrome for Android, Firefox for Android, UC Browser for Android.

Limited MSE support appeared in iOS Safari quite recently, starting with iOS 13, and even then only on iPad (iPadOS 13), not on iPhone.
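Since none of the three technologies covers every browser, a page usually detects what is available and falls back. A minimal sketch of such detection follows. The preference order (WebRTC, then MSE, then native HLS) and the H.264 Baseline + AAC-LC codec string are assumptions; a project that does not need the lowest latency might pass a different order and try MSE first.

```javascript
// Fragmented MP4 with H.264 Baseline 3.0 video and AAC-LC audio (assumed codecs).
const MSE_MIME = 'video/mp4; codecs="avc1.42E01E, mp4a.40.2"';

// Capability checks. `env` is the browser global object (window),
// `video` is an HTMLVideoElement.
const checks = {
  webrtc: (env) => typeof env.RTCPeerConnection === "function",
  mse: (env) => Boolean(env.MediaSource) && env.MediaSource.isTypeSupported(MSE_MIME),
  // canPlayType() returns "", "maybe" or "probably".
  hls: (env, video) => video.canPlayType("application/vnd.apple.mpegurl") !== "",
};

// Return the first supported technology from `order`, or null if none works.
function pickPlaybackTech(env, video, order = ["webrtc", "mse", "hls"]) {
  return order.find((tech) => checks[tech](env, video)) || null;
}

// In a browser: pickPlaybackTech(window, document.createElement("video"))
```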

RTSP leg

We have discussed delivery in the video server → browser direction. Besides that, you will also need two more things:

1) To deliver your video from the IP camera to the server.

2) To convert the video into one of the formats / protocols described above.

This is where the server side comes in.

Ta-dah… Meet Web Call Server 5 (or simply WCS to friends). Someone has to receive the RTSP traffic, depacketize the video correctly, convert it to WebRTC, HLS or MSE, preferably without the transcoder over-compressing it, and send it to the browser in presentable shape, free of artifacts and freezes.

The task does not look complicated at first glance, but there are so many hidden pitfalls, Chinese cameras, and conversion nuances behind it that it’s really horrible. As a matter of fact, it isn’t possible without hacks, but it works, and works well. In production.
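How WCS depacketizes internally is not described here, so the following is only a sketch of the first step any RTSP-ingesting server has to take when a camera sends RTP interleaved in the TCP connection: strip the 4-byte `$` framing defined in RFC 2326 (section 10.12) and read the 12-byte RTP fixed header from RFC 3550. Reassembling H.264 NAL units from the payload (RFC 6184: single NAL units, STAP-A, FU-A) is the next step and is left out.

```javascript
// Parse one RTSP interleaved frame and the RTP fixed header inside it.
// `buf` is a Uint8Array holding bytes read from the RTSP TCP socket.
// Returns null if the frame is incomplete or malformed.
function parseInterleavedRtp(buf) {
  // Interleaved frame: "$" (0x24), 1-byte channel id, 2-byte big-endian length.
  if (buf.length < 4 || buf[0] !== 0x24) return null;
  const channel = buf[1];
  const length = (buf[2] << 8) | buf[3];
  if (buf.length < 4 + length) return null; // wait for more bytes from the socket
  const rtp = buf.subarray(4, 4 + length);
  if (rtp.length < 12) return null; // shorter than the RTP fixed header

  const view = new DataView(rtp.buffer, rtp.byteOffset, rtp.byteLength);
  const version = rtp[0] >> 6;
  const hasPadding = (rtp[0] & 0x20) !== 0;
  const hasExtension = (rtp[0] & 0x10) !== 0;
  const csrcCount = rtp[0] & 0x0f;
  const marker = (rtp[1] & 0x80) !== 0; // for H.264: last packet of an access unit
  const payloadType = rtp[1] & 0x7f;
  const sequenceNumber = view.getUint16(2); // DataView reads big-endian by default
  const timestamp = view.getUint32(4);
  const ssrc = view.getUint32(8);

  // Skip the CSRC list and, if present, the header extension.
  let offset = 12 + 4 * csrcCount;
  if (hasExtension) {
    if (offset + 4 > rtp.length) return null;
    const extensionWords = view.getUint16(offset + 2); // length in 32-bit words
    offset += 4 + 4 * extensionWords;
  }
  // With padding, the last byte holds the number of padding bytes to drop.
  const end = hasPadding ? rtp.length - rtp[rtp.length - 1] : rtp.length;
  if (offset > end) return null;

  return {
    channel, version, marker, payloadType, sequenceNumber, timestamp, ssrc,
    payload: rtp.subarray(offset, end),
    frameLength: 4 + length, // bytes to consume from the socket buffer
  };
}
```

A real server would also check the channel against the pair negotiated in SETUP (with `interleaved=0-1`, channel 1 usually carries RTCP, not RTP) and track sequence numbers to detect losses.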

The delivery scheme

As a result, a complete scheme of RTSP content delivery with conversion on an intermediate server takes shape.

One of the questions our Indian colleagues ask most often is, “Is it possible? Directly, without a server?” No, it isn’t; you need a server side that does the work. In the cloud, on dedicated hardware, or on a Core i7 on your balcony, but you cannot do without it.

Let’s get back to our year 2020

So, here’s the recipe for cooking RTSP in your browser:

- Take a fresh WCS (Web Call Server).

- Add WebRTC, HLS or MSE to taste.

- Serve it on the web page.

Enjoy your meal!

No, that’s not all yet.

Inquiring minds will definitely want to ask, “How? Really, how can this be done? What will it look like in the browser?”

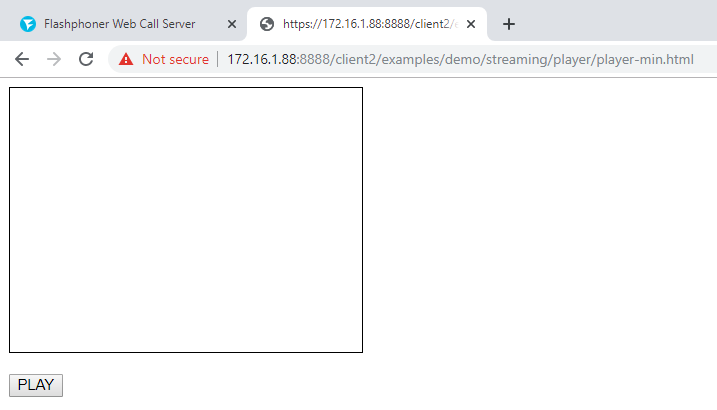

We would like to present a minimalist WebRTC player, put together as a kitchen-table effort.

1) Connect the main API script flashphoner.js and the script my_player.js, which we are going to create a bit later, to the web page:

```html
<script type="text/javascript" src="../../../../flashphoner.js"></script>
<script type="text/javascript" src="my_player.js"></script>
```

2) Initialize the API in the body of the web page:

```html
<body onload="init_api()">
```

3) Add a div to the page to serve as the video container. Set its size and border:

```html
<div id="myVideo" style="width:320px;height:240px;border: solid 1px"></div>
```

4) Add the Play button; clicking it will connect to the server and start playing the video:

```html
<input type="button" onclick="connect()" value="PLAY"/>
```

5) Now let’s create the script my_player.js, which will contain the main code of our player. Declare the constants and variables:

```javascript
var SESSION_STATUS = Flashphoner.constants.SESSION_STATUS;
var STREAM_STATUS = Flashphoner.constants.STREAM_STATUS;
var session;
```

6) Initialize the API when the HTML page is loaded:

```javascript
function init_api() {
    console.log("init api");
    Flashphoner.init({});
}
```

7) Connect to the WCS server via WebSocket. For everything to work correctly, replace "wss://demo.flashphoner.com" with the address of your WCS:

```javascript
function connect() {
    session = Flashphoner.createSession({urlServer: "wss://demo.flashphoner.com"}).on(SESSION_STATUS.ESTABLISHED, function(session) {
        console.log("connection established");
        playStream(session);
    });
}
```

8) After that, pass two parameters, “name” and “display”: “name” is the RTSP URL of the stream to play, and “display” is the myVideo element our player will be mounted into. Set the URL of your own stream here instead of ours:

```javascript
// The session established in connect() is passed in explicitly.
function playStream(session) {
    var options = {name: "rtsp://b1.dnsdojo.com:1935/live/sys2.stream", display: document.getElementById("myVideo")};
    var stream = session.createStream(options).on(STREAM_STATUS.PLAYING, function(stream) {
        console.log("playing");
    });
    stream.play();
}
```

Save the files and try to launch the player. Is your RTSP stream playing?

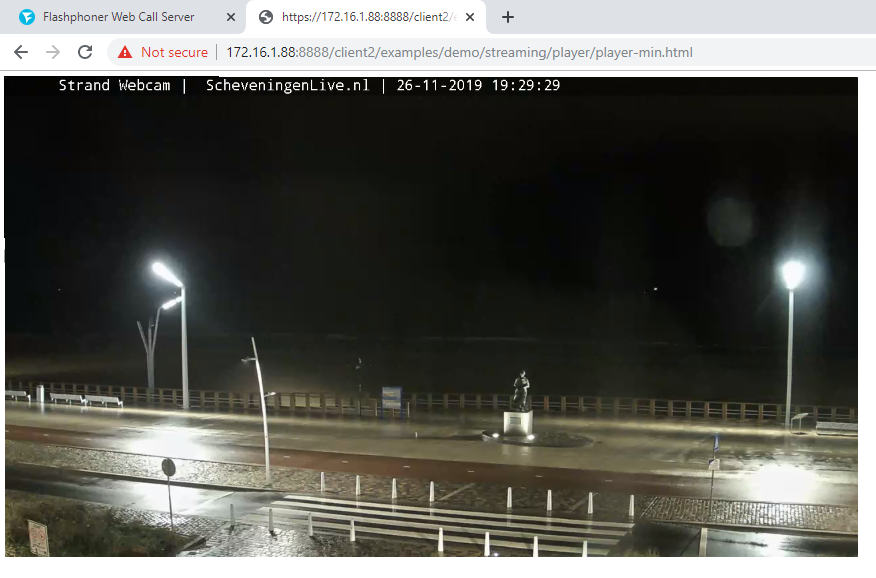

When we tested it, we played this one: rtsp://b1.dnsdojo.com:1935/live/sys2.stream.

And this is what it looks like:

The player before the Play button is clicked

The player with the video playing

There is not much code at all:

HTML

```html
<!DOCTYPE html>
<html lang="en">
<head>
    <script type="text/javascript" src="../../../../flashphoner.js"></script>
    <script type="text/javascript" src="my_player.js"></script>
</head>
<body onload="init_api()">
    <div id="myVideo" style="width:320px;height:240px;border: solid 1px"></div>
    <br/><input type="button" onclick="connect()" value="PLAY"/>
</body>
</html>
```

JavaScript

```javascript
//Status constants
var SESSION_STATUS = Flashphoner.constants.SESSION_STATUS;
var STREAM_STATUS = Flashphoner.constants.STREAM_STATUS;

//Websocket session
var session;

//Init Flashphoner API on page load
function init_api() {
    console.log("init api");
    Flashphoner.init({});
}

//Connect to WCS server over websockets
function connect() {
    session = Flashphoner.createSession({urlServer: "wss://demo.flashphoner.com"}).on(SESSION_STATUS.ESTABLISHED, function(session) {
        console.log("connection established");
        playStream(session);
    });
}

//Play the stream with the given name and mount it into the myVideo div element
function playStream(session) {
    var options = {name: "rtsp://b1.dnsdojo.com:1935/live/sys2.stream", display: document.getElementById("myVideo")};
    var stream = session.createStream(options).on(STREAM_STATUS.PLAYING, function(stream) {
        console.log("playing");
    });
    stream.play();
}
```

We used demo.flashphoner.com as the server side. A link to the full code of the example is in the Links section at the bottom of the page.

Happy streaming!

Links

Integration of an RTSP player into a web page or mobile application
