I'm moving some of the older WebForms portions of my site that still run on bare metal to ASP.NET Core and Azure App Services, and while doing that I realized that I want to make sure my staging sites don't get indexed by Google/Bing.

I already have a robots.txt, but I want one that's specific to production and others that are specific to development or staging. I thought about a number of ways to solve this. I could have a static robots.txt and another robots-staging.txt and conditionally copy one over the other during my Azure DevOps CI/CD pipeline.

Then I realized the simplest possible thing would be to just make robots.txt dynamic. I thought about writing custom middleware, but that sounded like a hassle and more code than needed. I wanted to see just how simple this could be.

- You could do this as a single inline middleware, and just lambda and func and linq the heck out of it all on one line

- You could write your own middleware and do lots of options, then activate it based on env.IsStaging(), etc.

- You could make a single Razor Page with environment taghelpers.
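
For comparison, the first option in the list above could look roughly like the sketch below. It is not the approach this post ends up using. `RobotsTxt.BuildBody` is a hypothetical helper invented for illustration, the `Disallow` rules simply mirror the ones used later in this post, and the `Configure` signature assumes ASP.NET Core 2.x (on 3.0 and later you would take `IWebHostEnvironment` instead of `IHostingEnvironment`).

```csharp
using System;
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Hosting;
using Microsoft.AspNetCore.Http;

public static class RobotsTxt
{
    // Hypothetical helper (not part of ASP.NET Core): returns the robots.txt
    // body for a given environment name.
    public static string BuildBody(string environmentName)
    {
        bool Is(string name) =>
            string.Equals(environmentName, name, StringComparison.OrdinalIgnoreCase);

        if (Is("Development") || Is("Staging"))
        {
            // Keep crawlers out of non-production sites entirely.
            return "User-agent: *\nDisallow: /\n";
        }

        if (Is("Production"))
        {
            return "User-agent: *\n" +
                   "Disallow: /blog/private\n" +
                   "Disallow: /blog/secret\n" +
                   "Disallow: /blog/somethingelse\n";
        }

        // Any other environment: no Disallow rules.
        return "User-agent: *\n";
    }
}

public class Startup
{
    // ASP.NET Core 2.x signature; on 3.0+ take IWebHostEnvironment instead.
    public void Configure(IApplicationBuilder app, IHostingEnvironment env)
    {
        var body = RobotsTxt.BuildBody(env.EnvironmentName);

        // Branch the pipeline for /robots.txt and answer with plain text.
        app.Map("/robots.txt", robots => robots.Run(async context =>
        {
            context.Response.ContentType = "text/plain";
            await context.Response.WriteAsync(body);
        }));

        // ... the rest of the pipeline (static files, MVC) goes here.
    }
}
```

It works, but every rule change means a recompile, which is part of why the Razor Page option is attractive.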

The last one seemed easiest and would also mean I could change the cshtml without a full recompile, so I made a RobotsTxt.cshtml single razor page. No page model, no code behind. Then I used the built-in environment tag helper to conditionally generate parts of the file. Note also that I forced the mime type to text/plain and I don't use a Layout page, as this needs to stand alone.

@page

@{

Layout = null;

this.Response.ContentType = "text/plain";

}

# /robots.txt file for http://www.hanselman.com/

User-agent: *

<environment include="Development,Staging">Disallow: /</environment>

<environment include="Production">Disallow: /blog/private

Disallow: /blog/secret

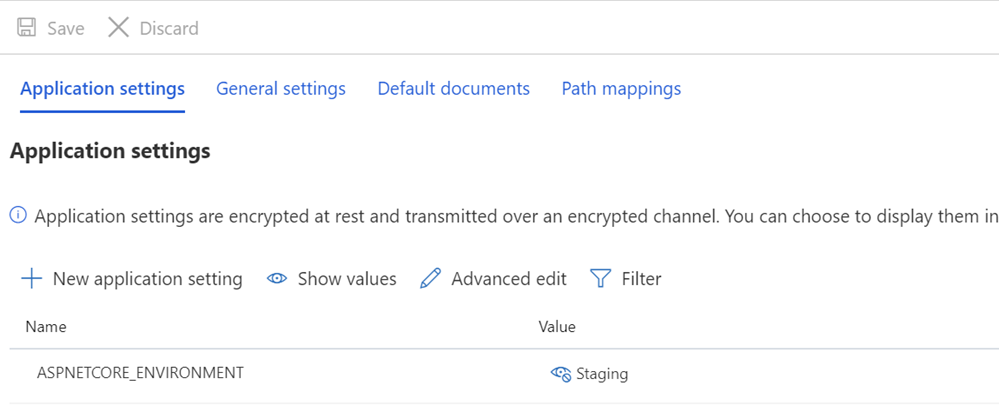

Disallow: /blog/somethingelse</environment>I then make sure that my Staging and/or Production systems have ASPNETCORE_ENVIRONMENT variables set appropriately.

I also want to point out what may look like odd spacing and how some text is butted up against the TagHelpers. Remember that a TagHelper's tag sometimes "disappears" (is elided) when it's done its thing, but the whitespace around it remains. So I want User-agent: * to have a line, and then Disallow to show up immediately on the next line. While it might be prettier source code to have that start on another line, it's not a correct file then. I want the result to be tight and above all, correct. This is for staging:

    User-agent: *
    Disallow: /

This now gives me a robots.txt at /robotstxt but not at /robots.txt. See the issue? Robots.txt is a file (or a fake one) so I need to map a route from the request for /robots.txt to the Razor page called RobotsTxt.cshtml.

Here I add a RazorPagesOptions in my Startup.cs with a custom PageRoute that maps /robots.txt to /robotstxt. (I've always found this API annoying as the parameters should, IMHO, be reversed like ("from", "to"), so watch out for that, lest you waste ten minutes like I just did.)

    public void ConfigureServices(IServiceCollection services)
    {
        services.AddMvc()
            .AddRazorPagesOptions(options =>
            {
                // AddPageRoute takes the page name first, then the route to map to it.
                options.Conventions.AddPageRoute("/robotstxt", "/Robots.Txt");
            });
    }

And that's it! Simple and clean.
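
One way to sidestep the parameter-order trap mentioned above is to use C# named arguments, so the call site says which string is which. This is just a sketch of the same registration, assuming the ASP.NET Core 2.x `AddPageRoute(pageName, route)` extension method:

```csharp
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        services.AddMvc()
            .AddRazorPagesOptions(options =>
            {
                // Named arguments make the "to"/"from" order explicit:
                // pageName is the Razor Page, route is the URL that maps to it.
                options.Conventions.AddPageRoute(
                    pageName: "/robotstxt",
                    route: "/Robots.Txt");
            });
    }
}
```

The behavior is identical to the positional call; only the readability changes.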

You could also add caching if you wanted, either as a larger middleware, or even in the cshtml Page, like

    context.Response.Headers.Add("Cache-Control", $"max-age=SOMELARGENUMBEROFSECONDS");

but I'll leave that small optimization as an exercise to the reader.
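
For anyone who wants a head start on that exercise, here is a minimal sketch of the middleware flavor. It assumes ASP.NET Core 2.x with `UseMvc()`, and the one-day `max-age` is an arbitrary value picked for illustration, not a recommendation:

```csharp
using System;
using Microsoft.AspNetCore.Builder;

public class Startup
{
    public void Configure(IApplicationBuilder app)
    {
        // Register this before MVC so the header is set before the page writes its body.
        app.Use(async (context, next) =>
        {
            if (context.Request.Path.Equals("/robots.txt", StringComparison.OrdinalIgnoreCase))
            {
                // 86400 seconds = 1 day; an arbitrary value chosen for illustration.
                context.Response.Headers["Cache-Control"] = "public, max-age=86400";
            }

            await next();
        });

        app.UseMvc();
    }
}
```

Assigning through the indexer rather than calling `Headers.Add` avoids an exception if something earlier in the pipeline has already set `Cache-Control`.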

UPDATE: After I was done I found this robots.txt middleware and NuGet up on GitHub. I'm still happy with my code and I don't mind not having an external dependency, but it's nice to file this one away for future, more sophisticated needs and projects.

How do you handle your robots.txt needs? Do you even have one?